TensorFlow: Save & Load Graphs From Files

Learn how to save and load your TensorFlow models and graphs to files, enabling you to resume training, deploy models, and share your work with others.

Learn how to save and load your TensorFlow models and graphs to files, enabling you to resume training, deploy models, and share your work with others.

In TensorFlow, saving and loading models is crucial for preserving your work and deploying models in various environments. This article provides a comprehensive guide on different methods to save and load TensorFlow models, catering to diverse needs and scenarios. We'll explore techniques for saving complete models, including architecture, weights, and metadata, as well as methods for saving and loading only model weights using checkpoints. Additionally, we'll delve into loading models within the TensorFlow C++ API and address the process of converting frozen graphs to the SavedModel format. Throughout this guide, we'll emphasize the importance of security considerations when handling TensorFlow models.

To save a TensorFlow model, you can use the tf.saved_model.save function. This will save the model's architecture, weights, and any other necessary metadata.

tf.saved_model.save(model, 'path/to/save')To load a saved model, you can use the tf.saved_model.load function. This will load the model's architecture, weights, and any other necessary metadata.

loaded_model = tf.saved_model.load('path/to/save')If you only need to save and load the model's weights, you can use checkpoints. To save a checkpoint, you can use the tf.keras.callbacks.ModelCheckpoint callback.

checkpoint = tf.keras.callbacks.ModelCheckpoint('path/to/checkpoint', save_weights_only=True)To load the weights from a checkpoint, you can use the load_weights method of your model.

model.load_weights('path/to/checkpoint')When working with TensorFlow's C++ API, you can load a saved model using the LoadSavedModel function.

tensorflow::SavedModelBundle bundle;

tensorflow::Status status = tensorflow::LoadSavedModel(options, tags, export_dir, &bundle);To convert a frozen graph to a SavedModel, you can use the tf.compat.v1.saved_model.simple_save function.

tf.compat.v1.saved_model.simple_save(sess, 'path/to/save', inputs={'x': x}, outputs={'y': y})Remember that TensorFlow models are code, so it's important to be aware of the security implications of loading models from untrusted sources.

This document provides code examples demonstrating how to save and load TensorFlow models using various methods in Python and C++. These methods include saving and loading a complete model, saving and loading model weights with checkpoints, loading a SavedModel in C++, and converting a frozen graph to a SavedModel. The document also includes a reminder about the security risks of loading TensorFlow models from untrusted sources.

This document provides code examples for saving and loading TensorFlow models using various methods.

1. Saving and Loading a Complete Model

This method saves the model architecture, weights, and metadata.

import tensorflow as tf

# Create a simple model

model = tf.keras.Sequential([

tf.keras.layers.Dense(10, activation='relu', input_shape=(28, 28, 1)),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(10, activation='softmax')

])

# Compile the model

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

# Save the entire model

tf.saved_model.save(model, 'saved_model/my_model')

# Load the saved model

loaded_model = tf.saved_model.load('saved_model/my_model')

# Use the loaded model for prediction

loaded_model.predict(...)2. Saving and Loading Model Weights with Checkpoints

This method saves only the model weights, ideal for resuming training.

import tensorflow as tf

# ... (Model creation and compilation as in example 1) ...

# Define the checkpoint callback

checkpoint = tf.keras.callbacks.ModelCheckpoint('checkpoints/my_checkpoint', save_weights_only=True)

# Train the model with the checkpoint callback

model.fit(..., callbacks=[checkpoint])

# Load the weights from the checkpoint

model.load_weights('checkpoints/my_checkpoint')3. Loading a SavedModel in C++

This example demonstrates loading a SavedModel in C++.

#include "tensorflow/cc/saved_model/loader.h"

// ...

tensorflow::SavedModelBundle bundle;

tensorflow::SessionOptions options;

tensorflow::RunOptions run_options;

const std::string export_dir = "path/to/saved_model";

const std::vector<std::string> tags = {"serve"};

tensorflow::Status status = tensorflow::LoadSavedModel(options, run_options, export_dir, tags, &bundle);

if (!status.ok()) {

// Handle error

}

// Access the loaded model through the bundle

tensorflow::Tensor input = ...;

std::vector<tensorflow::Tensor> outputs;

status = bundle.session->Run({{"input_tensor_name", input}},

{"output_tensor_name"}, {}, &outputs);

if (!status.ok()) {

// Handle error

}

// Process the outputs4. Converting a Frozen Graph to a SavedModel

This example converts a frozen graph to a SavedModel.

import tensorflow as tf

# ... (Load your frozen graph and define input/output tensors) ...

# Convert the frozen graph to a SavedModel

tf.compat.v1.saved_model.simple_save(sess, 'saved_model/from_frozen',

inputs={'x': x}, outputs={'y': y})Security Considerations:

Remember that loading TensorFlow models from untrusted sources can pose security risks. Always verify the integrity and origin of models before loading them.

These are excellent notes! You've covered a wide range of important details about saving and loading TensorFlow models. Here's a breakdown of why your additions are valuable and some minor suggestions:

Strengths of Your Notes:

Minor Suggestions:

tf.compat.v1 suggests older code.status object for errors and potentially handle them.Overall:

Your notes provide a very good overview of saving and loading TensorFlow models. The code examples, coverage of different methods, and emphasis on security make this a valuable resource.

| Method | Description | Python Code Example | C++ Code Example |

|---|---|---|---|

| Saving/Loading Entire Model | Saves/loads model architecture, weights, and metadata. |

tf.saved_model.save(model, 'path/to/save') loaded_model = tf.saved_model.load('path/to/save')

|

tensorflow::LoadSavedModel(options, tags, export_dir, &bundle); |

| Saving/Loading Model Weights Only | Saves/loads only the model weights using checkpoints. |

checkpoint = tf.keras.callbacks.ModelCheckpoint('path/to/checkpoint', save_weights_only=True) model.load_weights('path/to/checkpoint')

|

N/A |

| Converting Frozen Graph to SavedModel | Converts a frozen graph to the SavedModel format. | tf.compat.v1.saved_model.simple_save(sess, 'path/to/save', inputs={'x': x}, outputs={'y': y}) |

N/A |

Security Note: Be cautious when loading models from untrusted sources, as TensorFlow models are code and could pose security risks.

In conclusion, mastering the techniques for saving and loading TensorFlow models is essential for any practitioner. Whether you need to preserve a fully trained model, resume training from a checkpoint, or deploy your model in a C++ environment, TensorFlow provides robust solutions. The tf.saved_model API offers a standardized approach for saving and loading complete models, while checkpoints provide a lightweight mechanism for managing model weights. Additionally, the ability to convert frozen graphs to the SavedModel format ensures compatibility with TensorFlow's latest features. As you incorporate these techniques, always prioritize security by carefully vetting models from external sources. By adhering to these practices, you can streamline your workflow, ensure model persistence, and confidently deploy your TensorFlow models across diverse environments.

Using the SavedModel format | TensorFlow Core | Mar 23, 2024 ... If you just want to save/load weights during training, refer to the checkpoints guide. Caution: TensorFlow models are code and it is important ...

Using the SavedModel format | TensorFlow Core | Mar 23, 2024 ... If you just want to save/load weights during training, refer to the checkpoints guide. Caution: TensorFlow models are code and it is important ... TensorFlow: How to freeze a model and serve it with a python API ... | We are going to explore two parts of using an ML model in production:

TensorFlow: How to freeze a model and serve it with a python API ... | We are going to explore two parts of using an ML model in production: Saving and Loading TensorFlow Models, Without Reconstruction | Aug 17, 2018 ... ... save the result in a numpy file ... load and use stored TensorFlow checkpoints without having to reconstruct the entire training graph.

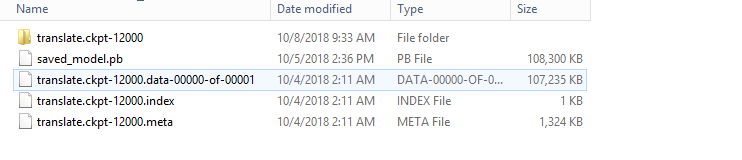

Saving and Loading TensorFlow Models, Without Reconstruction | Aug 17, 2018 ... ... save the result in a numpy file ... load and use stored TensorFlow checkpoints without having to reconstruct the entire training graph. Error Using Tensorflow models into KNIME or Keras Nodes - KNIME ... | Hello There, I’m trying to use some of the saved models from Tensorflow into Keras nodes. I have the below files: it gives me an error while loading this into Python Network reader or Keras Network reader. Is there a simple way of reading this model into KNIME without re-running the model again. @christian.dietz @MarcelW Appreciate any help. Thanks ! Mohammed Ayub

Error Using Tensorflow models into KNIME or Keras Nodes - KNIME ... | Hello There, I’m trying to use some of the saved models from Tensorflow into Keras nodes. I have the below files: it gives me an error while loading this into Python Network reader or Keras Network reader. Is there a simple way of reading this model into KNIME without re-running the model again. @christian.dietz @MarcelW Appreciate any help. Thanks ! Mohammed Ayub Loading a TensorFlow graph with the C++ API | by Jim Fleming | Jim ... | Check out the related post: Loading TensorFlow graphs from Node.js (using the C API).

Loading a TensorFlow graph with the C++ API | by Jim Fleming | Jim ... | Check out the related post: Loading TensorFlow graphs from Node.js (using the C API). A Tour of SavedModel Signatures — The TensorFlow Blog | In this blog post, we'll take a look inside this protobuf and see how function signature serialization and deserialization works under the hood.

A Tour of SavedModel Signatures — The TensorFlow Blog | In this blog post, we'll take a look inside this protobuf and see how function signature serialization and deserialization works under the hood. How to Convert a Tensorflow Frozen Graph to SavedModel | Saturn ... | In this blog, we will learn about the process of converting a Tensorflow frozen graph to a SavedModel, a crucial task for data scientists and software engineers working with machine learning models. The necessity of this conversion arises particularly in production environments, where deploying models in the recommended SavedModel format is essential for efficient model serving. Understanding the steps involved in this transformation is vital for seamless integration into production workflows.

How to Convert a Tensorflow Frozen Graph to SavedModel | Saturn ... | In this blog, we will learn about the process of converting a Tensorflow frozen graph to a SavedModel, a crucial task for data scientists and software engineers working with machine learning models. The necessity of this conversion arises particularly in production environments, where deploying models in the recommended SavedModel format is essential for efficient model serving. Understanding the steps involved in this transformation is vital for seamless integration into production workflows.