PyTorch Two Input Network Tutorial: Step-by-Step Guide

Learn how to build a neural network in PyTorch that effectively processes and learns from two distinct input sources.

In PyTorch, a network handles multiple inputs through its model's forward function: you define a separate processing path for each input, combine the resulting features, and pass the combined features through the remaining layers to produce the final output.

To build a PyTorch neural network with multiple inputs, you'll primarily work within the forward function of your nn.Module. Here's a breakdown:

Define Inputs in __init__:

Define a separate processing path for each input in the __init__ method.

    class TwoInputNet(nn.Module):
        def __init__(self):
            super().__init__()
            self.image_layers = nn.Sequential(...)  # Layers for image input
            self.text_layers = nn.Sequential(...)  # Layers for text input

Process Inputs in forward:

Pass each input through its own path in the forward function.

    def forward(self, image, text):
        image_features = self.image_layers(image)
        text_features = self.text_layers(text)

Combine Features:

    combined_features = torch.cat((image_features, text_features), dim=1)  # Concatenate along a specific dimension

Final Layers and Output:

    output = self.classifier(combined_features)  # Assuming 'classifier' is a final linear layer
    return output

Example:

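    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    class TwoInputNet(nn.Module):
        def __init__(self):
            super().__init__()
            self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
            self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
            self.fc1 = nn.Linear(640, 50)  # 320 features per input, two inputs
            self.fc2 = nn.Linear(50, 10)

        def extract(self, x):
            # Both inputs pass through the same convolutional layers (shared weights).
            # Spatial size: 28 -> 24 -> 12 -> 8 -> 4
            x = F.max_pool2d(torch.relu(self.conv1(x)), 2)
            x = F.max_pool2d(torch.relu(self.conv2(x)), 2)
            return x.view(x.size(0), -1)  # 20 * 4 * 4 = 320 features

        def forward(self, input1, input2):
            # Concatenate the features of both inputs along the feature dimension
            x = torch.cat((self.extract(input1), self.extract(input2)), dim=1)
            x = torch.relu(self.fc1(x))
            x = self.fc2(x)
            return x

    # Example usage
    input1 = torch.randn(1, 1, 28, 28)
    input2 = torch.randn(1, 1, 28, 28)
    model = TwoInputNet()
    output = model(input1, input2)
    print(output)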

This Python code defines a PyTorch neural network model named MultiInputNet designed to handle two types of input data: images and numerical data. The model processes the image data through a series of convolutional and pooling layers, while the numerical data goes through fully connected layers. The features extracted from both branches are then concatenated and passed through additional fully connected layers to produce the final output. The code includes an example of how to create an instance of the model and pass sample image and numerical data through it.

    import torch
    import torch.nn as nn

    class MultiInputNet(nn.Module):
        def __init__(self):
            super(MultiInputNet, self).__init__()

            # Image input branch
            self.image_layers = nn.Sequential(
                nn.Conv2d(1, 16, kernel_size=3, padding=1),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2),
                nn.Conv2d(16, 32, kernel_size=3, padding=1),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2),
                nn.Flatten(),
            )

            # Numerical input branch
            self.numerical_layers = nn.Sequential(
                nn.Linear(5, 16),
                nn.ReLU(),
                nn.Linear(16, 32),
                nn.ReLU(),
            )

            # Combined layers
            self.combined_layers = nn.Sequential(
                nn.Linear(1600, 128),  # 1568 (image: 32 * 7 * 7) + 32 (numerical)
                nn.ReLU(),
                nn.Linear(128, 10),
            )

        def forward(self, image_input, numerical_input):
            # Process image input
            image_features = self.image_layers(image_input)

            # Process numerical input
            numerical_features = self.numerical_layers(numerical_input)

            # Combine features (concatenation in this example)
            combined_features = torch.cat((image_features, numerical_features), dim=1)

            # Final layers and output
            output = self.combined_layers(combined_features)
            return output

    # Example usage
    image_data = torch.randn(1, 1, 28, 28)  # Example image input
    numerical_data = torch.randn(1, 5)  # Example numerical input
    model = MultiInputNet()
    output = model(image_data, numerical_data)
    print(output.shape)  # Expected output shape: torch.Size([1, 10])

Explanation:

Initialization (__init__):

Separate nn.Sequential blocks are defined for image processing (image_layers) and numerical data processing (numerical_layers).

combined_layers will handle the concatenated features.

Forward Pass (forward):

image_input is passed through self.image_layers.

numerical_input is passed through self.numerical_layers.

torch.cat combines the outputs of the two branches along dim=1 (concatenating feature vectors).

The combined features are passed through self.combined_layers to produce the final output.

Key Points:

Input Branch Architectures: The architecture of each input branch (e.g., image_layers, numerical_layers) should be tailored to the specific type of input data. For instance, convolutional layers are well-suited for images, while fully connected layers are often used for numerical data.

Feature Dimensionality: Pay attention to the output dimensions of your input branches. You might need to adjust the number of neurons in the final layers of each branch or add additional layers to ensure that the concatenated features have a suitable dimensionality for the subsequent layers.
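One way to avoid computing the concatenated feature size by hand is to run a dummy tensor through each branch and read the size from the result. The sketch below assumes 1x28x28 images and 5 numerical features, matching the example inputs used earlier; the variable names are illustrative.

```python
import torch
import torch.nn as nn

# Illustrative branches (assumed inputs: 1x28x28 images, 5 numerical features).
image_branch = nn.Sequential(
    nn.Conv2d(1, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),
    nn.Conv2d(16, 32, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),
    nn.Flatten(),
)
numerical_branch = nn.Sequential(
    nn.Linear(5, 16), nn.ReLU(), nn.Linear(16, 32), nn.ReLU()
)

# Run dummy inputs through each branch without tracking gradients.
with torch.no_grad():
    image_dim = image_branch(torch.zeros(1, 1, 28, 28)).shape[1]
    numerical_dim = numerical_branch(torch.zeros(1, 5)).shape[1]

combined_dim = image_dim + numerical_dim
print(image_dim, numerical_dim, combined_dim)  # 1568 32 1600

# The first combined layer can then be sized from the measured value.
first_combined_layer = nn.Linear(combined_dim, 128)
```

Measuring the size this way keeps the combined layer consistent if the image resolution or the branch layers change.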

Alternative Combination Methods: While concatenation is a common way to combine features, you can explore other methods such as element-wise addition, element-wise multiplication, or attention-based fusion.
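The sketch below compares concatenation with two common alternatives, element-wise addition and element-wise multiplication. The tensors are random stand-ins for branch outputs, and the names are illustrative rather than taken from the models above.

```python
import torch

# Hypothetical branch outputs for a batch of 4 samples, 32 features each.
image_features = torch.randn(4, 32)
numerical_features = torch.randn(4, 32)

# Concatenation: the feature dimensions add up (32 + 32 = 64).
concatenated = torch.cat((image_features, numerical_features), dim=1)

# Element-wise addition and multiplication: both branches must produce
# the same number of features, and the size stays at 32.
summed = image_features + numerical_features
multiplied = image_features * numerical_features

print(concatenated.shape)  # torch.Size([4, 64])
print(summed.shape)        # torch.Size([4, 32])
print(multiplied.shape)    # torch.Size([4, 32])
```

Element-wise methods keep the downstream layers smaller, but they require each branch to end with the same feature size.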

Data Parallelism: If you're working with large datasets, consider using PyTorch's data parallelism features (e.g., nn.DataParallel, or DistributedDataParallel, which the PyTorch documentation recommends for multi-GPU training) to distribute the computation across multiple GPUs.
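nn.DataParallel splits each tensor argument along the batch dimension, so a two-input forward works as long as both inputs share the same batch size. The following is a minimal sketch under that assumption; TwoBranchNet is a hypothetical stand-in model, and the wrapper is applied only when more than one GPU is visible.

```python
import torch
import torch.nn as nn

class TwoBranchNet(nn.Module):
    """Minimal two-input model used only for this sketch (hypothetical)."""

    def __init__(self):
        super().__init__()
        self.branch_a = nn.Linear(5, 16)
        self.branch_b = nn.Linear(3, 16)
        self.head = nn.Linear(32, 10)

    def forward(self, a, b):
        features = torch.cat(
            (torch.relu(self.branch_a(a)), torch.relu(self.branch_b(b))), dim=1
        )
        return self.head(features)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = TwoBranchNet().to(device)

# Wrap the model only when more than one GPU is visible.
if torch.cuda.device_count() > 1:
    model = nn.DataParallel(model)

# Both inputs use the same batch size (8), so they are split consistently.
a = torch.randn(8, 5, device=device)
b = torch.randn(8, 3, device=device)
output = model(a, b)
print(output.shape)  # torch.Size([8, 10])
```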

Debugging and Visualization: Use print statements, the Python debugger, and TensorBoard to inspect the shapes of tensors at different stages of the network and visualize the network's architecture.
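Besides print statements, forward hooks offer a way to record the output shape of every layer without editing forward. The sketch below uses a small illustrative branch; the layer choices and the record_shape helper are assumptions made for this example.

```python
import torch
import torch.nn as nn

# A small illustrative branch; the layer choices are assumptions for this sketch.
branch = nn.Sequential(
    nn.Conv2d(1, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),
    nn.Flatten(),
)

shapes = []  # (layer name, output shape) pairs collected during the forward pass

def record_shape(module, inputs, output):
    shapes.append((module.__class__.__name__, tuple(output.shape)))

# Attach the hook to every layer in the branch, keeping the handles for cleanup.
handles = [layer.register_forward_hook(record_shape) for layer in branch]

branch(torch.randn(1, 1, 28, 28))
for name, shape in shapes:
    print(name, shape)

# Remove the hooks once the shapes have been inspected.
for handle in handles:
    handle.remove()
```

The last recorded shape is the flattened feature size that the combined layers receive from this branch.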

Regularization: To prevent overfitting, especially when dealing with multiple inputs, consider using regularization techniques like dropout, weight decay, or early stopping.
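As a sketch of two of these techniques, the block below adds a dropout layer to the combined layers and sets weight decay on the optimizer. The feature size of 64 and the hyperparameter values are placeholders chosen for illustration.

```python
import torch
import torch.nn as nn

# Illustrative head applied to concatenated features (assumed size: 64).
combined_layers = nn.Sequential(
    nn.Linear(64, 128),
    nn.ReLU(),
    nn.Dropout(p=0.5),  # randomly zeroes activations during training
    nn.Linear(128, 10),
)

# Weight decay (L2 penalty) is set on the optimizer; the values are placeholders.
optimizer = torch.optim.Adam(
    combined_layers.parameters(), lr=1e-3, weight_decay=1e-4
)

features = torch.randn(8, 64)

combined_layers.train()  # dropout is active in training mode
train_out = combined_layers(features)

combined_layers.eval()  # dropout is disabled in evaluation mode
with torch.no_grad():
    eval_out_1 = combined_layers(features)
    eval_out_2 = combined_layers(features)

print(torch.equal(eval_out_1, eval_out_2))  # True
```

Switching between train() and eval() matters here because dropout behaves differently in the two modes.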

Transfer Learning: If you have limited data for one or more input branches, explore using pre-trained models for those branches and fine-tuning them on your specific task.
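A common first step when reusing a pre-trained branch is to freeze its parameters and train only the new layers. The sketch below uses a randomly initialized stand-in for the pre-trained branch, so no real weights are loaded; pretrained_branch and new_head are hypothetical names.

```python
import torch
import torch.nn as nn

# Stand-in for a branch whose weights were loaded from a pre-trained model
# (hypothetical; no weights are actually loaded in this sketch).
pretrained_branch = nn.Sequential(nn.Linear(5, 16), nn.ReLU(), nn.Linear(16, 32))
new_head = nn.Linear(32, 10)

# Freeze the pre-trained branch so only the new head is updated.
for param in pretrained_branch.parameters():
    param.requires_grad = False

# Give the optimizer only the parameters that still require gradients.
trainable = [p for p in new_head.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=0.01)

loss = new_head(pretrained_branch(torch.randn(4, 5))).sum()
loss.backward()

# Frozen parameters never receive gradients.
print(all(p.grad is None for p in pretrained_branch.parameters()))  # True
```

Fine-tuning the whole branch later amounts to setting requires_grad back to True and passing those parameters to the optimizer as well.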

Experimentation: The optimal architecture and hyperparameters for a multi-input neural network will depend on your specific dataset and task. It's crucial to experiment with different configurations to find what works best.

This guide explains how to construct a PyTorch neural network that handles multiple input types.

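1. Initialization (__init__)

Define a separate processing path for each input in the __init__ method.

Example: self.image_layers = nn.Sequential(...) for image input and self.text_layers = nn.Sequential(...) for text input.

2. Forward Pass (forward)

Pass each input through its own path in the forward function.

Example: image_features = self.image_layers(image) and text_features = self.text_layers(text).

3. Feature Combination

Combine the branch outputs, for example with torch.cat((image_features, text_features), dim=1).

4. Final Layers and Output

Pass the combined features through the final layers to produce the output.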

Key Considerations:

By thoughtfully designing the __init__ and forward functions of your nn.Module, you can create sophisticated PyTorch models that effectively process and combine information from multiple inputs. Remember to consider the nature of your data, explore different architectural choices, and leverage techniques like data parallelism and regularization to build robust and high-performing multi-input neural networks.

nn.Module with multiple inputs - PyTorch Forums | Hey, I am interested in building a network having multiple inputs. I understand that when calling the forward function, only one Variable is taken in parameter. I have two possible use case here : the same image at multiple resolutions is used different images are used I would like some advice to design a nn.Module in the same fashion as alexnet for example. I have no idea how to : give multiple inputs to the nn.Module join the fc layers together I am following the example of imagenet, ...

nn.Module with multiple inputs - PyTorch Forums | Hey, I am interested in building a network having multiple inputs. I understand that when calling the forward function, only one Variable is taken in parameter. I have two possible use case here : the same image at multiple resolutions is used different images are used I would like some advice to design a nn.Module in the same fashion as alexnet for example. I have no idea how to : give multiple inputs to the nn.Module join the fc layers together I am following the example of imagenet, ... Multiple (numeric) Inputs in Neural Network for Classification - data ... | Hi! I have been struggling with this code for a couple days. I couldn’t find many similar posts but the one’s I found have attributed to the code below. I have 3 inputs that are three independent signals of a sensor. The rows represent a signal and the columns are values of that signal. On another file I have the target that is a column vector of 0 and 1’s. These 4 files are CSV. I am having trouble passing three inputs to the network. From what I’ve gathered, it should occur in the forward s...

Multiple (numeric) Inputs in Neural Network for Classification - data ... | Hi! I have been struggling with this code for a couple days. I couldn’t find many similar posts but the one’s I found have attributed to the code below. I have 3 inputs that are three independent signals of a sensor. The rows represent a signal and the columns are values of that signal. On another file I have the target that is a column vector of 0 and 1’s. These 4 files are CSV. I am having trouble passing three inputs to the network. From what I’ve gathered, it should occur in the forward s... Defining a Neural Network in PyTorch — PyTorch Tutorials 2.6.0+ ... | Apr 17, 2020 ... nn , to help you create and train neural networks. An nn.Module contains layers, and a method forward(input) that returns the output ...

Defining a Neural Network in PyTorch — PyTorch Tutorials 2.6.0+ ... | Apr 17, 2020 ... nn , to help you create and train neural networks. An nn.Module contains layers, and a method forward(input) that returns the output ... PyTorch multiple input and output - PyTorch Forums | My apology for this beginner question, I have watched serveral tutorials before but didn’t have a clue to solve my specific questions. I am building a model that takes 3 pics of an object as input and will output labels on 5 aspects. On the dataloader tutorials, there are lots of them saying I am not limited to have multiple input channels, how do I code this? And for the output, the tutorials are constantly talking the out-channel number is equal to however many labels that’s available in the...

PyTorch multiple input and output - PyTorch Forums | My apology for this beginner question, I have watched serveral tutorials before but didn’t have a clue to solve my specific questions. I am building a model that takes 3 pics of an object as input and will output labels on 5 aspects. On the dataloader tutorials, there are lots of them saying I am not limited to have multiple input channels, how do I code this? And for the output, the tutorials are constantly talking the out-channel number is equal to however many labels that’s available in the... Two image input CNN Model - vision - PyTorch Forums | Hello everybody, I’m a new CNN learner. I have some videos. each video represents a car. I extract frame images of car from each video and then also extract car’s voice spectrum images from each video. Let say I have 5 types of car. I have two main distinct dataset folders. One main folder contains car pictures. Other main folder contains voice spectrum images of each car. Each of main folders contain five subfolders to distinguish types of cars. My CNN network will have two input image -one ...

Two image input CNN Model - vision - PyTorch Forums | Hello everybody, I’m a new CNN learner. I have some videos. each video represents a car. I extract frame images of car from each video and then also extract car’s voice spectrum images from each video. Let say I have 5 types of car. I have two main distinct dataset folders. One main folder contains car pictures. Other main folder contains voice spectrum images of each car. Each of main folders contain five subfolders to distinguish types of cars. My CNN network will have two input image -one ... PyTorch Tutorial: Building a Simple Neural Network From Scratch ... | Our PyTorch Tutorial covers the basics of PyTorch, while also providing you with a detailed background on how neural networks work. Read the full article here.

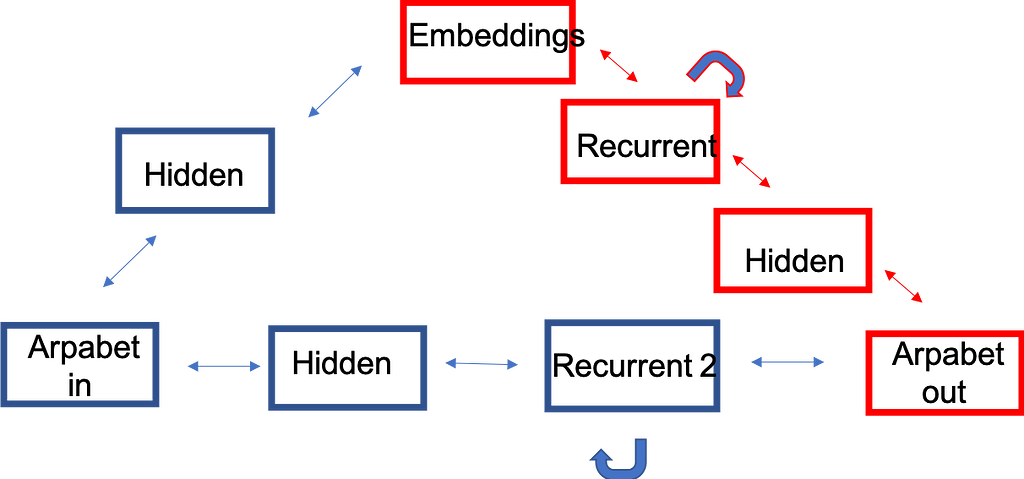

PyTorch Tutorial: Building a Simple Neural Network From Scratch ... | Our PyTorch Tutorial covers the basics of PyTorch, while also providing you with a detailed background on how neural networks work. Read the full article here. Bidirectional NN with two inputs and one output - PyTorch Forums | Hello! *Disclaimer, my first post to stackoverflow is coming to mind where I got flamed for poor formatting, I’m sorry ahead of time if I broke a formatting rule. I am creating a neural network model to mimic three tasks used for clinical assessments in stroke survivors. The data structures of the model are word embeddings from gloves’ wiki-gigaworld and the output are a series of numbers reflecting the letters needed to spell the word that coincides with each vector. The three tasks I am mo...

Bidirectional NN with two inputs and one output - PyTorch Forums | Hello! *Disclaimer, my first post to stackoverflow is coming to mind where I got flamed for poor formatting, I’m sorry ahead of time if I broke a formatting rule. I am creating a neural network model to mimic three tasks used for clinical assessments in stroke survivors. The data structures of the model are word embeddings from gloves’ wiki-gigaworld and the output are a series of numbers reflecting the letters needed to spell the word that coincides with each vector. The three tasks I am mo...