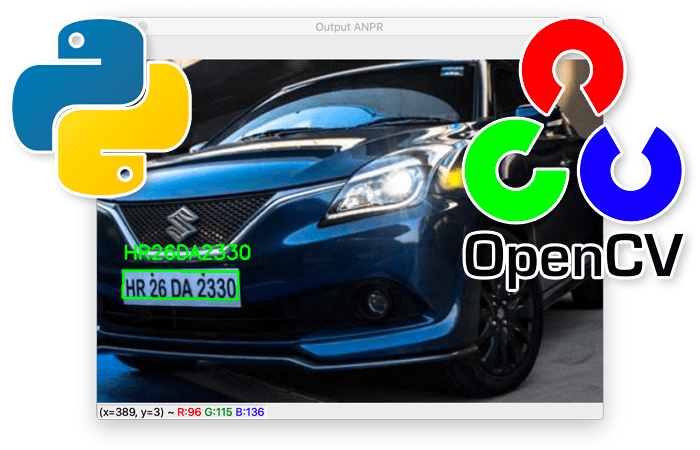

License Plate Recognition from Image: ANPR Guide

Learn how ANPR technology uses image recognition algorithms to accurately identify and read vehicle license plates from images.


Automatic License Plate Recognition (ALPR), also known as Automatic Number Plate Recognition (ANPR), is a technology that uses image processing and machine learning to automatically identify vehicles by their license plates. This article provides a step-by-step guide to building a basic ALPR system using Python, OpenCV, and Tesseract OCR. We break the process into six stages and explain the concepts and code involved in each.

Image Acquisition: Capture an image of the vehicle with a camera, or load a saved image from a file:

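```python
import cv2

image = cv2.imread('car.jpg')  # returns None if the file cannot be read
```

Preprocessing: Enhance the image to improve accuracy. This might involve resizing, grayscale conversion, and noise reduction: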

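```python
# Resizing (640x480 is an example size; adjust as needed)
resized_image = cv2.resize(image, (640, 480))

# Grayscale conversion
gray = cv2.cvtColor(resized_image, cv2.COLOR_BGR2GRAY)

# Noise reduction
denoised_image = cv2.fastNlMeansDenoising(gray, None, h=10, templateWindowSize=7, searchWindowSize=21)
```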

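Plate Localization: Identify the region of the image containing the license plate. Common techniques include edge detection followed by contour analysis, in which candidate contours are filtered by properties such as area and aspect ratio: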

```python
edges = cv2.Canny(denoised_image, 100, 200)

contours, hierarchy = cv2.findContours(edges, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

for contour in contours:
    # Filter contours based on properties like area, aspect ratio, etc.
    ...
```

Character Segmentation: Isolate individual characters within the detected plate region.

```python
# In practice, threshold the cropped plate region rather than the whole image
thresh = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)[1]
```

Optical Character Recognition (OCR): Convert segmented characters into machine-readable text.

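```python
import pytesseract

# cropped_character: one segmented character image; --psm 10 means "single character"
text = pytesseract.image_to_string(cropped_character, config='--psm 10')
```

Post-processing: Clean up the extracted text.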
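Post-processing depends on the plate format you expect. The following is a minimal sketch using Python's `re` module. It normalizes the raw OCR output, then checks it against a pattern. It assumes a hypothetical format of three letters followed by four digits; replace `PLATE_PATTERN` with the rules for your region:

```python
import re

# Hypothetical format for illustration: three letters then four digits, e.g. "ABC1234"
PLATE_PATTERN = re.compile(r"[A-Z]{3}[0-9]{4}")


def normalize_plate(raw_text: str) -> str:
    """Uppercase and drop anything that is not A-Z or 0-9 (spaces, dashes, newlines)."""
    return re.sub(r"[^A-Z0-9]", "", raw_text.upper())


def is_valid_plate(text: str) -> bool:
    """True if the whole string matches the expected plate format."""
    return PLATE_PATTERN.fullmatch(text) is not None


if __name__ == "__main__":
    cleaned = normalize_plate("abc-1234\n")
    print(cleaned, is_valid_plate(cleaned))  # ABC1234 True
```

Using `fullmatch` rather than `search` rejects readings with extra characters, such as a stray digit picked up from the plate frame.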

The following Python script combines these stages using OpenCV and Tesseract OCR. It preprocesses the image, localizes the plate region using contours, segments the characters, runs OCR on each character, and marks where post-processing (validation and correction) should go.

```python
import cv2
import pytesseract

# 1. Image Acquisition
image = cv2.imread('car.jpg')
if image is None:
    raise FileNotFoundError("Could not read 'car.jpg'")

# 2. Preprocessing
resized_image = cv2.resize(image, (640, 480))  # Adjust width, height as needed
gray = cv2.cvtColor(resized_image, cv2.COLOR_BGR2GRAY)
denoised_image = cv2.fastNlMeansDenoising(gray, None, h=10, templateWindowSize=7, searchWindowSize=21)

# 3. Plate Localization
edges = cv2.Canny(denoised_image, 100, 200)
contours, hierarchy = cv2.findContours(edges, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

plate_contour = None
for contour in contours:
    # Filter contours based on area, aspect ratio, etc.
    area = cv2.contourArea(contour)
    x, y, w, h = cv2.boundingRect(contour)
    aspect_ratio = float(w) / h
    if 100 < area < 1000 and 2 < aspect_ratio < 5:  # Adjust these values based on your images
        plate_contour = contour
        break

if plate_contour is not None:
    # Extract plate region
    x, y, w, h = cv2.boundingRect(plate_contour)
    plate_image = gray[y:y+h, x:x+w]

    # 4. Character Segmentation
    # Inverted Otsu threshold: dark characters on a light plate become white
    # foreground, which is what findContours looks for
    thresh = cv2.threshold(plate_image, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]
    char_contours, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    # findContours does not return contours in reading order, so sort left to right
    char_contours = sorted(char_contours, key=lambda c: cv2.boundingRect(c)[0])

    characters = []
    for char_contour in char_contours:
        # Filter contours based on size, aspect ratio, etc.
        # ... (similar to plate contour filtering)

        # Extract character region and invert back to dark text on a light background
        x, y, w, h = cv2.boundingRect(char_contour)
        cropped_char = cv2.bitwise_not(thresh[y:y+h, x:x+w])
        # A white margin around the glyph usually helps Tesseract
        cropped_char = cv2.copyMakeBorder(cropped_char, 10, 10, 10, 10, cv2.BORDER_CONSTANT, value=255)
        characters.append(cropped_char)

    # 5. Optical Character Recognition (OCR)
    license_plate_text = ""
    for char_img in characters:
        char = pytesseract.image_to_string(char_img, config='--psm 10')  # Try different psm values
        license_plate_text += char.strip()  # Tesseract appends whitespace/newlines

    # 6. Post-processing
    # ... (Implement validation and correction logic based on your license plate format)

    print("License Plate Text:", license_plate_text)
else:
    print("No license plate found")

# Display the results (optional; requires a GUI-enabled OpenCV build)
cv2.imshow("Original Image", image)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

Explanation:

- The code uses `pytesseract.image_to_string` to perform OCR on each segmented character image.
- The `config='--psm 10'` setting tells Tesseract to treat the input as a single character.

Remember:

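- Install the `opencv-python` and `pytesseract` packages.
- pytesseract is only a wrapper around the Tesseract OCR engine. Tesseract itself must also be installed and on your `PATH`, or you can set `pytesseract.pytesseract.tesseract_cmd` to its location.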


| Step | Description | Techniques | Code Snippets |
|---|---|---|---|
| 1. Image Acquisition | Capture the vehicle image. | Using a camera or loading from a file. | `image = cv2.imread('car.jpg')` |
| 2. Preprocessing | Improve image quality for better recognition. | Resizing; grayscale conversion; noise reduction. | `cv2.resize(image, (width, height))`, `cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)`, `cv2.fastNlMeansDenoising()` |
| 3. Plate Localization | Find the license plate region within the image. | Edge detection; contour analysis, filtering on contour properties. | `cv2.Canny(image, 100, 200)`, `cv2.findContours()` |
| 4. Character Segmentation | Separate individual characters on the license plate. | Thresholding; contour analysis (as in plate localization, but applied to the plate region). | `cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)[1]` |
| 5. Optical Character Recognition (OCR) | Convert character images into text. | OCR libraries such as Tesseract. | `text = pytesseract.image_to_string(cropped_character)` |
| 6. Post-processing | Clean and validate the extracted text. | Validation: check against expected license plate patterns. Correction: fix minor errors based on context. | - |

Building a robust ALPR system involves a series of image processing steps, each with its own challenges. This article outlines a basic framework using OpenCV and Tesseract OCR, but real-world applications usually demand more sophisticated techniques. Tuning parameters, improving character segmentation, and implementing stronger OCR error correction are key to higher accuracy. Deep learning models for end-to-end ALPR and cloud-based OCR APIs can further improve performance and scalability. Finally, as you go further with ALPR, consider the legal and ethical implications of its use, such as privacy, and make sure any real-world deployment is responsible.
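One simple form of OCR error correction uses the expected plate layout. If a position must hold a letter, a digit read there is probably a look-alike letter, and vice versa. The following is a minimal sketch that assumes a hypothetical layout string, where `L` marks a letter position and `D` a digit position. The confusion table lists common look-alike pairs and is illustrative, not exhaustive:

```python
# Illustrative look-alike pairs; extend for the fonts used on your plates
DIGIT_TO_LETTER = {"0": "O", "1": "I", "2": "Z", "5": "S", "8": "B"}
LETTER_TO_DIGIT = {letter: digit for digit, letter in DIGIT_TO_LETTER.items()}


def correct_plate(text: str, layout: str) -> str:
    """Swap look-alike characters so each position matches the layout.

    layout: hypothetical format string, 'L' = letter, 'D' = digit, e.g. "LLLDDDD".
    Text whose length does not match the layout is returned unchanged.
    """
    if len(text) != len(layout):
        return text
    corrected = []
    for char, kind in zip(text, layout):
        if kind == "L":
            corrected.append(DIGIT_TO_LETTER.get(char, char))
        elif kind == "D":
            corrected.append(LETTER_TO_DIGIT.get(char, char))
        else:
            corrected.append(char)
    return "".join(corrected)


print(correct_plate("A8C12O4", "LLLDDDD"))  # ABC1204
```

The substitution only applies when the length matches the layout, so it pairs naturally with pattern validation: correct first, then validate, and flag anything that still fails for review.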
