RuntimeError: CUDA Deserialization Error - Fix & Solutions

Learn how to resolve the "RuntimeError: Attempting to deserialize object on a CUDA device" error in your PyTorch code and get your deep learning projects running smoothly.

Encountering the "RuntimeError: Attempting to deserialize object on a CUDA device but torch.cuda.is_available() is False" error in PyTorch? This usually happens when you try to load a model trained on a GPU onto a machine that only has a CPU. Don't worry, it's a common issue with a straightforward solution.
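A quick way to confirm that this is your situation is to ask PyTorch whether it can see a GPU at all. The snippet below is a minimal sketch; the helper name cuda_status is hypothetical and not part of PyTorch, while torch.cuda.is_available() and torch.cuda.device_count() are the standard calls.

```python
import torch


def cuda_status() -> str:
    """Summarize whether this machine can place tensors on a CUDA device."""
    if torch.cuda.is_available():
        return f"CUDA available: {torch.cuda.device_count()} device(s) visible"
    # On a CPU-only machine, checkpoints saved from a GPU need map_location.
    return "CUDA not available: load GPU-saved checkpoints with map_location='cpu'"


print(cuda_status())
```

If the second message is printed, a plain torch.load() of a checkpoint saved from a GPU is expected to fail with the error discussed here.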

The error means the saved file holds tensors that were on a CUDA device when they were saved, and torch.load() tries to restore them to that device by default. Here's how to fix it:

1. Identify the Loading Line:

Look for where you load your model, usually with torch.load():

model = torch.load('my_model.pth')

2. Specify CPU Loading:

Add map_location='cpu' inside torch.load():

model = torch.load('my_model.pth', map_location='cpu')

Explanation:

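Saving a model (torch.save()) stores its structure and parameters, along with the device each tensor was on.

Loading it (torch.load()) reconstructs the model and, by default, tries to put each tensor back on that device.

Additional Notes: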

Colab/Remote Training: If you trained on a platform with GPUs and are deploying on a CPU, this error is common.

torch.device('cpu'): You can use this for more explicit control:

device = torch.device('cpu')

model = torch.load('my_model.pth', map_location=device)

The following Python code loads a PyTorch model from a file named 'my_model.pth' and ensures it is placed on the CPU. It shows three things: catching the RuntimeError that a plain torch.load() raises when the model was saved on a GPU, loading with map_location='cpu', and loading with torch.device('cpu'). After loading, the model is set to evaluation mode with model.eval(), which prepares it for inference on the CPU.

import torch

# 1. Loading a model (this might cause an error if the model was saved on a GPU)
try:
    model = torch.load('my_model.pth')
except RuntimeError as e:
    print(f"Error loading model: {e}")

# 2. Loading a model with CPU mapping
model = torch.load('my_model.pth', map_location='cpu')

# 3. Loading a model with explicit device specification
device = torch.device('cpu')
model = torch.load('my_model.pth', map_location=device)

# Now you can use the model on CPU
model.eval()  # Set the model to evaluation mode

# ... Perform inference or other operations with the model on CPU ...

Explanation:

try-except block: Demonstrates how to catch the potential RuntimeError that occurs when trying to load a GPU-trained model on a CPU.

map_location='cpu': This argument within torch.load() tells PyTorch to load the model's tensors onto the CPU, even if they were saved from a GPU.

torch.device('cpu'): This provides a more explicit way to specify the target device. It's useful for managing devices in larger projects.

model.eval(): After loading, it's good practice to set the model to evaluation mode if you're using it for inference.

Important:

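Replace 'my_model.pth' with the actual path to your saved model file.

Make sure PyTorch is installed (pip install torch).

If you use pre-trained models from libraries such as torchvision, they often come with options to load onto the CPU directly. Check the documentation.

Alternatives to map_location: While map_location is the most common solution, you can also move the model to the CPU after loading it. This only helps where loading itself succeeds, that is, on a machine where CUDA is available; on a CPU-only machine the plain torch.load() call raises the error before model.cpu() is reached: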

model = torch.load('my_model.pth')
model.cpu()

This article addresses the error encountered when loading a GPU-trained PyTorch model onto a machine without a GPU.

Problem: A saved PyTorch model records the device (GPU or CPU) each of its tensors was on when it was saved. Loading a model saved from a GPU on a CPU-only machine fails because torch.load() tries, by default, to restore the tensors to a CUDA device that is not there.
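Because the device is recorded with the saved tensors, torch.load() lets you remap it in more than one way. The sketch below shows the forms of map_location described in the PyTorch documentation (a string, a torch.device, a dict, and a callable). The roundtrip helper and the in-memory buffer are illustrative assumptions; the tensor here is saved from the CPU, so the dict form finds nothing to remap, but the same arguments apply to a file saved from a GPU.

```python
import io

import torch


def roundtrip(tensor, map_location):
    """Save a tensor to an in-memory buffer and load it back with map_location."""
    buffer = io.BytesIO()  # stands in for a file such as 'my_model.pth'
    torch.save(tensor, buffer)
    buffer.seek(0)
    return torch.load(buffer, map_location=map_location)


original = torch.arange(3.0)

options = {
    "string": "cpu",
    "torch.device": torch.device("cpu"),
    "dict": {"cuda:0": "cpu"},  # remap tensors tagged 'cuda:0' to the CPU
    "callable": lambda storage, location: storage,  # keep storages on the CPU
}

loaded = {name: roundtrip(original, option) for name, option in options.items()}
for name, tensor in loaded.items():
    print(f"{name}: {tensor.device}")
```

For a single CPU-only target, the string form is the shortest; the dict form is mainly useful when a file mixes several GPU indices.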

Solution: Specify CPU loading during deserialization using map_location='cpu' within the torch.load() function:

model = torch.load('my_model.pth', map_location='cpu')

Explanation:

torch.save() saves the model's structure and parameters.

torch.load() reconstructs the model.

map_location='cpu': Forces the model to be loaded onto the CPU, even if it was trained on a GPU.

Key Points:

For explicit device control, you can pass torch.device('cpu') as the map_location argument.

Understanding how to manage GPU-trained models for CPU environments is crucial for deploying PyTorch models effectively. By using the map_location='cpu' argument or similar techniques, you can ensure your models load correctly and are ready for inference or other tasks on CPU-only machines. This knowledge helps bridge the gap between training powerful models on GPUs and making them accessible for wider use.
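To make the same loading code work on both GPU and CPU-only machines, one option is to choose the device at run time and pass it as map_location. The following is a minimal sketch under stated assumptions: the helpers pick_device and load_weights are hypothetical names, the nn.Linear layer stands in for a real model, and the checkpoint is a state_dict (parameters only) rather than a fully pickled model object.

```python
import os
import tempfile

import torch
from torch import nn


def pick_device() -> torch.device:
    """Use the GPU when PyTorch can see one, otherwise fall back to the CPU."""
    return torch.device("cuda" if torch.cuda.is_available() else "cpu")


def load_weights(model: nn.Module, path: str) -> nn.Module:
    """Load a state_dict onto whichever device is available on this machine."""
    device = pick_device()
    state_dict = torch.load(path, map_location=device)
    model.load_state_dict(state_dict)
    model.to(device)
    return model.eval()  # eval() returns the module itself


# Demonstration with a throwaway checkpoint in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "my_model.pth")
    torch.save(nn.Linear(4, 2).state_dict(), path)
    model = load_weights(nn.Linear(4, 2), path)

print(next(model.parameters()).device)
```

Note that recent PyTorch releases (2.6 and later) default torch.load() to weights_only=True, which suits state_dict files like this one. Loading a fully pickled model object may additionally need weights_only=False, which should only be used with files you trust.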

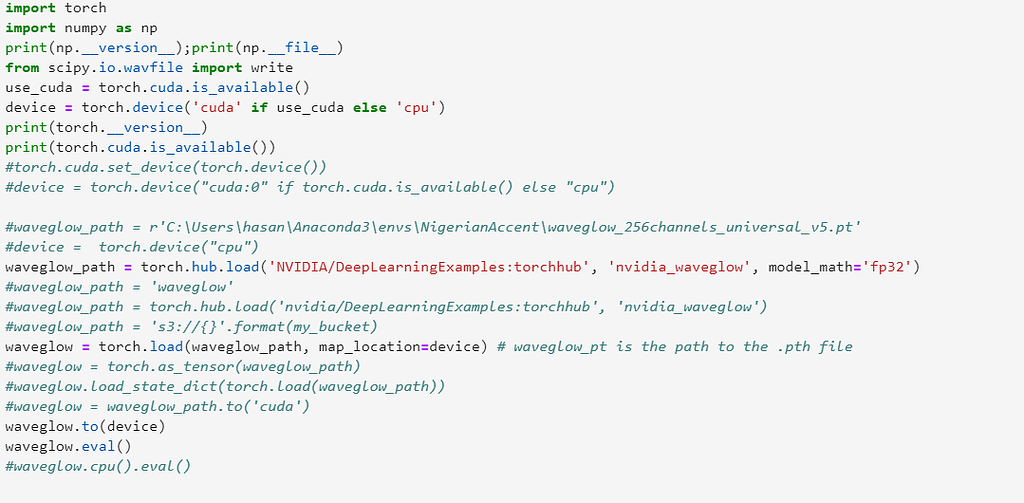
