Feature Detection vs. Descriptor Extraction: Key Differences

This article explores the key differences between feature detection and descriptor extraction in computer vision, highlighting their unique roles and applications.

In computer vision, understanding images goes beyond simply "seeing" them. We need to identify and interpret significant points within these images. This is where feature detection and description come into play.

Feature detection identifies points of interest in an image, such as corners or edges.

import cv2

img = cv2.imread('image.jpg', 0)

fast = cv2.FastFeatureDetector_create()

keypoints = fast.detect(img, None)

Feature description computes a numerical vector (a descriptor) for each detected keypoint, so the same point can be recognized and matched in other images.

# BRIEF lives in the contrib modules (opencv-contrib-python)
brief = cv2.xfeatures2d.BriefDescriptorExtractor_create()

keypoints, descriptors = brief.compute(img, keypoints)

Think of it like finding a landmark (detection) and describing its unique characteristics (description).

Algorithms like SIFT, SURF, and ORB combine both detection and description.

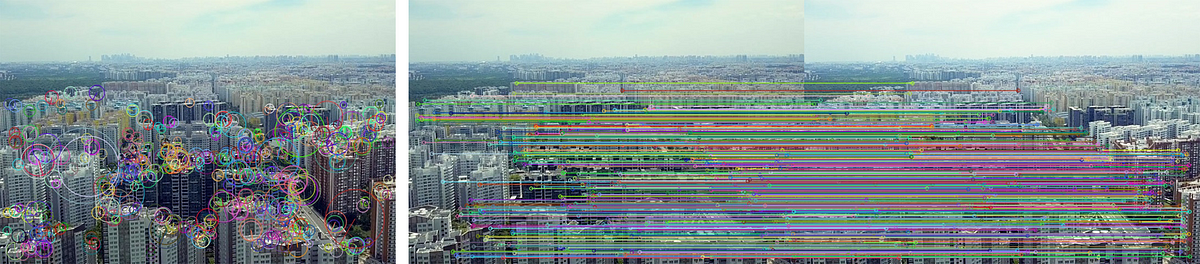

These features are used in various applications like object recognition and image stitching.
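Both applications rest on matching descriptors between images. The sketch below shows one way this could work for binary descriptors such as those produced by BRIEF or ORB: compare them with the Hamming distance (the number of differing bits) and keep the nearest neighbor. The function names `hamming` and `match`, the `max_distance` cutoff, and the toy two-byte descriptors are assumptions made for illustration; in OpenCV this role is played by `cv2.BFMatcher` with `cv2.NORM_HAMMING`.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of bit positions in which two equal-length descriptors differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def match(query, train, max_distance=64):
    """Brute-force nearest-neighbor matching.

    For every query descriptor, find the closest train descriptor and keep
    the pair only if the distance is at most max_distance (hypothetical cutoff).
    Returns a list of (query_index, train_index, distance) tuples.
    """
    if not train:
        return []
    matches = []
    for qi, q in enumerate(query):
        distances = [hamming(q, t) for t in train]
        ti = min(range(len(train)), key=distances.__getitem__)
        if distances[ti] <= max_distance:
            matches.append((qi, ti, distances[ti]))
    return matches


# Toy descriptors (2 bytes each) standing in for real 32-byte ones
query = [b"\x00\x00", b"\xff\xff"]
train = [b"\xff\xfe", b"\x01\x00", b"\x0f\x0f"]
print(match(query, train, max_distance=4))
```

A distance cutoff like this is only one possible filter; it is used here because it keeps the sketch short.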

This Python code demonstrates feature detection and description in images using OpenCV. It first detects keypoints in a grayscale image using the FAST algorithm. Then, it describes these keypoints using the BRIEF descriptor, generating numerical vectors representing each keypoint's surrounding features. The code also shows how to perform combined detection and description using the ORB algorithm. Visualizations of keypoints are displayed, and the shape of the descriptor output is printed. This example provides a foundation for exploring various feature detection and description algorithms and their applications in computer vision tasks.

This code demonstrates feature detection and description using OpenCV in Python:

import cv2

# Load the image in grayscale

img = cv2.imread('image.jpg', 0)

# --- Feature Detection ---

# Initialize FAST feature detector

fast = cv2.FastFeatureDetector_create()

# Detect keypoints

keypoints = fast.detect(img, None)

# Draw keypoints on the image (optional)

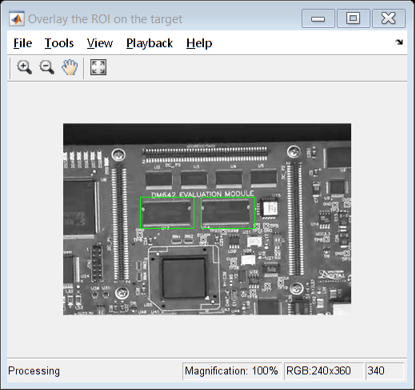

img_with_keypoints = cv2.drawKeypoints(img, keypoints, None, color=(0, 255, 0))

cv2.imshow("Keypoints", img_with_keypoints)

cv2.waitKey(0)

# --- Feature Description ---

# Initialize BRIEF descriptor

brief = cv2.xfeatures2d.BriefDescriptorExtractor_create()

# Compute descriptors for the detected keypoints

keypoints, descriptors = brief.compute(img, keypoints)

# Print the shape of the descriptors (number of keypoints x descriptor size)

print(descriptors.shape)

# --- Using ORB (Combined Detection and Description) ---

# Initialize ORB detector and descriptor

orb = cv2.ORB_create()

# Detect keypoints and compute descriptors

keypoints, descriptors = orb.detectAndCompute(img, None)

# Draw keypoints on the image (optional)

img_with_keypoints = cv2.drawKeypoints(img, keypoints, None, color=(0, 255, 0))

cv2.imshow("Keypoints with ORB", img_with_keypoints)

cv2.waitKey(0)

# Print the shape of the descriptors (number of keypoints x descriptor size)

print(descriptors.shape)

cv2.destroyAllWindows()

Explanation:

Feature Detection:

The FAST detector (cv2.FastFeatureDetector_create()) is used to detect keypoints in the image. fast.detect(img, None) returns the detected keypoints, which can be drawn on the image with cv2.drawKeypoints().

Feature Description:

The BRIEF extractor (cv2.xfeatures2d.BriefDescriptorExtractor_create()) is used to describe the detected keypoints. brief.compute(img, keypoints) takes the image and keypoints as input and returns the keypoints together with their descriptors.

Combined Detection and Description (ORB):

ORB (cv2.ORB_create()) combines both detection and description in a single step. orb.detectAndCompute(img, None) returns both the keypoints and their descriptors.

This code provides a basic example of feature detection and description using OpenCV. You can experiment with different algorithms like SIFT, SURF, and ORB, and explore their applications in object recognition, image stitching, and other computer vision tasks.

Here are some additional notes expanding on the concepts of feature detection and description in computer vision:

Feature Detection
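As a hedged illustration of what a detector does internally, the sketch below implements a simplified version of the segment test that FAST is based on: a pixel counts as a corner when at least `n` contiguous pixels on a 16-pixel circle around it are all brighter or all darker than the center by more than a threshold. The function names, the plain list-of-lists image, and the defaults `threshold=20` and `n=9` are assumptions made for this example. OpenCV's implementation is heavily optimized and adds steps such as non-maximum suppression that are omitted here.

```python
# Offsets (dx, dy) of the 16-pixel circle of radius 3, listed in order around the circle
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def longest_run(flags):
    """Length of the longest run of True values, treating the list as circular."""
    if all(flags):
        return len(flags)
    best = run = 0
    for flag in flags + flags:  # doubling the list handles runs that wrap around
        run = run + 1 if flag else 0
        best = max(best, run)
    return best


def is_fast_corner(img, x, y, threshold=20, n=9):
    """Simplified segment test; (x, y) must lie at least 3 pixels from the border."""
    center = img[y][x]
    ring = [img[y + dy][x + dx] for dx, dy in CIRCLE]
    brighter = [p > center + threshold for p in ring]
    darker = [p < center - threshold for p in ring]
    return max(longest_run(brighter), longest_run(darker)) >= n


# Synthetic 20x20 image: dark background with a bright 8x8 square
img = [[0] * 20 for _ in range(20)]
for row in range(8, 16):
    for col in range(8, 16):
        img[row][col] = 200

print(is_fast_corner(img, 8, 8))   # corner of the square -> True
print(is_fast_corner(img, 8, 11))  # point on a straight edge -> False
```

The straight-edge case shows why the test asks for a long contiguous arc: along an edge only 7 of the 16 circle pixels differ from the center, which is below the required 9.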

Feature Description
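To show how a keypoint's neighborhood can become a numerical vector, here is a minimal sketch in the spirit of BRIEF: a fixed set of random pixel pairs is compared around the keypoint, and each comparison contributes one bit. The names `make_pattern` and `brief_like_descriptor`, the patch radius, and the seed are hypothetical choices for this example. Unlike the real algorithm, the sketch does not smooth the image first, so treat it as an illustration of the idea and not as OpenCV's implementation.

```python
import random


def make_pattern(n_bits=256, patch_radius=15, seed=0):
    """Fixed list of random point pairs; the same pattern is reused for every keypoint."""
    if n_bits % 8 != 0:
        raise ValueError("n_bits must be a multiple of 8")
    rng = random.Random(seed)

    def point():
        return (rng.randint(-patch_radius, patch_radius),
                rng.randint(-patch_radius, patch_radius))

    return [(point(), point()) for _ in range(n_bits)]


def brief_like_descriptor(img, x, y, pattern):
    """Binary descriptor for the keypoint at (x, y), packed into bytes.

    Each bit records whether the first pixel of a pair is darker than the second.
    The patch around (x, y) must lie fully inside the image.
    """
    bits = 0
    for (ax, ay), (bx, by) in pattern:
        darker = img[y + ay][x + ax] < img[y + by][x + bx]
        bits = (bits << 1) | int(darker)
    return bits.to_bytes(len(pattern) // 8, "big")


# Synthetic 40x40 test image with some intensity variation
img = [[(7 * col + 13 * row) % 256 for col in range(40)] for row in range(40)]
pattern = make_pattern()
descriptor = brief_like_descriptor(img, 20, 20, pattern)
print(len(descriptor))  # 256 bits packed into 32 bytes
```

Because only the ordering of pixel pairs matters, adding a constant brightness offset to the whole image leaves the descriptor unchanged, which is one reason comparison-based descriptors are robust to simple lighting changes.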

Combined Detection and Description

Key Considerations

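| Task | Description |
| --- | --- |
| Feature detection | Identifies points of interest in an image, such as corners or edges (for example, FAST). |
| Feature description | Creates a numerical vector representing each detected keypoint (for example, BRIEF). |
| Combined detection and description | Performs both steps with a single algorithm (for example, SIFT, SURF, ORB). |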

Feature detection and description are essential steps in many computer vision applications. By identifying important points and creating unique representations of them, we can enable computers to "understand" images in a way that goes beyond simply displaying them. Algorithms like FAST for detection, BRIEF for description, and ORB for combined detection and description, provide powerful tools for tasks ranging from object recognition to image stitching. As you delve deeper into computer vision, understanding these fundamental concepts will be crucial for tackling more complex challenges.
