Disparity Map Definition: Understanding Depth Perception

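A disparity map, a key concept in computer vision, is an image that encodes depth: each pixel stores the disparity, the horizontal shift in pixel position of the same scene point between the left and right images of a stereo pair. Because disparity shrinks as distance grows, the map indirectly shows how far each object is from the camera.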

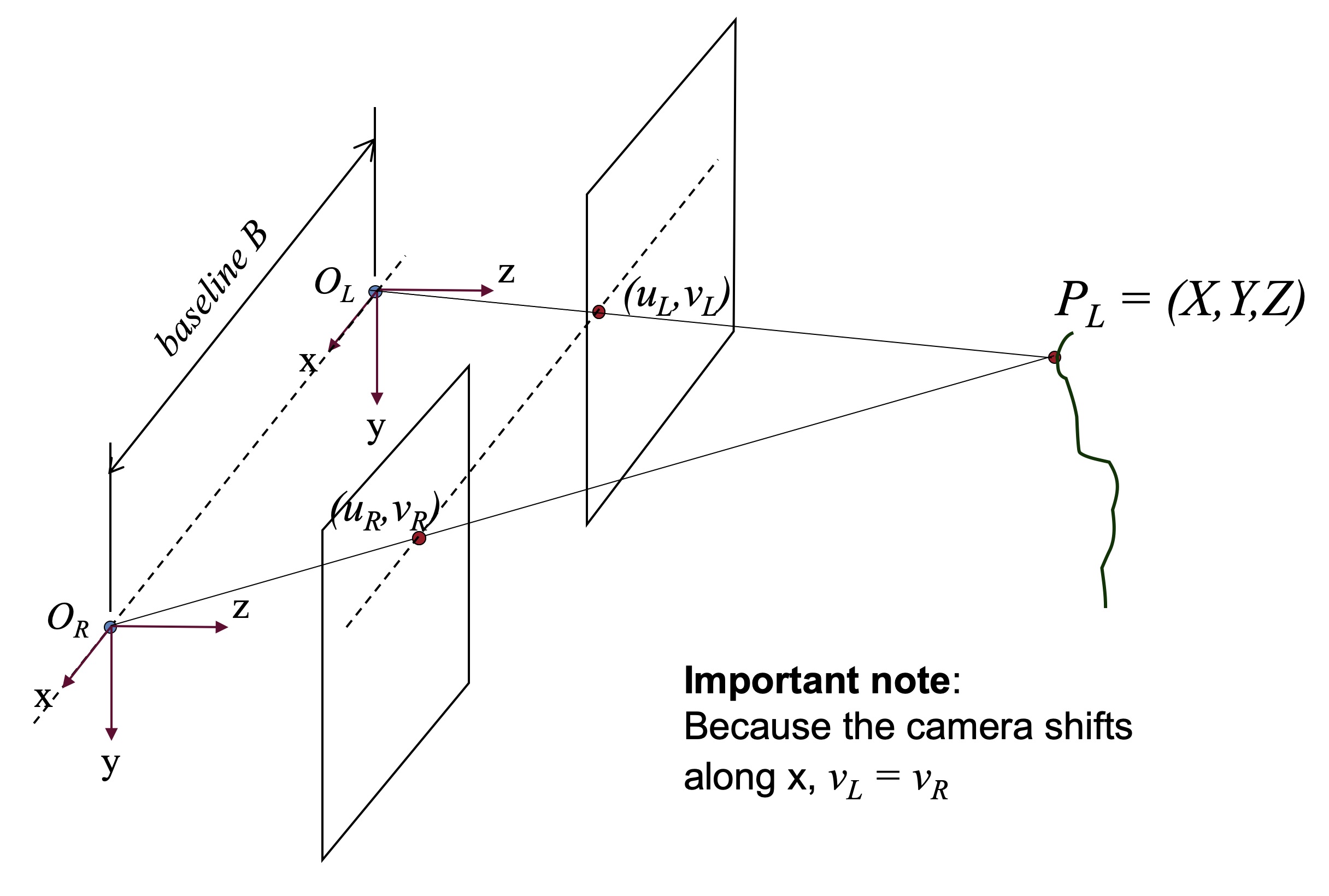

A disparity map is like a magic lens that reveals depth in a pair of images. Imagine you have two eyes, each capturing a slightly different view of the world. Your brain effortlessly combines these images to perceive depth. A disparity map does something similar.

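```python
import cv2

# StereoBM expects 8-bit single-channel images, so load both views as grayscale
left_image = cv2.imread('left.png', cv2.IMREAD_GRAYSCALE)
right_image = cv2.imread('right.png', cv2.IMREAD_GRAYSCALE)
```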

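It starts with two images of the same scene taken from slightly different, horizontally offset viewpoints, like those from a stereo camera. The pair should be rectified so that corresponding points lie on the same image row. The map itself is a single-channel image, usually displayed in grayscale, where each pixel value represents the horizontal difference in position (in pixels) of a corresponding point in the two images.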

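```python
# numDisparities must be a positive multiple of 16; blockSize must be odd (5 to 255)
stereo = cv2.StereoBM_create(numDisparities=16, blockSize=15)

# Result is int16 fixed-point: true disparity = value / 16
disparity_map = stereo.compute(left_image, right_image)
```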
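StereoBM is a block-matching method: for each pixel in the left image it compares a small window against windows shifted along the same row of the right image and keeps the shift with the lowest matching cost. The NumPy sketch below illustrates that idea for a single pixel, using the sum of absolute differences (SAD) as the cost, on a synthetic pair with a known shift of 7 pixels. The function `block_match_disparity` is hypothetical and deliberately simplified; OpenCV's implementation adds prefiltering, validity checks, and subpixel refinement.

```python
import numpy as np


def block_match_disparity(left, right, row, col, block_size=5, max_disparity=16):
    """Hypothetical helper: return the disparity at (row, col) of `left` whose
    window has the lowest SAD against `right` along the same row."""
    half = block_size // 2
    patch = left[row - half:row + half + 1, col - half:col + half + 1].astype(np.int32)
    best_d, best_cost = 0, None
    for d in range(max_disparity):
        c = col - d  # in a rectified pair, the match sits d pixels to the left in the right image
        if c - half < 0:
            break  # candidate window would fall off the image
        candidate = right[row - half:row + half + 1, c - half:c + half + 1].astype(np.int32)
        cost = np.abs(patch - candidate).sum()  # sum of absolute differences
        if best_cost is None or cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


# Synthetic rectified pair: left pixel x shows the same point as right pixel x - 7
rng = np.random.default_rng(0)
right = rng.integers(0, 256, size=(40, 60), dtype=np.uint8)
true_disparity = 7
left = np.zeros_like(right)
left[:, true_disparity:] = right[:, :-true_disparity]

print(block_match_disparity(left, right, row=20, col=30))  # 7
```

Running the same search at every pixel, with extra checks to reject ambiguous matches, is essentially what produces a full disparity map.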

Brighter pixels in the disparity map indicate objects closer to the camera, while darker pixels represent objects farther away. This is because closer objects have a larger difference in their positions between the two images.
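For a rectified stereo pair this inverse relationship has a standard closed form. With focal length $f$ (in pixels), baseline $B$ (the distance between the two camera centers), and disparity $d$ (in pixels), the depth $Z$ of a point is given below; the numbers in the worked example are illustrative, not taken from any particular camera.

```latex
Z = \frac{f\,B}{d}, \qquad d = x_L - x_R

\text{e.g. } f = 700\ \text{px},\ B = 0.1\ \text{m}: \quad
d = 35\ \text{px} \Rightarrow Z = \frac{700 \times 0.1}{35} = 2\ \text{m}, \qquad
d = 70\ \text{px} \Rightarrow Z = \frac{700 \times 0.1}{70} = 1\ \text{m}
```

Doubling the disparity halves the depth, which is why nearer objects appear brighter in the map.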

```python
import matplotlib.pyplot as plt

# Show the disparity map with a grayscale colormap
plt.imshow(disparity_map, 'gray')
plt.show()
```
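One caveat when reading the display: the raw output of `stereo.compute()` is a 16-bit fixed-point array in which pixels that could not be matched typically receive a negative value (with the default minimum disparity of 0). With matplotlib's automatic scaling those pixels also appear dark, so they can be mistaken for distant surfaces. The NumPy sketch below, with a hypothetical `disparity_for_display` helper, assumes non-positive values are invalid, rescales only valid disparities to 1..255, and keeps invalid pixels at 0 (black).

```python
import numpy as np


def disparity_for_display(raw_disparity):
    """Hypothetical helper: map a StereoBM-style int16 disparity map
    (scaled by 16) to uint8, with valid pixels in 1..255 and invalid ones at 0."""
    disparity = raw_disparity.astype(np.float32) / 16.0  # undo fixed-point scaling
    valid = disparity > 0  # assumption: non-positive values mark invalid pixels
    out = np.zeros(disparity.shape, dtype=np.uint8)
    if not valid.any():
        return out
    lo, hi = disparity[valid].min(), disparity[valid].max()
    scale = 254.0 / (hi - lo) if hi > lo else 0.0
    # Farthest valid pixel -> 1, nearest -> 255; invalid pixels stay 0
    out[valid] = (1 + np.round((disparity[valid] - lo) * scale)).astype(np.uint8)
    return out


raw = np.array([[16, 32, 48], [-16, 0, 16]], dtype=np.int16)
print(disparity_for_display(raw))
```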

Think of it like this: hold your finger close to your face and close one eye, then switch eyes. Your finger seems to jump a greater distance than objects in the background. This jump is what the disparity map captures.

Disparity maps are crucial for various applications, including 3D reconstruction, depth estimation, and even robot navigation. By analyzing the differences in pixel positions, we can infer depth information and understand the spatial relationships between objects in a scene.
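Turning disparity into metric depth needs the camera's focal length (in pixels) and baseline, and for a rectified pair depth equals focal length times baseline divided by disparity. The sketch below assumes StereoBM's 16-bit fixed-point output (true disparity = value / 16) and treats non-positive disparities as invalid, returning NaN there. The function name and the calibration values in the example are hypothetical.

```python
import numpy as np


def disparity_to_depth(raw_disparity, focal_length_px, baseline_m):
    """Hypothetical helper: convert a StereoBM-style int16 disparity map
    (4 fractional bits, i.e. scaled by 16) into depth in meters."""
    disparity = raw_disparity.astype(np.float32) / 16.0
    depth = np.full(disparity.shape, np.nan, dtype=np.float32)
    valid = disparity > 0  # assumption: zero or negative means no usable match
    depth[valid] = focal_length_px * baseline_m / disparity[valid]
    return depth


# Synthetic raw values: 35 px, 70 px, and two invalid pixels
raw = np.array([[560, 1120], [-16, 0]], dtype=np.int16)
print(disparity_to_depth(raw, focal_length_px=700.0, baseline_m=0.1))
```

In practice `focal_length_px` and `baseline_m` come from stereo calibration of the actual camera rig.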

The complete example below uses the OpenCV and matplotlib libraries to compute and visualize a disparity map from a pair of rectified stereo images. It loads the left and right images, creates a StereoBM object, computes the disparity map, normalizes it for display, and shows the result with matplotlib.

```python
import cv2
import matplotlib.pyplot as plt

# Load the left and right images of a rectified stereo pair as 8-bit grayscale
left_image = cv2.imread('left.png', cv2.IMREAD_GRAYSCALE)
right_image = cv2.imread('right.png', cv2.IMREAD_GRAYSCALE)
if left_image is None or right_image is None:
    raise FileNotFoundError("Could not read left.png and/or right.png")

# Create a StereoBM (block matching) object.
# numDisparities must be a positive multiple of 16; blockSize must be odd.
stereo = cv2.StereoBM_create(numDisparities=16, blockSize=15)

# Compute the disparity map (int16, fixed-point: true disparity = value / 16)
disparity_map = stereo.compute(left_image, right_image)

# Normalize the disparity map to the 0-255 range (8-bit) for display
disparity_map = cv2.normalize(disparity_map, None, 0, 255, cv2.NORM_MINMAX, cv2.CV_8U)

# Display the disparity map
plt.imshow(disparity_map, 'gray')
plt.title('Disparity Map')
plt.show()
```

Explanation:

1. Import the libraries: `cv2` for OpenCV and `matplotlib.pyplot` for plotting.
2. Load the left and right stereo images with `cv2.imread()`. Make sure to load them as grayscale images using the `cv2.IMREAD_GRAYSCALE` flag.
3. Create a `cv2.StereoBM_create()` object. This object computes the disparity map using the block matching algorithm. You can adjust parameters like `numDisparities` (the number of disparities to consider) and `blockSize` (the size of the blocks compared during matching) for better results depending on your images.
4. Call the `stereo.compute()` method to calculate the disparity map from the left and right images.
5. Normalize the disparity map with `cv2.normalize()` for better visualization.
6. Display the result with `plt.imshow()` from matplotlib.

Key Points:

- The `numDisparities` and `blockSize` parameters of `cv2.StereoBM_create()` can significantly affect the quality of the disparity map. `numDisparities` must be a positive multiple of 16, and `blockSize` must be odd. Experiment with different values to find what works best for your images.
- The range of disparity values the matcher searches is determined by the `numDisparities` parameter during StereoBM object creation. Understanding this range is important for interpreting the disparity map correctly.

This code provides a basic example of disparity map generation. For improved accuracy and robustness in real-world applications, you can explore more advanced stereo matching algorithms and techniques.
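The disparity range can be tied back to the scene: for a rectified pair, disparity d, depth Z, focal length f (pixels), and baseline B satisfy d = f·B/Z, so the closest object you expect sets the largest disparity the matcher must search. The helper below is a hypothetical sketch that assumes a minimum disparity of 0 (so the search covers 0 to numDisparities - 1) and returns the smallest multiple of 16 that covers that maximum. The calibration values in the example are illustrative only.

```python
import math


def choose_num_disparities(focal_length_px, baseline_m, min_depth_m):
    """Hypothetical helper: smallest positive multiple of 16 whose search
    range 0..N-1 covers the largest expected disparity f * B / Z_min."""
    d_max = focal_length_px * baseline_m / min_depth_m
    # Need N - 1 >= d_max, i.e. N >= d_max + 1, rounded up to a multiple of 16
    return max(16, 16 * math.ceil((d_max + 1) / 16))


print(choose_num_disparities(700.0, 0.1, 0.5))  # d_max is about 140 px -> 144
print(choose_num_disparities(700.0, 0.1, 5.0))  # d_max is about 14 px -> 16
```

A larger search range lets the matcher handle closer objects, at the cost of more computation per pixel.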


In essence, disparity maps allow us to extract valuable depth information from images, enabling a deeper understanding of the spatial relationships within a scene.

In conclusion, disparity maps, generated from the subtle differences between two images, provide a powerful tool for extracting depth information. This grayscale representation, where brighter pixels signify proximity and darker ones indicate distance, finds applications in diverse fields like 3D modeling, robotic vision, and depth perception. By analyzing the "jumps" or disparities, we gain a deeper understanding of spatial relationships within a scene, essentially enabling machines to perceive depth much like human vision. As technology advances, the role of disparity maps in bridging the gap between 2D images and 3D understanding continues to expand, opening up new possibilities in various fields.
