SIFT Algorithm for Image Similarity Comparison

Learn how to use the Scale Invariant Feature Transform (SIFT) algorithm to determine the similarity between two images by identifying and comparing keypoints.

Image matching is a fundamental task in computer vision, used in various applications like object recognition, image stitching, and 3D reconstruction. This article demonstrates how to perform image matching using the Scale-Invariant Feature Transform (SIFT) algorithm in Python. We'll cover the step-by-step process of loading images, detecting keypoints, computing descriptors, matching descriptors, filtering matches, and visualizing the results. Additionally, we'll explore how to calculate a similarity score based on the number of good matches.

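```python
import cv2

# 1. Load both images as grayscale
img1 = cv2.imread('image1.jpg', cv2.IMREAD_GRAYSCALE)
img2 = cv2.imread('image2.jpg', cv2.IMREAD_GRAYSCALE)

# 2. Create a SIFT object, then detect keypoints and compute descriptors
sift = cv2.SIFT_create()
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# 3. Match descriptors with a Brute-Force Matcher (two nearest neighbors each)
bf = cv2.BFMatcher()
matches = bf.knnMatch(des1, des2, k=2)

# 4. Apply the ratio test to keep only good matches
good_matches = []
for m, n in matches:
    if m.distance < 0.75 * n.distance:
        good_matches.append([m])

# 5. Draw the good matches
img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, good_matches, None, flags=2)

# 6. Calculate a similarity score
similarity_score = len(good_matches) / len(matches)
```

Explanation: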

The Python code performs image similarity comparison using SIFT feature detection and matching. It loads two grayscale images, detects keypoints and descriptors using SIFT, matches the descriptors using Brute-Force Matcher, filters good matches based on a distance ratio test, draws the matches, calculates a similarity score, displays the matched image, and prints the similarity score.

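```python
import cv2

# Load the images as grayscale; cv2.imread returns None if a file cannot be read
img1 = cv2.imread('image1.jpg', cv2.IMREAD_GRAYSCALE)
img2 = cv2.imread('image2.jpg', cv2.IMREAD_GRAYSCALE)
if img1 is None or img2 is None:
    raise FileNotFoundError("Could not read image1.jpg or image2.jpg")

# Create a SIFT object
sift = cv2.SIFT_create()

# Detect keypoints and compute descriptors
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# Descriptors are None when no keypoints are found; the ratio test below
# also needs at least two candidate descriptors in the second image
if des1 is None or des2 is None or len(des2) < 2:
    raise ValueError("Not enough keypoints to compare the images")

# Create a Brute-Force Matcher object (its default L2 norm suits SIFT descriptors)
bf = cv2.BFMatcher()

# Match descriptors: find the two nearest neighbors for each descriptor of img1
matches = bf.knnMatch(des1, des2, k=2)

# Apply ratio test to filter good matches
good_matches = []
for m, n in matches:
    if m.distance < 0.75 * n.distance:
        good_matches.append([m])

# Draw matches (the flag, numerically 2, hides keypoints that have no match)
img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, good_matches, None,
                          flags=cv2.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS)

# Calculate similarity score (optional)
similarity_score = len(good_matches) / len(matches)

# Display the matched image
cv2.imshow('Matches', img3)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Print similarity score
print("Similarity Score:", similarity_score)
```

Before running the code: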

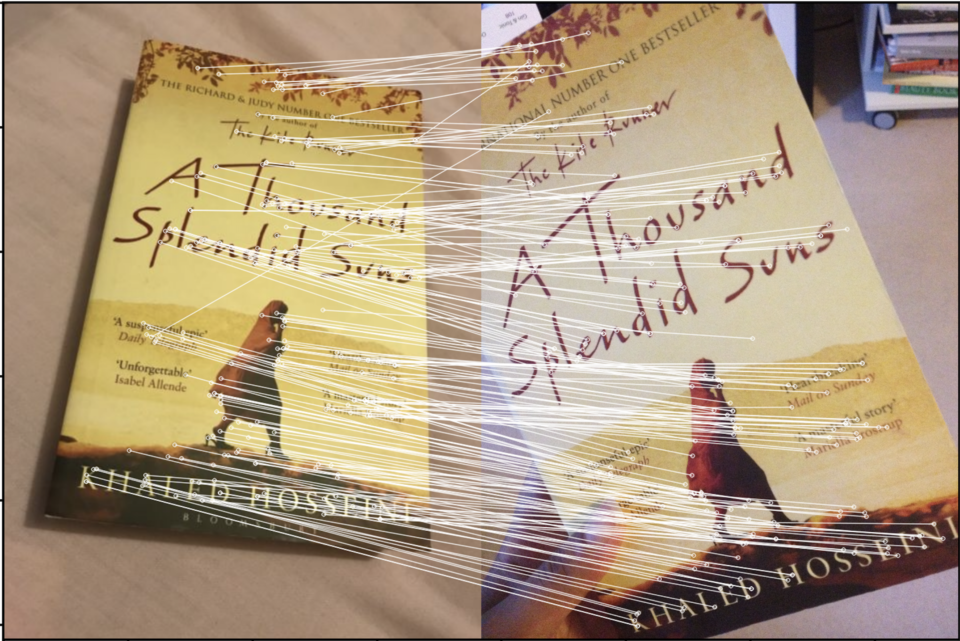

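* Make sure OpenCV is installed (`pip install opencv-python`).

* Replace `image1.jpg` and `image2.jpg` with the actual filenames of your images.

Output: The script displays the matched image in a window and prints the similarity score.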

## Additional Notes

**Image Preprocessing:**

* **Grayscale Conversion:** Converting images to grayscale is common before applying SIFT as it reduces computational complexity without significant loss of information for feature detection.

* **Image Size:** Consider resizing images if they have significantly different resolutions. This can improve matching speed and potentially accuracy.
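One way to act on the image-size advice is to bring both images under a common maximum side length before running SIFT. The helper below is a minimal sketch: the name `scaled_size` and the 1024-pixel cap are illustrative assumptions, not values prescribed by the article.

```python
def scaled_size(width, height, max_side=1024):
    """Return (new_width, new_height) so the longer side is at most max_side.

    The aspect ratio is preserved, and images that are already small enough
    are returned unchanged (no upscaling).
    """
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    # Round to the nearest pixel, but never below 1
    return max(1, round(width * scale)), max(1, round(height * scale))


# Example: a large photo and a small thumbnail
print(scaled_size(4000, 3000))  # (1024, 768)
print(scaled_size(800, 600))    # (800, 600)
```

The resulting size can be passed to `cv2.resize(img, (new_w, new_h), interpolation=cv2.INTER_AREA)`; `INTER_AREA` is generally a reasonable choice when shrinking an image.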

**SIFT Parameters:**

* **`cv2.SIFT_create()`:** You can adjust parameters within this function. For example, `nfeatures` controls the maximum number of keypoints detected.

* **Experimentation:** The choice of SIFT parameters can impact results. Experiment with different settings based on your images and application.

**Matching and Filtering:**

* **k-NN Matching (`k=2`):** We find the two nearest neighbors for each descriptor in `bf.knnMatch`. This helps in the ratio test for more robust filtering.

* **Ratio Test Threshold (0.75):** This value influences the strictness of filtering. A lower threshold is more stringent, potentially reducing false positives but also potentially discarding good matches.
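To see how the threshold changes what survives the ratio test, the sketch below applies it to a few made-up distance pairs. The function name `ratio_test` and the distances are hypothetical; with OpenCV, each pair would come from `(m.distance, n.distance)` in the `knnMatch` result.

```python
def ratio_test(distance_pairs, threshold=0.75):
    """Return indices of pairs whose best distance is clearly below the second best."""
    return [
        i
        for i, (best, second) in enumerate(distance_pairs)
        if best < threshold * second
    ]


# Hypothetical (best, second_best) descriptor distances for four keypoints
pairs = [(50.0, 200.0), (120.0, 170.0), (150.0, 180.0), (90.0, 130.0)]

for threshold in (0.6, 0.75, 0.9):
    print(threshold, ratio_test(pairs, threshold))
```

With these numbers, only the first pair passes at 0.6, three pairs pass at 0.75, and all four pass at 0.9, which matches the description of lower thresholds being stricter.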

**Similarity Score:**

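* **Normalization:** The provided similarity score is the fraction of descriptors in the first image that pass the ratio test, so it depends on which image is used as the query and on how many keypoints each image yields. You might need to normalize it differently depending on your use case.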

* **Alternative Metrics:** Consider other similarity or distance metrics based on the distribution of your good matches and the nature of your comparison.
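One possible alternative normalization, shown here only as a sketch, divides the number of good matches by the smaller of the two keypoint counts so that the score does not depend on which image is treated as the query. The function name `symmetric_score` is hypothetical, and this is one option among many rather than a standard metric.

```python
def symmetric_score(num_good, num_kp1, num_kp2):
    """Good matches divided by the smaller keypoint count, limited to [0, 1]."""
    smaller = min(num_kp1, num_kp2)
    if smaller == 0:
        # No keypoints in at least one image: treat the images as dissimilar
        return 0.0
    # Several keypoints of image 1 can match the same keypoint of image 2,
    # so the raw ratio may exceed 1; cap it to keep the score bounded
    return min(1.0, num_good / smaller)


print(symmetric_score(30, 100, 60))  # 0.5
print(symmetric_score(0, 0, 10))     # 0.0
```

In the article's code, the inputs would be `len(good_matches)`, `len(kp1)`, and `len(kp2)`.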

**Performance Considerations:**

* **Computational Cost:** SIFT can be computationally expensive, especially for large images. Consider using faster alternatives like ORB or AKAZE if speed is critical.

* **Library Optimization:** Prebuilt OpenCV packages are compiled with platform-specific optimizations. You can inspect what your build enables with `cv2.getBuildInformation()`.

**Alternatives and Extensions:**

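* **Feature Descriptors:** Explore other feature descriptors like SURF, ORB, BRISK, or AKAZE. They might offer different trade-offs in terms of speed and accuracy. Note that SURF is patented and is only available in `opencv-contrib` builds with non-free algorithms enabled.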

* **Homography Estimation:** After finding good matches, you can use techniques like RANSAC with `cv2.findHomography` to estimate the geometric transformation between images. This is useful for image alignment and stitching.

## Summary

| Step | Description | Code |
|---|---|---|
| 1. **Load Images** | Load two grayscale images. | `img1 = cv2.imread('image1.jpg', cv2.IMREAD_GRAYSCALE)`<br>`img2 = cv2.imread('image2.jpg', cv2.IMREAD_GRAYSCALE)` |
| 2. **SIFT Feature Extraction** | Create a SIFT object and detect keypoints and descriptors for both images. | `sift = cv2.SIFT_create()`<br>`kp1, des1 = sift.detectAndCompute(img1, None)`<br>`kp2, des2 = sift.detectAndCompute(img2, None)` |
| 3. **Feature Matching** | Use a Brute-Force Matcher to find the two nearest neighbors for each descriptor of the first image among the descriptors of the second. | `bf = cv2.BFMatcher()`<br>`matches = bf.knnMatch(des1, des2, k=2)` |
| 4. **Ratio Test Filtering** | Apply a ratio test to keep only good matches (where the distance to the best match is significantly smaller than the distance to the second-best match). | `good_matches = [[m] for m, n in matches if m.distance < 0.75 * n.distance]` |
| 5. **Visualize Matches (Optional)** | Draw lines connecting the matched keypoints between the two images. | `img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, good_matches, None, flags=2)` |
| 6. **Similarity Score (Optional)** | Calculate a similarity score as the fraction of matches that pass the ratio test. | `similarity_score = len(good_matches) / len(matches)` |

**Summary:** This code performs image similarity comparison using SIFT feature extraction and matching. It identifies keypoints in two images, describes them using SIFT descriptors, matches these descriptors, filters out poor matches, and optionally visualizes the results. A similarity score can be calculated based on the number of good matches.

## Conclusion

This code provides a practical implementation of image matching using the SIFT algorithm in Python. By understanding the steps involved, including feature detection, descriptor computation, matching, and filtering, you can adapt this code for various applications requiring image comparison and analysis. Remember to consider the performance implications and explore alternative algorithms and optimizations based on your specific needs.
