Detector, Extractor, Matcher Classification Guide

This article explores the different types of detectors, extractors, and matchers used in computer vision and pattern recognition.

Image matching is a fundamental task in computer vision, enabling applications like image stitching, 3D reconstruction, and object tracking. This process typically involves three key steps: feature detection, feature description, and feature matching. First, we identify salient and repeatable points in images, called features. Then, we characterize these features using descriptors that are robust to variations in viewpoint and illumination. Finally, we compare descriptors across images to establish correspondences. This article provides a concise overview of these steps using OpenCV, a popular computer vision library, through its Python API.

Feature Detection: Identify interesting points in an image. These points are distinctive and repeatable.

import cv2

img = cv2.imread('image.jpg', 0)
fast = cv2.FastFeatureDetector_create()
keypoints = fast.detect(img, None)

Feature Description: For each detected point, compute a descriptor that captures its characteristics. This descriptor should be robust to changes in viewpoint, illumination, etc.

sift = cv2.SIFT_create()
keypoints, descriptors = sift.compute(img, keypoints)

Feature Matching: Compare descriptors from different images to find correspondences. This helps in tasks like image stitching or object recognition.

# descriptors1 and descriptors2 are the descriptors computed from the two images
bf = cv2.BFMatcher()
matches = bf.knnMatch(descriptors1, descriptors2, k=2)
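To make the matching step more concrete, here is a minimal pure-Python sketch of what a brute-force k-nearest-neighbour search does: for each descriptor from the first image, it measures the Euclidean distance to every descriptor from the second image and keeps the k closest. The function name `knn_match` and the tiny 2-D "descriptors" are hypothetical and chosen for illustration only; this is not OpenCV's implementation, and real SIFT descriptors are much longer vectors.

```python
import math

def knn_match(query, train, k=2):
    """For each query descriptor, return its k nearest train descriptors
    as (train_index, distance) pairs, closest first."""
    matches = []
    for q in query:
        # Distance from this query descriptor to every train descriptor
        dists = sorted((math.dist(q, t), j) for j, t in enumerate(train))
        matches.append([(j, d) for d, j in dists[:k]])
    return matches

# Toy 2-D descriptors (illustrative values only)
query = [(0.0, 0.0), (5.0, 5.0)]
train = [(0.0, 1.0), (5.0, 4.0), (9.0, 9.0)]

result = knn_match(query, train, k=2)
for i, pair in enumerate(result):
    print(i, pair)
```

Each entry of `result` holds the best and second-best candidate for one query descriptor. Keeping the runner-up is what makes a ratio test possible afterwards.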

The following Python code performs image feature matching with the OpenCV library. It loads two images, detects keypoints using the FAST algorithm, and describes them using SIFT descriptors. A brute-force matcher then finds, for each descriptor in the first image, its two nearest descriptors in the second image. A ratio test discards ambiguous matches. Finally, the code visualizes the matched keypoints between the two images.

import cv2

# Load both images as grayscale
img1 = cv2.imread('image1.jpg', cv2.IMREAD_GRAYSCALE)
img2 = cv2.imread('image2.jpg', cv2.IMREAD_GRAYSCALE)
if img1 is None or img2 is None:
    raise FileNotFoundError("Could not read image1.jpg or image2.jpg")

# --- Feature Detection ---
# Choose a feature detector (FAST, SIFT, SURF, ORB)
# Example: Using FAST
fast = cv2.FastFeatureDetector_create()
keypoints1 = fast.detect(img1, None)
keypoints2 = fast.detect(img2, None)

# --- Feature Description ---
# Choose a feature descriptor (SIFT, SURF, BRIEF, ORB)
# Example: Using SIFT
sift = cv2.SIFT_create()
# compute() may drop or add keypoints, so keep the returned list
# to stay aligned with the descriptor rows
keypoints1, descriptors1 = sift.compute(img1, keypoints1)
keypoints2, descriptors2 = sift.compute(img2, keypoints2)

# --- Feature Matching ---
# Choose a matching algorithm (Brute-Force, FlannBased)
# Example: Using Brute-Force Matcher
bf = cv2.BFMatcher()
matches = bf.knnMatch(descriptors1, descriptors2, k=2)

# --- Filter Matches (Optional) ---
# Apply ratio test to filter good matches
good_matches = []
for m, n in matches:
    if m.distance < 0.75 * n.distance:
        good_matches.append([m])

# --- Visualize Matches ---
img_matches = cv2.drawMatchesKnn(
    img1, keypoints1, img2, keypoints2, good_matches, None,
    flags=cv2.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS,
)
cv2.imshow("Matches", img_matches)
cv2.waitKey(0)
cv2.destroyAllWindows()

Explanation:

Feature Detection: cv2.FastFeatureDetector_create() creates the FAST detector used to detect keypoints in both images.

Feature Description: cv2.SIFT_create() creates the SIFT extractor used to compute a descriptor for each detected keypoint.

Feature Matching: cv2.BFMatcher() creates the brute-force matcher used to find the best matches between descriptors from the two images.

Visualization: cv2.drawMatchesKnn() draws the matched keypoints between the two images.
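The ratio test in the listing above uses the factor 0.75 without further comment. The sketch below shows the logic on hand-picked distances, with no OpenCV dependency: a best match is kept only when it is clearly closer than the second-best candidate. The function name `ratio_test`, the `(train_index, distance)` tuples, and the distance values are all hypothetical and serve only as an illustration.

```python
def ratio_test(knn_matches, ratio=0.75):
    """Keep the best match of each pair only if its distance is
    below `ratio` times the distance of the runner-up."""
    kept = []
    for pair in knn_matches:
        if len(pair) < 2:
            continue  # no runner-up to compare against, so skip
        best, second = pair
        if best[1] < ratio * second[1]:
            kept.append(best)
    return kept

# Each inner list: (train_index, distance) for the two nearest candidates
knn_matches = [
    [(0, 10.0), (3, 40.0)],  # 10 < 0.75 * 40: distinctive, kept
    [(1, 30.0), (2, 32.0)],  # 30 >= 0.75 * 32: ambiguous, rejected
    [(4, 5.0)],              # only one candidate: skipped
]

good = ratio_test(knn_matches)
print(good)  # [(0, 10.0)]
```

A lower ratio keeps fewer but more reliable matches, while a higher ratio keeps more matches at the cost of more false correspondences. The value 0.75 is the one used in this article's listing; other values may suit other data better.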

This article outlines the process of finding corresponding points between images using feature detection, description, and matching techniques.

1. Feature Detection:

cv2.FastFeatureDetector_create().detect(img, None)

2. Feature Description:

cv2.SIFT_create().compute(img, keypoints)

3. Feature Matching:

cv2.BFMatcher().knnMatch(descriptors1, descriptors2, k=2)

Key Takeaways:

This process enables various computer vision tasks like image stitching and object recognition by establishing point correspondences between images.
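For readers wondering what makes a point "interesting" to the FAST detector used throughout this article, the sketch below illustrates its core idea, the segment test: a pixel is a corner candidate if enough contiguous pixels on a 16-pixel circle of radius 3 around it are all brighter, or all darker, than the centre by some threshold. This is a simplified pure-Python illustration under stated assumptions (9 contiguous pixels, threshold 10, no non-maximum suppression, images as nested lists); the helper names are hypothetical and this is not OpenCV's implementation.

```python
# Offsets (dx, dy) of the 16 pixels on a radius-3 circle, in circular order
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]

def longest_circular_run(flags):
    """Length of the longest run of True values, treating the list as circular."""
    if all(flags):
        return len(flags)
    best = run = 0
    for f in flags + flags:  # doubling the list handles wrap-around runs
        run = run + 1 if f else 0
        best = max(best, run)
    return best

def is_fast_corner(img, x, y, threshold=10, n=9):
    """Segment test at (x, y); the pixel must be at least 3 pixels from the border."""
    center = img[y][x]
    ring = [img[y + dy][x + dx] for dx, dy in CIRCLE]
    brighter = [v > center + threshold for v in ring]
    darker = [v < center - threshold for v in ring]
    return max(longest_circular_run(brighter), longest_circular_run(darker)) >= n

# 7x7 toy images: a bright square corner, a straight vertical edge, a flat patch
corner_img = [[200 if x >= 3 and y >= 3 else 0 for x in range(7)] for y in range(7)]
edge_img = [[200 if x >= 3 else 0 for x in range(7)] for y in range(7)]
flat_img = [[50] * 7 for _ in range(7)]

print(is_fast_corner(corner_img, 3, 3))  # True
print(is_fast_corner(edge_img, 3, 3))    # False
print(is_fast_corner(flat_img, 3, 3))    # False
```

The corner passes because 11 contiguous circle pixels are darker than the centre, while the straight edge yields only 7, which is why edges and flat regions are not reported as keypoints.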

Image feature matching is a crucial element of computer vision, empowering applications like image stitching, 3D reconstruction, and object tracking. This process involves three fundamental steps: feature detection, feature description, and feature matching. By identifying salient points in images, characterizing them with robust descriptors, and comparing these descriptors across images, we can establish correspondences that underpin these applications. This article provides a practical guide to image feature matching using OpenCV in Python, offering a foundational understanding of the core concepts and demonstrating their implementation. Remember to select detectors, descriptors, and matching algorithms based on the specific requirements of your application, prioritizing speed or accuracy as needed. By mastering these techniques, you unlock a powerful toolkit for tackling a wide range of computer vision challenges.

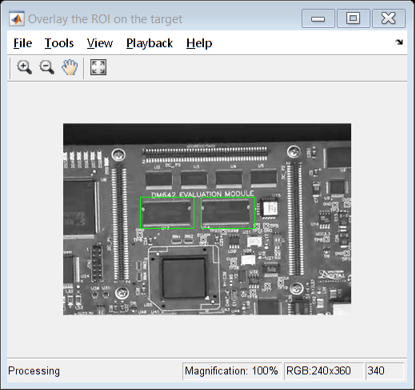

Feature Detection and Extraction | Image registration, interest point detection, feature descriptor extraction, point feature matching, and image retrieval ... detection and classification ...

Feature Detection and Extraction | Image registration, interest point detection, feature descriptor extraction, point feature matching, and image retrieval ... detection and classification ... Local Feature Detection and Extraction | You can mix and match the detectors and the descriptors depending on the requirements of your application. For more details, see Point Feature Types. What ...

Local Feature Detection and Extraction | You can mix and match the detectors and the descriptors depending on the requirements of your application. For more details, see Point Feature Types. What ... An Overview of Audio Event Detection Methods from Feature ... | Audio streams, such as news broadcasting, meeting rooms, and special video comprise sound from an extensive variety of sources. The detection of audio events including speech, coughing, gunshots, e...

An Overview of Audio Event Detection Methods from Feature ... | Audio streams, such as news broadcasting, meeting rooms, and special video comprise sound from an extensive variety of sources. The detection of audio events including speech, coughing, gunshots, e... PCA Feature Extraction for Change Detection in Multidimensional ... | When classifiers are deployed in real-world applications, it is assumed that the distribution of the incoming data matches the distribution of the data used to train the classifier. This assumption is often incorrect, which necessitates some form of change detection or adaptive classification. While there has been a lot of work on change detection based on the classification error monitored over the course of the operation of the classifier, finding changes in multidimensional unlabeled data is still a challenge. Here, we propose to apply principal component analysis (PCA) for feature extraction prior to the change detection. Supported by a theoretical example, we argue that the components with the lowest variance should be retained as the extracted features because they are more likely to be affected by a change. We chose a recently proposed semiparametric log-likelihood change detection criterion that is sensitive to changes in both mean and variance of the multidimensional distribution. An experiment with

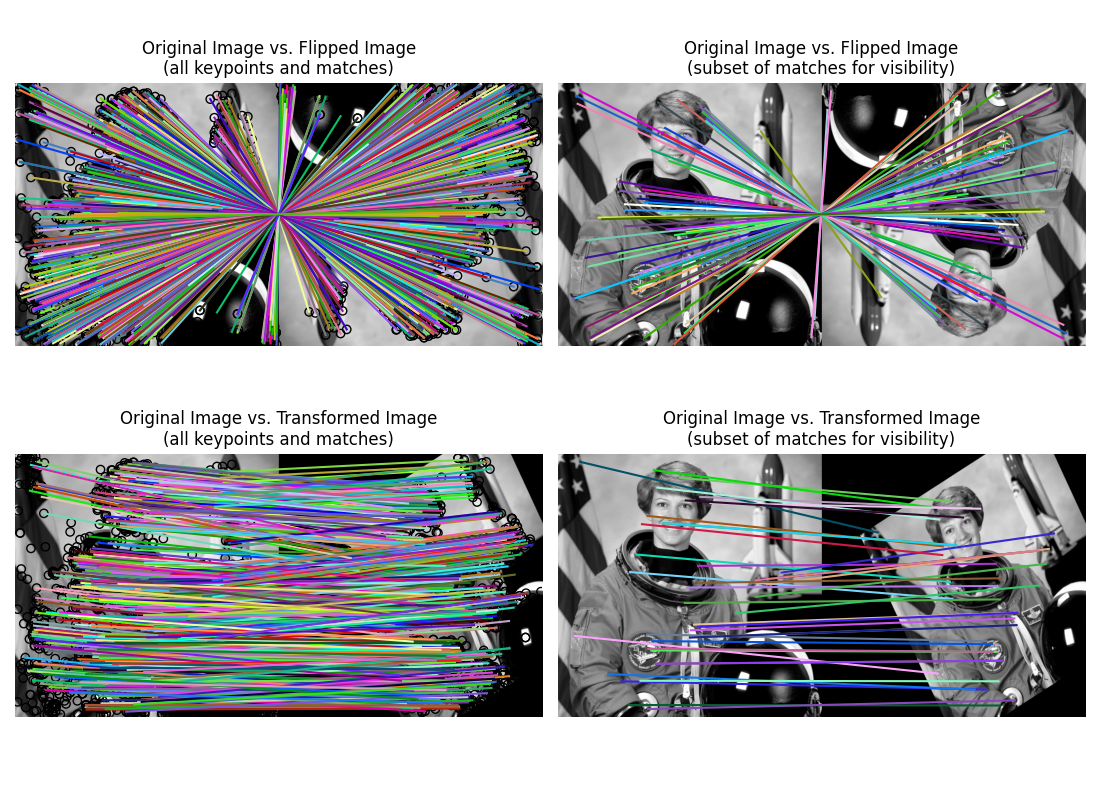

PCA Feature Extraction for Change Detection in Multidimensional ... | When classifiers are deployed in real-world applications, it is assumed that the distribution of the incoming data matches the distribution of the data used to train the classifier. This assumption is often incorrect, which necessitates some form of change detection or adaptive classification. While there has been a lot of work on change detection based on the classification error monitored over the course of the operation of the classifier, finding changes in multidimensional unlabeled data is still a challenge. Here, we propose to apply principal component analysis (PCA) for feature extraction prior to the change detection. Supported by a theoretical example, we argue that the components with the lowest variance should be retained as the extracted features because they are more likely to be affected by a change. We chose a recently proposed semiparametric log-likelihood change detection criterion that is sensitive to changes in both mean and variance of the multidimensional distribution. An experiment with SIFT feature detector and descriptor extractor — skimage 0.23.2 ... | Face classification using Haar-like feature descriptor · Explore 3D images (of ... Flipped Image (all keypoints and matches), Original Image vs. /home ...

SIFT feature detector and descriptor extractor — skimage 0.23.2 ... | Face classification using Haar-like feature descriptor · Explore 3D images (of ... Flipped Image (all keypoints and matches), Original Image vs. /home ... Feature extraction and image classification using OpenCV | Mar 16, 2023 ... Image classification and object detection. Image classification is one of the most promising applications of machine learning aiming to deliver ...

Feature extraction and image classification using OpenCV | Mar 16, 2023 ... Image classification and object detection. Image classification is one of the most promising applications of machine learning aiming to deliver ... skimage.feature — skimage 0.25.1 documentation | Feature detection and extraction, e.g., texture analysis, corners, etc. ... Haar-like features have been successfully used for image classification and object ...

skimage.feature — skimage 0.25.1 documentation | Feature detection and extraction, e.g., texture analysis, corners, etc. ... Haar-like features have been successfully used for image classification and object ...