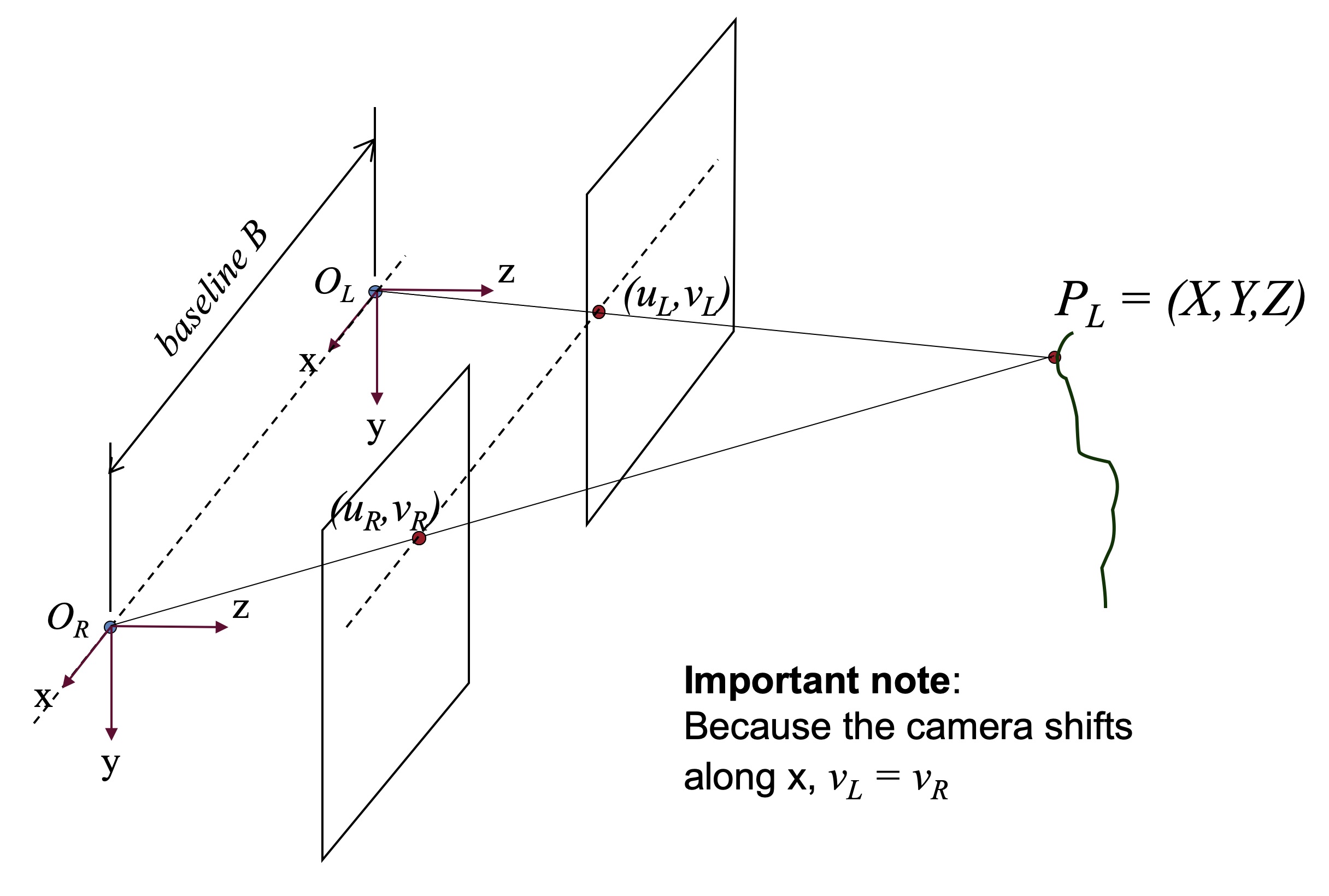

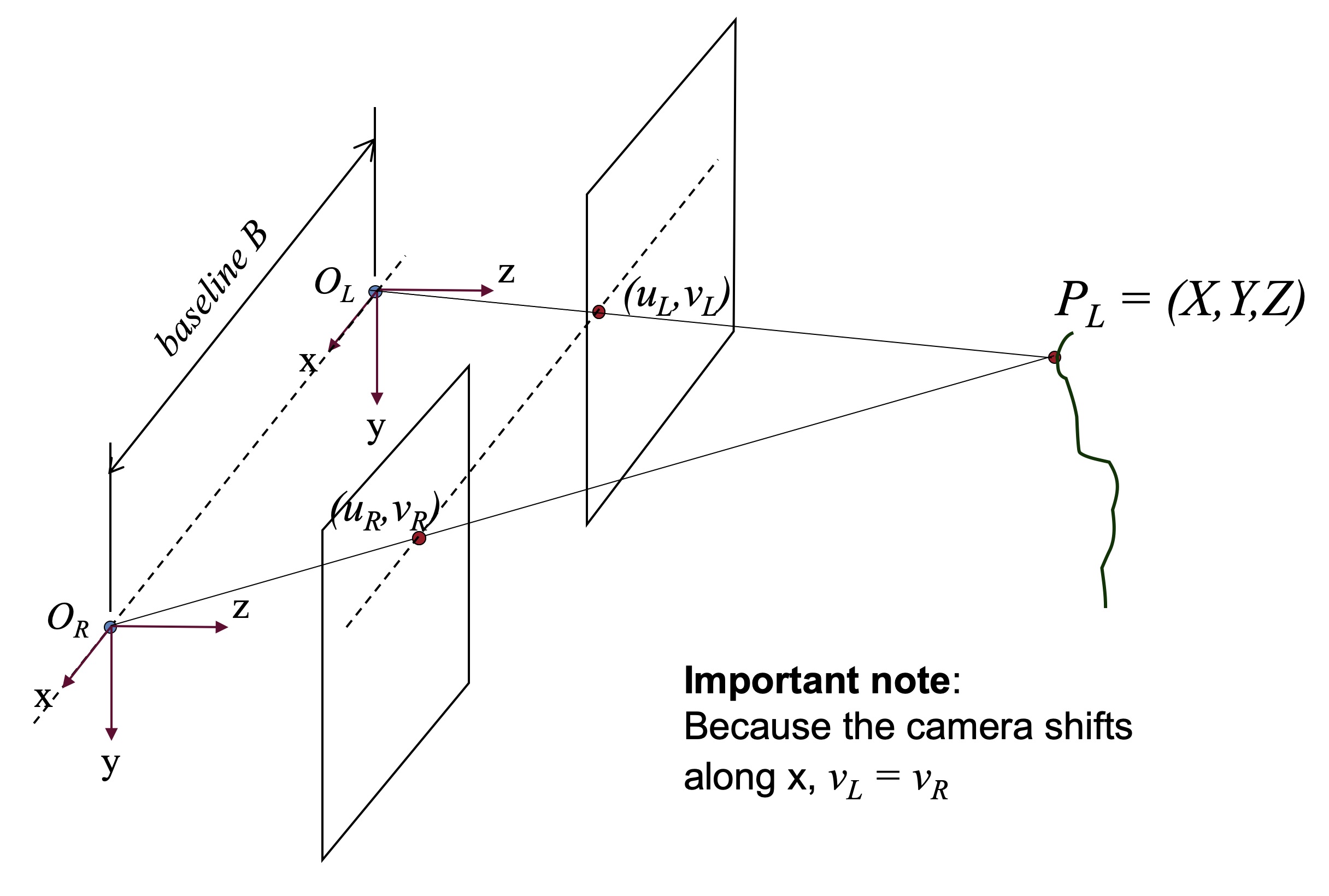

Stereo vision, a technique mimicking human vision, uses two cameras to perceive depth. By analyzing the horizontal shift, known as disparity, between corresponding points in the images from these cameras, we can estimate distances. A disparity map visually represents these disparities, with brighter pixels typically indicating closer objects. While disparity measures the difference in image position, depth refers to the actual distance from the camera. Using disparity, along with camera parameters like focal length and baseline (distance between cameras), we can calculate depth. This depth information is often represented in a depth map, providing a visual representation of the scene's 3D structure.

- Stereo Vision: Imagine two cameras slightly apart, like your eyes. They capture the same scene but from different angles. This setup is the foundation of stereo vision.

      # Example: loading the left and right images
      import cv2

      left_image = cv2.imread('left.jpg')
      right_image = cv2.imread('right.jpg')

- Disparity: Focus on a single point in the left image. Now find the corresponding point in the right image. The horizontal distance between these two points is the disparity. A larger disparity means the point is closer to the cameras.

      # Example (conceptual; actual disparity calculation is more complex)
      x_left = 100   # Point's x-coordinate in the left image
      x_right = 80   # Point's x-coordinate in the right image
      disparity = x_left - x_right  # Simplified disparity (20 pixels)

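- Disparity Map: Instead of a single point, we calculate disparities for all points in the image, creating a disparity map. Brighter pixels in the map usually represent closer objects.

      # Example using OpenCV's StereoBM (basic block matching)
      # StereoBM expects 8-bit single-channel input, so convert to grayscale first
      left_gray = cv2.cvtColor(left_image, cv2.COLOR_BGR2GRAY)
      right_gray = cv2.cvtColor(right_image, cv2.COLOR_BGR2GRAY)
      stereo = cv2.StereoBM_create()
      disparity_map = stereo.compute(left_gray, right_gray)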

- Depth: Disparity and depth are related but not the same. Depth is the actual distance of a point from the camera. We can calculate depth using disparity, the distance between the cameras (baseline), and their focal length. Depth is inversely proportional to disparity, and it comes out in the same unit as the baseline.

      # Simplified depth calculation
      focal_length = 500  # Example focal length in pixels
      baseline = 60       # Distance between cameras in mm
      depth = (focal_length * baseline) / disparity  # Depth in mm

- Depth Map: Similar to a disparity map, a depth map provides the distance (usually in millimeters or meters) of each pixel from the camera.
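To make the inverse relationship between disparity and depth concrete, here is a minimal sketch that reuses the example focal length (500 px) and baseline (60 mm) from above. The function name `depth_from_disparity` is hypothetical and exists only for this illustration; it assumes a rectified stereo pair.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_mm):
    """Return depth in mm for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive to yield a finite depth")
    return focal_length_px * baseline_mm / disparity_px


# Halving the disparity doubles the estimated depth.
for d in (40, 20, 10):
    print(d, "px ->", depth_from_disparity(d, focal_length_px=500, baseline_mm=60), "mm")
```

Because depth grows as disparity shrinks, small disparity errors on distant objects translate into large depth errors.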

In summary:

- Disparity: Difference in horizontal position of corresponding points in stereo images.
- Disparity Map: An image where each pixel's brightness represents disparity.
- Depth: Actual distance of a point from the camera.
- Depth Map: An image where each pixel represents the depth at that point.

This Python code performs stereo depth estimation using OpenCV. It loads left and right rectified images, computes the disparity map using Stereo Block Matching, and then estimates the depth map using camera parameters (focal length and baseline). The disparity and depth maps are displayed for visualization.

    import cv2
    import numpy as np

    # Load left and right images (make sure they are rectified)
    left_image = cv2.imread('left.jpg', cv2.IMREAD_GRAYSCALE)
    right_image = cv2.imread('right.jpg', cv2.IMREAD_GRAYSCALE)

    # 1. Stereo Matching (Disparity Calculation)
    # -------------------------------------------
    # Create a StereoBM object (you can experiment with different parameters)
    stereo = cv2.StereoBM_create(numDisparities=16, blockSize=15)

    # Compute the disparity map. StereoBM returns 16-bit fixed-point values
    # scaled by 16, so divide by 16 to get disparities in pixels.
    disparity_map = stereo.compute(left_image, right_image).astype(np.float32) / 16.0

    # Scale and display a copy of the disparity map (for visualization only)
    disparity_vis = cv2.normalize(disparity_map, None, 0, 255, cv2.NORM_MINMAX, cv2.CV_8U)
    cv2.imshow('Disparity Map', disparity_vis)

    # 2. Depth Estimation
    # ---------------------
    # Camera parameters (you need to calibrate your cameras to get accurate values)
    focal_length = 500  # In pixels
    baseline = 60       # In millimeters

    # Create a depth map from the unscaled disparities (avoiding division by zero)
    depth_map = np.zeros_like(disparity_map, dtype=np.float32)
    depth_map[disparity_map > 0] = (focal_length * baseline) / disparity_map[disparity_map > 0]

    # Scale and display a copy of the depth map (for visualization only)
    depth_vis = cv2.normalize(depth_map, None, 0, 255, cv2.NORM_MINMAX, cv2.CV_8U)
    cv2.imshow('Depth Map', depth_vis)

    cv2.waitKey(0)
    cv2.destroyAllWindows()

Explanation:

- Image Loading and Grayscale Conversion:
  - Load the left and right images using `cv2.imread`.
  - Load them as grayscale by passing `cv2.IMREAD_GRAYSCALE`, because StereoBM matches on intensity and expects 8-bit single-channel input.

- Stereo Matching (Disparity Calculation):
  - `cv2.StereoBM_create()`: Create a Stereo Block Matching (BM) object. This algorithm compares blocks of pixels between the images to find correspondences.
    - `numDisparities`: Size of the disparity search range in pixels; it must be positive and divisible by 16.
    - `blockSize`: Size of the blocks used for matching; it must be odd. Larger values are more robust to noise and weak texture but blur depth edges and fine detail.
  - `stereo.compute()`: Calculate the disparity map. StereoBM returns 16-bit fixed-point disparities scaled by 16, so divide by 16 to obtain values in pixels.
  - Normalization: The disparity map values are normalized to the 0-255 range for display.

- Depth Estimation:
  - Camera Parameters: You must provide accurate `focal_length` (in pixels) and `baseline` (distance between cameras in mm) values. These are obtained through camera calibration.
  - Depth Calculation: The depth for each pixel is calculated using the formula `depth = (focal_length * baseline) / disparity`.
  - Handling Zero Disparity: We avoid division by zero by only calculating depth where `disparity_map > 0`.

- Visualization:
  - Both the disparity map and depth map are normalized and displayed using `cv2.imshow`.
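The disparity-to-depth step can be isolated and checked without any images. The sketch below assumes the raw map follows StereoBM's fixed-point convention (16-bit integers scaled by 16) and treats non-positive values as invalid; the helper name `disparity_to_depth` and the small input array are made up for illustration.

```python
import numpy as np


def disparity_to_depth(raw_disparity, focal_length_px, baseline_mm):
    """Convert a fixed-point disparity map (scaled by 16) to depth in mm."""
    disparity_px = raw_disparity.astype(np.float32) / 16.0
    depth_mm = np.zeros_like(disparity_px)
    valid = disparity_px > 0  # skip invalid or zero disparities
    depth_mm[valid] = focal_length_px * baseline_mm / disparity_px[valid]
    return depth_mm


# Hypothetical raw values: 320 is 20 px, 160 is 10 px, -16 and 0 are invalid.
raw = np.array([[320, 160], [-16, 0]], dtype=np.int16)
depth = disparity_to_depth(raw, focal_length_px=500, baseline_mm=60)
print(depth)
```

Keeping the metric disparity separate from the 0-255 image used for display matters here: depth computed from a normalized visualization would no longer be in millimeters.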

Important Notes:

- Camera Calibration: Accurate depth estimation heavily relies on precise camera calibration to determine the focal length and baseline.
- Image Rectification: The input images should be rectified, meaning they are aligned as if captured by perfectly parallel cameras. This step is crucial for accurate disparity calculation.
- Algorithm Choice: StereoBM is a basic block matching algorithm. More advanced algorithms like StereoSGBM (Semi-Global Block Matching) or deep learning-based methods can provide more accurate results, especially in challenging scenes.
- Real-World Applications: Stereo vision is used in robotics (navigation, obstacle avoidance), autonomous driving, 3D modeling, and more.

General Concepts:

- Epipolar Geometry: The relationship between corresponding points in stereo images is constrained by epipolar lines. Understanding this geometry is crucial for efficient stereo matching. Rectification aligns these lines horizontally, simplifying the search for correspondences.
- Occlusion: Regions visible in one image but hidden in the other due to perspective differences. These areas create holes in the disparity map and pose challenges for depth estimation.
- Matching Ambiguity: Repetitive textures or featureless regions can lead to incorrect matches, resulting in noisy or inaccurate disparity maps.
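To show why rectification simplifies matching, here is a deliberately naive block matcher written with NumPy. It is a teaching sketch, not how OpenCV implements StereoBM: for each pixel in the left image it searches only along the same row of the right image and picks the shift with the lowest sum of absolute differences (SAD). The function name and the synthetic images are assumptions for illustration.

```python
import numpy as np


def sad_block_match(left, right, max_disparity=16, block_size=5):
    """Brute-force SAD block matching on rectified grayscale images."""
    half = block_size // 2
    height, width = left.shape
    left_f = left.astype(np.float32)
    right_f = right.astype(np.float32)
    disparity = np.zeros((height, width), dtype=np.int32)
    for y in range(half, height - half):
        # Start far enough right that every candidate block stays inside the image.
        for x in range(half + max_disparity, width - half):
            block = left_f[y - half:y + half + 1, x - half:x + half + 1]
            costs = [
                np.abs(block - right_f[y - half:y + half + 1,
                                       x - d - half:x - d + half + 1]).sum()
                for d in range(max_disparity + 1)
            ]
            disparity[y, x] = int(np.argmin(costs))  # lowest SAD cost wins
    return disparity


# Synthetic rectified pair: random texture shifted horizontally by 4 pixels.
rng = np.random.default_rng(0)
right = rng.integers(0, 256, size=(20, 40), dtype=np.uint8)
left = np.roll(right, 4, axis=1)
disparity = sad_block_match(left, right, max_disparity=8, block_size=5)
print(np.unique(disparity[2:-2, 10:-2]))
```

On a featureless region every candidate shift would have nearly the same cost, so the `argmin` becomes arbitrary; this is the matching ambiguity described above.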

Code Example Enhancements:

- Error Handling: The code assumes ideal conditions. In reality, you should include checks for successful image loading and handle potential errors during disparity calculation.
- Parameter Tuning: The `numDisparities` and `blockSize` parameters significantly impact the results. Experiment with different values based on your scene and camera setup.
- Advanced Algorithms: Explore StereoSGBM or deep learning-based methods (e.g., PSMNet, StereoNet) for improved accuracy, especially in challenging scenarios.
- Post-processing: Apply filtering or smoothing techniques to the disparity map to reduce noise and improve the visual quality of the depth map.
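One inexpensive safeguard when experimenting with parameters is to validate them before creating the matcher. The helper below is hypothetical and encodes the StereoBM constraints as I understand them (a positive `numDisparities` divisible by 16, and an odd `blockSize` between 5 and 255); check the OpenCV documentation for your version before relying on these limits.

```python
def validate_stereo_bm_params(num_disparities, block_size):
    """Return a list of problems with the given StereoBM parameters (empty if none)."""
    problems = []
    if num_disparities <= 0 or num_disparities % 16 != 0:
        problems.append("numDisparities must be positive and divisible by 16")
    if block_size % 2 == 0 or not 5 <= block_size <= 255:
        problems.append("blockSize must be odd and within 5..255")
    return problems


print(validate_stereo_bm_params(16, 15))  # the values used in the example above
print(validate_stereo_bm_params(20, 8))   # both parameters are invalid
```

Returning a list of messages instead of raising on the first problem makes it easier to report every bad parameter at once during a tuning run.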

Real-World Considerations:

- Computational Cost: Stereo matching can be computationally expensive, especially for high-resolution images or real-time applications. Consider hardware acceleration (GPUs) or optimized algorithms.
- Baseline Selection: A larger baseline increases depth accuracy but also increases the likelihood of occlusions and makes matching more difficult.
- Applications Beyond Depth: Stereo vision can also be used for object recognition, tracking, and scene understanding by providing 3D information.
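The baseline trade-off can be quantified with a first-order error estimate. Differentiating depth = (focal_length * baseline) / disparity with respect to disparity gives a depth uncertainty of roughly depth² × disparity_error / (focal_length × baseline). The sketch below applies that approximation; the function name, the 2 m test distance, and the one-pixel matching error are assumptions for illustration.

```python
def depth_uncertainty_mm(depth_mm, focal_length_px, baseline_mm, disparity_error_px=1.0):
    """First-order depth error caused by a given disparity error."""
    return depth_mm ** 2 * disparity_error_px / (focal_length_px * baseline_mm)


# Compare two baselines for an object 2 m away and a 1-pixel matching error.
for baseline in (60, 120):
    error = depth_uncertainty_mm(2000, focal_length_px=500, baseline_mm=baseline)
    print(f"baseline {baseline} mm -> about {error:.1f} mm depth uncertainty")
```

Under this approximation, doubling the baseline halves the depth uncertainty at a given distance, while the error still grows with the square of the distance.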

Further Exploration:

- Camera Calibration Techniques: Learn about camera calibration methods (e.g., using checkerboard patterns) to obtain accurate intrinsic and extrinsic camera parameters.
- OpenCV Documentation: Refer to the OpenCV documentation for detailed explanations of stereo matching algorithms, parameters, and examples: https://docs.opencv.org/
- Research Papers: Explore recent research papers on stereo vision and depth estimation for the latest advancements and techniques.

This text describes how stereo vision works to estimate depth:

1. Stereo Setup: Two cameras, slightly apart like human eyes, capture the same scene from different angles.

2. Disparity: The horizontal difference in position of a point in the left image compared to the right image. Larger disparity indicates the point is closer to the cameras.

3. Disparity Map: An image where each pixel's brightness represents the disparity at that point. Brighter pixels generally indicate closer objects.

4. Depth: The actual distance of a point from the camera. It's calculated using disparity, the distance between the cameras (baseline), and their focal length.

5. Depth Map: An image where each pixel represents the depth at that point, providing a visual representation of the scene's 3D structure.

In essence: Stereo vision uses the difference in perspective between two images (disparity) to calculate the distance of objects from the camera (depth), creating a depth map that mimics human 3D perception.

Stereo vision, by mimicking the way human eyes perceive depth, enables machines to see the world in three dimensions. By analyzing the disparity, the horizontal shift in object positions between two images taken from slightly different viewpoints, we can infer depth information. This disparity is visually represented in a disparity map, where brighter pixels typically correspond to closer objects. While disparity measures the difference in image coordinates, depth represents the actual distance of a point from the camera. Using disparity, along with camera parameters like focal length and baseline distance, we can accurately calculate depth. This depth information is often visualized as a depth map, providing a compelling representation of the scene's 3D structure. This technology has far-reaching applications, from enabling robots to navigate complex environments and avoid obstacles, to empowering self-driving cars to perceive their surroundings, to creating realistic 3D models for various purposes. As our understanding of stereo vision and depth estimation continues to evolve, we can expect even more innovative applications to emerge, further blurring the lines between human and machine vision.
