Understanding TensorFlow Strides: A Beginner's Guide

Learn how to control the movement of filters in TensorFlow by mastering the strides argument for efficient and effective convolutional neural networks.

In convolutional neural networks, the stride is the parameter that dictates how the convolutional filter traverses the input data. Imagine examining an image with a magnifying glass; the stride determines how many pixels you move the glass with each step. A stride of 1 moves the filter one pixel at a time, while a stride of 2 moves it two pixels at a time, so it starts at every other pixel position. Larger strides produce smaller outputs. When strides are written with four elements, as in the lower-level tf.nn.conv2d op with its default batch, height, width, channels layout, the batch (first) and depth (last) entries are set to 1 so that no sample and no channel is skipped; a common configuration is (1, 2, 2, 1). Keras layers such as tf.keras.layers.Conv2D take only the two spatial strides, for example (2, 2). Changing the strides does not change the number of parameters in the convolutional layer, which is determined by the filter size, the number of input channels, and the number of filters. Strides mainly control the output size and how much of the input the filter "sees".

Strides control the movement of the convolutional filter across your input data.

Think of it like moving a magnifying glass over an image. The stride is how many pixels you shift the glass each time.

# A stride of 2 in both dimensions
strides=(2, 2)

A stride of 1 means you move the filter one pixel at a time. A stride of 2 means you move it two pixels at a time, so it starts at every other pixel. Larger strides lead to smaller output sizes.
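To make the sliding concrete, here is a minimal pure-Python sketch of a single dimension, assuming no padding (what Keras calls "valid" padding). The helper names window_starts and uncovered_pixels are hypothetical, made up for this illustration, and are not part of TensorFlow.

```python
def window_starts(input_size, kernel_size, stride):
    """Start indices of every full filter window along one dimension (no padding)."""
    last_start = input_size - kernel_size
    return list(range(0, last_start + 1, stride))


def uncovered_pixels(input_size, kernel_size, stride):
    """Indices that no filter window ever touches (no padding)."""
    seen = set()
    for start in window_starts(input_size, kernel_size, stride):
        seen.update(range(start, start + kernel_size))
    return [i for i in range(input_size) if i not in seen]


# A 10-pixel row scanned by a 3-pixel filter at different strides.
for stride in (1, 2, 4):
    starts = window_starts(10, 3, stride)
    missed = uncovered_pixels(10, 3, stride)
    print(f"stride {stride}: starts={starts}, output size={len(starts)}, never seen={missed}")
```

With a 10-pixel row and a 3-pixel filter, this gives 8 positions for stride 1 and 4 for stride 2, matching floor((10 - 3) / stride) + 1. At stride 4 the windows no longer overlap, so some pixels are never visited at all.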

When strides are given with four elements, you'll usually set them to 1 for the first (batch) and last (depth) dimensions. This is because you don't want to skip any samples in the batch or any channels.

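# Common stride setup (four-element form, as used by tf.nn.conv2d)
strides=(1, 2, 2, 1) # Batch, height, width, channels

Keras layers such as tf.keras.layers.Conv2D take only the two spatial values, for example strides=(2, 2).

Changing the strides doesn't change the number of parameters in your convolutional layer. The number of parameters depends on the filter size, the number of input channels, and the number of filters.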
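The parameter count can be written out directly. This is a minimal sketch: conv2d_param_count is a hypothetical helper, not a TensorFlow function, and it assumes a standard (non-grouped) convolution with one bias per filter.

```python
def conv2d_param_count(kernel_h, kernel_w, in_channels, filters, use_bias=True):
    """Trainable parameters in a standard 2D convolution layer."""
    weights = kernel_h * kernel_w * in_channels * filters
    biases = filters if use_bias else 0
    return weights + biases


# 3x3 filters over a 3-channel input, 32 filters.
# The stride never appears in the formula, so it cannot change the result.
print(conv2d_param_count(3, 3, 3, 32))  # 896
```

Calling count_params() on two built Keras Conv2D layers that differ only in their strides should report the same number.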

Strides primarily affect the output size and how much the filter "sees" of the input.

This Python code demonstrates the effect of stride on the output size of a convolutional layer in TensorFlow. It defines two convolutional layers with different strides (1 and 2) and applies them to a sample image. The output shapes are printed, showing that a stride of 2 results in a smaller output size compared to a stride of 1.

import tensorflow as tf

# Input data (example)
input_data = tf.random.normal(shape=(1, 10, 10, 3))  # 1 sample, 10x10 image, 3 channels

# Convolutional layer with stride 1
conv_layer_1 = tf.keras.layers.Conv2D(
    filters=32, kernel_size=(3, 3), strides=(1, 1), activation="relu"
)
output_1 = conv_layer_1(input_data)
print("Output shape with stride 1:", output_1.shape)  # Output: (1, 8, 8, 32)

# Convolutional layer with stride 2
conv_layer_2 = tf.keras.layers.Conv2D(
    filters=32, kernel_size=(3, 3), strides=(2, 2), activation="relu"
)
output_2 = conv_layer_2(input_data)
print("Output shape with stride 2:", output_2.shape)  # Output: (1, 4, 4, 32)

# Notice the output size is smaller with a stride of 2.

Explanation:

conv_layer_1: Uses a stride of 1 in both height and width dimensions.

conv_layer_2: Uses a stride of 2 in both height and width dimensions.

The output from conv_layer_2 (with stride 2) is smaller than the output from conv_layer_1 (with stride 1).

Key Points:

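Impact on Receptive Field: Downsampling with a larger stride means each position in later layers corresponds to a wider region of the original input.

Trade-offs: Larger strides reduce computation by shrinking the output, but they can discard fine detail. Smaller strides scan the input more densely and cost more.

Relationship with Other Parameters: The output size depends on the stride together with the filter size and the padding mode.

Common Use Cases: A stride of 2 is often used to downsample feature maps, sometimes in place of a pooling layer.

Experimentation: The best stride depends on the dataset and task, and is usually found by trying several values.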

| Concept | Description |
|---|---|
| Strides | Control how a convolutional filter moves across input data, similar to moving a magnifying glass. |
| Stride Value | Determines the number of pixels the filter shifts in each dimension. |
| Stride of 1 | The filter moves one pixel at a time. |
| Stride of 2 | The filter skips every other pixel. |
| Impact of Strides | Larger strides result in smaller output sizes. |
| Typical Stride Configuration | (1, 2, 2, 1) for batch, height, width, and channels, respectively. |
| Strides and Parameters | Strides don't directly affect the number of parameters in a convolutional layer. |
| Key Effects of Strides | Primarily influence the output size and the portion of the input the filter processes. |

Strides are a fundamental concept in convolutional neural networks, akin to choosing the step size when moving a magnifying glass across an image. They determine how far the convolutional filter shifts over the input data at each step, which directly affects the output size and the features captured. Larger strides save computation by downsampling, but they risk losing information. Smaller strides scan the input in more detail but demand more resources. The best stride value balances these trade-offs; it is often found through experimentation and depends on the specific dataset and task. Understanding how to use strides well is an important part of building efficient and accurate CNNs.
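As a rough illustration of the efficiency side of that trade-off, the sketch below estimates the multiply-accumulate operations for one convolution. It is an approximation under stated assumptions: conv2d_macs is a hypothetical helper (not a TensorFlow function), the filter is square, the stride is the same in both dimensions, there is no padding, and bias additions and activations are ignored.

```python
def conv2d_macs(input_h, input_w, kernel, stride, in_channels, filters):
    """Approximate multiply-accumulate count for one unpadded 2D convolution."""
    out_h = (input_h - kernel) // stride + 1
    out_w = (input_w - kernel) // stride + 1
    macs_per_output = kernel * kernel * in_channels
    return out_h * out_w * filters * macs_per_output


# Same layer as in the example above: 10x10x3 input, 3x3 filters, 32 filters.
for stride in (1, 2):
    macs = conv2d_macs(10, 10, 3, stride, 3, 32)
    print(f"stride {stride}: {macs} multiply-accumulates")
```

For this small example, moving from stride 1 to stride 2 cuts the output from 8x8 to 4x4 positions, so the estimated work drops by a factor of four while the parameter count stays the same.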

tf.keras.layers.Conv2D | TensorFlow v2.16.1 | 2D convolution layer.

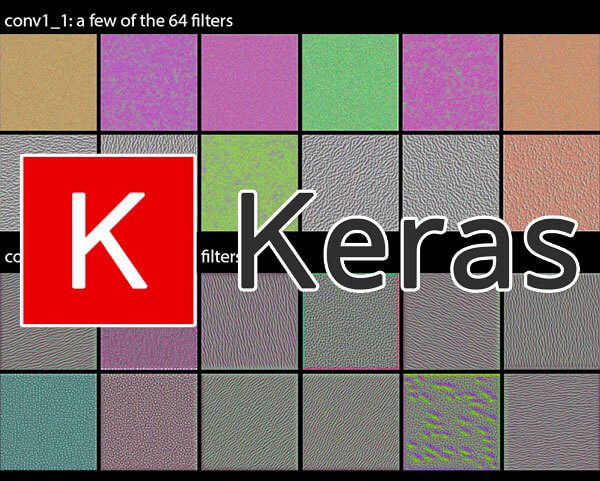

tf.keras.layers.Conv2D | TensorFlow v2.16.1 | 2D convolution layer. Keras Conv2D and Convolutional Layers - PyImageSearch | In this tutorial you will learn about the Keras Conv2D class and convolutions, including the most important parameters you need to tune when training your own Convolutional Neural Networks (CNNs).

Keras Conv2D and Convolutional Layers - PyImageSearch | In this tutorial you will learn about the Keras Conv2D class and convolutions, including the most important parameters you need to tune when training your own Convolutional Neural Networks (CNNs). MaxPooling2D layer | Arguments. pool_size: int or tuple of 2 integers, factors by which to ... strides: int or tuple of 2 integers, or None. Strides values. If None, it ...

MaxPooling2D layer | Arguments. pool_size: int or tuple of 2 integers, factors by which to ... strides: int or tuple of 2 integers, or None. Strides values. If None, it ...