Keras ImageDataGenerator Train Test Split Guide

Learn how to effectively split your image data into training and testing sets using Keras' ImageDataGenerator for accurate model evaluation in your deep learning projects.


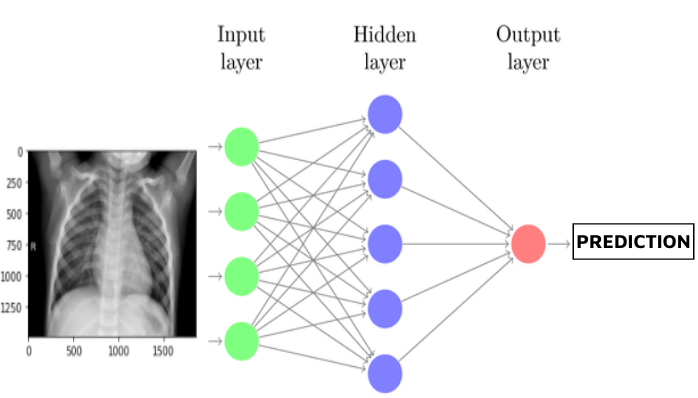

When working with image data for deep learning tasks in Keras, splitting your dataset into training and validation sets is crucial. Training on one portion of the data and evaluating on an unseen portion lets you detect overfitting and estimate how well the model generalizes. Keras' ImageDataGenerator provides tools for image augmentation and for feeding data to your model, and there are two primary ways to split your image data when using it:

Method 1: Using flow_from_directory(subset)

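Organize your data: Put all images in a single directory with one subfolder per class. The subset argument splits this directory according to the validation_split value set on ImageDataGenerator. It does not read separate train/ and validation/ folders. If you point flow_from_directory at a directory that contains train/ and validation/, it treats those two folders as classes. If your images are already divided into such folders, skip subset and validation_split and call flow_from_directory once on each folder.

```
data/
    class_a/
    class_b/
```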

Create two ImageDataGenerator instances: one for training with augmentations and one for validation without augmentations. Give both the same validation_split, which tells subset how much of each class to hold out.

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Same validation_split on both, so the two subsets of data/ don't overlap.
train_datagen = ImageDataGenerator(rescale=1./255, shear_range=0.2, zoom_range=0.2,
                                   horizontal_flip=True, validation_split=0.2)
val_datagen = ImageDataGenerator(rescale=1./255, validation_split=0.2)
```

Use flow_from_directory with the subset argument: Specify subset='training' for the training generator and subset='validation' for the validation generator.

```python
train_generator = train_datagen.flow_from_directory(
    'data/',
    target_size=(64, 64),
    batch_size=32,
    class_mode='categorical',
    subset='training'
)

validation_generator = val_datagen.flow_from_directory(
    'data/',
    target_size=(64, 64),
    batch_size=32,
    class_mode='categorical',
    subset='validation'
)
```

Method 2: Manual Splitting with take() and skip()

Organize your data: Have all images in a single directory with subfolders for each class.

```
data/
    class_a/
    class_b/
```

Use image_dataset_from_directory: Load the dataset using this function.

```python
from tensorflow.keras.utils import image_dataset_from_directory

dataset = image_dataset_from_directory(
    'data/',
    labels='inferred',
    label_mode='categorical',
    image_size=(64, 64),
    interpolation='nearest',
    batch_size=32,
    shuffle=True
)
```

Split the dataset: Use take() and skip() to divide the dataset into training and validation sets. The dataset is already batched, so len(dataset) is the number of batches and the split is made in whole batches.

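```python
val_size = int(0.2 * len(dataset))  # number of validation batches
train_ds = dataset.skip(val_size)
val_ds = dataset.take(val_size)
```

Apply rescaling and augmentation to the training set: ImageDataGenerator.flow() expects NumPy arrays, not a tf.data.Dataset, so passing train_ds to it fails. A working alternative is to map Keras preprocessing layers over the datasets. This version has no equivalent of shear_range.

```python
import tensorflow as tf

# Preprocessing layers replace ImageDataGenerator on a tf.data pipeline.
rescale = tf.keras.layers.Rescaling(1./255)
augment = tf.keras.Sequential([tf.keras.layers.RandomFlip('horizontal'),  # ~ horizontal_flip=True
                               tf.keras.layers.RandomZoom(0.2)])          # ~ zoom_range=0.2
train_ds = train_ds.map(lambda x, y: (augment(rescale(x), training=True), y))
val_ds = val_ds.map(lambda x, y: (rescale(x), y))
```

Remember to choose the method that best suits your data organization and workflow.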
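image_dataset_from_directory returns batches, so len(dataset) is a batch count and take()/skip() split by whole batches. As a result, the validation share is only approximately 20%. The plain-Python sketch below works out how many images land on each side. take_skip_split_sizes is a hypothetical helper. It assumes the dataset keeps its final partial batch (the default), so only the last batch can be smaller than batch_size.

```python
import math


def take_skip_split_sizes(num_images, batch_size=32, val_fraction=0.2):
    """Return (num_batches, val_batches, train_batches, val_images, train_images)."""
    # len(dataset) counts batches; only the last batch can be partial.
    num_batches = math.ceil(num_images / batch_size)
    # val_size = int(0.2 * len(dataset)) rounds the batch count down.
    val_batches = int(val_fraction * num_batches)
    train_batches = num_batches - val_batches
    # take() gets the first val_batches batches, which are all full (val_fraction < 1).
    val_images = val_batches * batch_size
    # skip() gets everything else, including the partial last batch.
    train_images = num_images - val_images
    return num_batches, val_batches, train_batches, val_images, train_images


print(take_skip_split_sizes(1000))  # (32, 6, 26, 192, 808)
print(take_skip_split_sizes(100))   # (4, 0, 4, 0, 100)
```

The second call shows the pitfall. With 100 images and batch_size=32 there are only 4 batches, int(0.2 * 4) is 0, and the validation set is empty.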

The code below puts both methods together using TensorFlow and Keras. Method 1 uses flow_from_directory with the subset argument to split a single class-per-subfolder directory according to validation_split. Method 2 loads the dataset with image_dataset_from_directory and splits it manually with take() and skip(). Both rescale all images, apply augmentation to the training data only, and produce separate training and validation inputs. The choice between them depends on how your data is organized and how much control you want over the split.
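To make the Method 1 split concrete, here is a plain-Python sketch of how flow_from_directory assigns files when subset is used, based on our reading of the Keras source. Within each class folder, the filenames are sorted. The first validation_split fraction goes to 'validation' and the rest goes to 'training'. The function keras_style_subset is a hypothetical illustration, not part of Keras. The sketch shows why two separate ImageDataGenerator instances with the same validation_split produce non-overlapping subsets. It also shows why the validation images are not a random sample: if your filenames sort by date or source, the validation set inherits that ordering.

```python
def keras_style_subset(filenames_by_class, validation_split, subset):
    """Hypothetical illustration of the per-class split behind subset=.

    Assumed rule (our reading of Keras' DirectoryIterator): in each class, the
    sorted filenames are cut at int(validation_split * n); the part before the
    cut is 'validation' and the part after it is 'training'.
    """
    if subset not in ('training', 'validation'):
        raise ValueError("subset must be 'training' or 'validation'")
    result = {}
    for class_name in sorted(filenames_by_class):
        files = sorted(filenames_by_class[class_name])
        cut = int(validation_split * len(files))
        result[class_name] = files[:cut] if subset == 'validation' else files[cut:]
    return result


files = {
    'class_a': [f'a_{i:02d}.jpg' for i in range(10)],
    'class_b': [f'b_{i:02d}.jpg' for i in range(5)],
}
val = keras_style_subset(files, 0.2, 'validation')
train = keras_style_subset(files, 0.2, 'training')
print(val)  # {'class_a': ['a_00.jpg', 'a_01.jpg'], 'class_b': ['b_00.jpg']}
print({c: len(v) for c, v in train.items()})  # {'class_a': 8, 'class_b': 4}
```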

```python
# Method 1: Using flow_from_directory(subset)
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# --- Data Organization ---
# Assuming your data is organized as:
# data/
#     class_a/
#     class_b/
# subset splits this single directory according to validation_split.

# --- ImageDataGenerator ---
# Both generators must use the same validation_split.
train_datagen = ImageDataGenerator(
    rescale=1./255,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    validation_split=0.2
)
val_datagen = ImageDataGenerator(rescale=1./255, validation_split=0.2)

# --- Data Generators ---
train_generator = train_datagen.flow_from_directory(
    'data/',
    target_size=(64, 64),
    batch_size=32,
    class_mode='categorical',
    subset='training'
)
validation_generator = val_datagen.flow_from_directory(
    'data/',
    target_size=(64, 64),
    batch_size=32,
    class_mode='categorical',
    subset='validation'
)

# --- Model Training (Example) ---
# Minimal placeholder model; replace with your own layers.
model = tf.keras.models.Sequential([
    tf.keras.Input(shape=(64, 64, 3)),
    tf.keras.layers.Conv2D(16, 3, activation='relu'),
    tf.keras.layers.GlobalAveragePooling2D(),
    tf.keras.layers.Dense(train_generator.num_classes, activation='softmax'),
])
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# The generators define len(), so Keras infers steps_per_epoch and
# validation_steps, including the final partial batch.
model.fit(
    train_generator,
    epochs=10,
    validation_data=validation_generator
)
```
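You may pass steps_per_epoch and validation_steps explicitly, for example as samples // batch_size. If so, the rounding matters. Floor division drops the final partial batch. It can even request zero validation steps when the validation set is smaller than one batch. A small plain-Python comparison (steps_for is a hypothetical helper):

```python
import math


def steps_for(samples, batch_size):
    """Compare floor and ceiling division for steps_per_epoch / validation_steps."""
    floor_steps = samples // batch_size           # drops the last partial batch
    ceil_steps = math.ceil(samples / batch_size)  # covers every sample once
    return floor_steps, ceil_steps


print(steps_for(800, 32))  # (25, 25): evenly divisible, both agree
print(steps_for(810, 32))  # (25, 26): floor leaves 10 images out of each epoch
print(steps_for(20, 32))   # (0, 1): floor asks for zero validation steps
```

The generators returned by flow_from_directory report len() as the ceiling value. Omitting both arguments therefore gives the ceiling behavior automatically.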

```python
# Method 2: Manual Splitting with take() and skip()
import tensorflow as tf
from tensorflow.keras.utils import image_dataset_from_directory

# --- Data Organization ---
# Assuming your data is organized as:
# data/
#     class_a/
#     class_b/

# --- Load Dataset ---
dataset = image_dataset_from_directory(
    'data/',
    labels='inferred',
    label_mode='categorical',
    image_size=(64, 64),
    interpolation='nearest',
    batch_size=32,
    shuffle=True
)

# --- Split Dataset ---
# len(dataset) is the number of batches, so this splits by whole batches.
val_size = int(0.2 * len(dataset))
train_ds = dataset.skip(val_size)
val_ds = dataset.take(val_size)

# --- Rescaling and Augmentation ---
# ImageDataGenerator.flow() expects NumPy arrays, not a tf.data.Dataset,
# so use Keras preprocessing layers instead (no shear layer is used here).
rescale = tf.keras.layers.Rescaling(1./255)
augment = tf.keras.Sequential([
    tf.keras.layers.RandomFlip('horizontal'),
    tf.keras.layers.RandomZoom(0.2),
])
train_ds = train_ds.map(lambda x, y: (augment(rescale(x), training=True), y))
val_ds = val_ds.map(lambda x, y: (rescale(x), y))

# --- Model Training (Example) ---
# Build and compile the model as in Method 1, then:
# model.fit(train_ds, validation_data=val_ds, epochs=10)
```

Explanation:

Method 1:

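flow_from_directory(subset): The key here is the subset argument, which works together with the validation_split argument of ImageDataGenerator. Within each class subfolder, Keras holds out a validation_split fraction of the images for subset='validation' and gives the rest to subset='training'. As long as both generators use the same validation_split, they see different images. It does not look for 'train' or 'validation' subdirectories.

Method 2: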

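image_dataset_from_directory: This function returns a batched tf.data.Dataset covering the entire directory.

Splitting: dataset.take(val_size) keeps the first val_size batches for validation, and dataset.skip(val_size) keeps the rest for training. With shuffle=True, the dataset is reshuffled within a buffer on every pass. A few images near the boundary can therefore move between the two sets from one epoch to the next. Passing validation_split, subset, and seed to image_dataset_from_directory gives a fixed split instead.

Augmentation: ImageDataGenerator.flow() expects NumPy arrays, not a tf.data.Dataset, so it cannot be applied to train_ds directly. Use Keras preprocessing layers mapped over the dataset, or convert the data to NumPy arrays first.

Choosing a Method: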

Method 1 (flow_from_directory with subset) is the simplest choice when all your images sit in one directory with a subfolder per class, since the split is a single argument. Method 2 gives you more direct control over the split and the tf.data pipeline.

General:

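Data augmentation (shear_range, zoom_range, horizontal_flip) creates random variations of the training images and can lead to a more robust model that generalizes better.

Settings such as batch_size, image size (target_size), and augmentation parameters can affect your model's performance, so experiment with different values.

Method 1 (flow_from_directory with subset): images are read from disk one batch at a time, so the whole dataset is never held in memory.

Method 2 (Manual Splitting): image_dataset_from_directory also streams images from disk, so it is not memory intensive by itself, even for very large datasets. Memory use grows only if you cache the dataset in memory or convert it to NumPy arrays.

Code Examples: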

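Adjust class_mode (Method 1) or label_mode (Method 2) if you have a different classification problem. For example, use 'binary' for two classes together with a single sigmoid output unit and the binary_crossentropy loss.

Beyond the Basics: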

ImageDataGenerator.flow_from_dataframe: If your file paths and labels are listed in a CSV file, load it into a pandas DataFrame and use flow_from_dataframe instead of flow_from_directory. It accepts the same subset argument.

Custom generators: For full control, subclass keras.utils.Sequence to write your own data loader. This gives you maximum flexibility in how you load and preprocess your data.

This table summarizes the two methods for splitting image data into training and validation sets:

| Feature | Method 1: flow_from_directory(subset) | Method 2: Manual Splitting with take() and skip() |
|---|---|---|
| Data Organization | All images in a single directory with subfolders for each class; validation_split set on ImageDataGenerator. | All images in a single directory with subfolders for each class. |
| Splitting Mechanism | Uses flow_from_directory with the subset argument to specify 'training' or 'validation'. | Loads with image_dataset_from_directory, then splits using take() and skip(). |
| Flexibility | Less flexible; requires the class-per-subfolder layout and holds out a fixed fraction of each class. | More flexible; allows custom splitting logic and data manipulation. |
| Ease of Use | Simpler implementation; the split is one argument per generator. | Requires additional steps for splitting and applying augmentation. |
| Code Example | `train_generator = train_datagen.flow_from_directory('data/', subset='training')` | `val_size = int(0.2 * len(dataset))`<br>`train_ds = dataset.skip(val_size)` |

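Choose Method 1 if: your images are in one directory with a subfolder per class and you want the simplest setup, with the split controlled by a single validation_split value.

Choose Method 2 if: you want more control over how the data is split and preprocessed, or you are already building a tf.data pipeline.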

Both methods split image data for training and validation, and each has its own advantages depending on your project's needs. You can rely on the convenience of flow_from_directory with validation_split and subset, or on the control of manual splitting with take() and skip(). Either way, picking the approach that fits your data organization and workflow helps make model evaluation reliable. Whichever you choose, keep the validation images unseen during training and apply augmentation only to the training data.
