TensorFlow Keras: Higher Validation Accuracy Than Training Accuracy

This article explores the unusual scenario of achieving higher validation accuracy than training accuracy in TensorFlow and Keras models, examining potential causes and solutions.


In machine learning, it can be puzzling when a model scores higher accuracy on the validation set than on the training set. Although counterintuitive, this usually traces back to specific factors in how the model is trained and how the data is handled. The causes below explain why it happens and what it means for evaluating your model's performance.

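1. Dropout Regularization: Dropout randomly deactivates a fraction of a layer's units on each training step, and only during training. When Keras computes validation accuracy, dropout is turned off and the full network is used. Training accuracy is therefore measured on a deliberately handicapped network, so validation accuracy can come out higher.

```python
import tensorflow as tf

layer = tf.keras.layers.Dropout(0.2)  # 20% dropout
```

2. Data Splits and Luck: With random splits, especially of smaller datasets, the validation set can turn out to be easier than the training set purely by chance.

3. Underfitting (Rare Case): If validation accuracy is significantly higher than training accuracy, the model may be too simple to learn effectively from the training data.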
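To make the training/inference difference concrete, here is a minimal pure-Python sketch of inverted dropout, the scheme the Keras `Dropout` layer documents: during training each unit is zeroed with probability `rate` and the survivors are scaled by `1 / (1 - rate)`; at inference the input passes through unchanged. The function name `dropout` and its list-based interface are illustrative assumptions, not Keras API.

```python
import random


def dropout(values, rate, training, rng):
    """Inverted dropout applied to a list of activations (illustrative sketch).

    Training: each unit is zeroed with probability `rate`; survivors are scaled
    by 1 / (1 - rate) so every unit keeps the same expected value.
    Inference: the input is returned unchanged.
    """
    if not training or rate == 0.0:
        return list(values)
    keep_prob = 1.0 - rate
    # rng.random() >= rate happens with probability 1 - rate, i.e. the unit is kept
    return [v / keep_prob if rng.random() >= rate else 0.0 for v in values]


rng = random.Random(0)  # fixed seed so the mask is reproducible
activations = [1.0] * 10

print(dropout(activations, 0.2, training=False, rng=rng))  # same as the input
print(dropout(activations, 0.2, training=True, rng=rng))   # each entry is 0.0 or 1.25
```

In Keras the same switch is the `training` argument of a layer call: `tf.keras.layers.Dropout(0.2)(x, training=False)` returns `x` unchanged. `model.fit` runs the model with `training=True`, while validation and `model.evaluate` run it in inference mode.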

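What to Do

A slightly higher validation accuracy is usually not a problem. When the gap is large, investigate it: check whether dropout or other regularization explains the difference, whether the validation split happens to be easier, and whether the model is underfitting.

Key Takeaway: Focus on performance on your test set (completely unseen data) as the true measure of your model's generalization ability.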
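One way to gauge how much of a gap comes from the split alone is to re-split the same data with several random seeds and compare. The sketch below assumes a hypothetical fixed model that classifies 80 of 100 examples correctly; `split_accuracies` is an illustrative helper, not a library function. Because the model never changes, any difference between its training and validation accuracy here is purely an artifact of which examples landed in which split.

```python
import random


def split_accuracies(correct, val_fraction, seeds):
    """Re-split a fixed model's per-example results once per seed.

    `correct[i]` says whether the (hypothetical) fixed model classifies example i
    correctly. For each seed the examples are shuffled, the first `val_fraction`
    are held out for validation, and (train_acc, val_acc) is recorded.
    """
    n_val = round(len(correct) * val_fraction)
    results = []
    for seed in seeds:
        flags = list(correct)
        random.Random(seed).shuffle(flags)
        val, train = flags[:n_val], flags[n_val:]
        results.append((sum(train) / len(train), sum(val) / len(val)))
    return results


# Hypothetical fixed model: 80 of 100 examples correct, so 0.80 overall accuracy.
correct = [True] * 80 + [False] * 20
results = split_accuracies(correct, val_fraction=0.2, seeds=range(20))
val_accs = [v for _, v in results]

print("validation accuracy across splits:", min(val_accs), "to", max(val_accs))
print("splits where validation beats training:", sum(v > t for t, v in results))
```

With real data, the same check amounts to calling `train_test_split` with several `random_state` values, or using cross-validation, and seeing whether the validation advantage persists.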

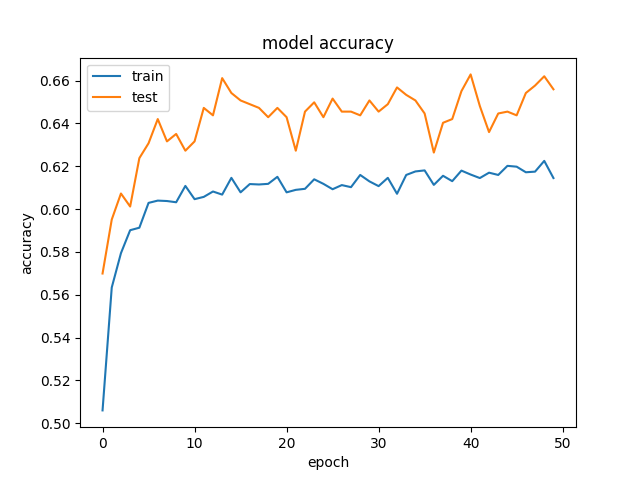

This Python code uses TensorFlow and Keras to build and train a simple neural network for image classification on the MNIST dataset. It preprocesses the data, creates a model with a dropout layer for regularization, trains the model, evaluates its performance on a test set, and visualizes the training process. The code demonstrates a basic example of applying dropout for improving model generalization.

```python
import matplotlib.pyplot as plt
import tensorflow as tf
from sklearn.model_selection import train_test_split
from tensorflow.keras.layers import Dense, Dropout, Input
from tensorflow.keras.models import Sequential

# Load your dataset (replace with your own data)
(X_train, y_train), (X_test, y_test) = tf.keras.datasets.mnist.load_data()

# Preprocess: flatten each 28x28 image into 784 values and scale pixels to [0, 1]
X_train = X_train.reshape(-1, 784).astype('float32') / 255
X_test = X_test.reshape(-1, 784).astype('float32') / 255

# Hold out 20% of the training data as a validation set
X_train, X_val, y_train, y_val = train_test_split(
    X_train, y_train, test_size=0.2, random_state=42
)

# Build a simple model with dropout
model = Sequential([
    Input(shape=(784,)),
    Dense(512, activation='relu'),
    Dropout(0.5),  # 50% dropout, active only during training
    Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Train the model; validation metrics are computed at the end of each epoch
# in inference mode, i.e. with dropout disabled
history = model.fit(X_train, y_train,
                    epochs=10,
                    batch_size=128,
                    validation_data=(X_val, y_val))

# Evaluate on the held-out test set
test_loss, test_acc = model.evaluate(X_test, y_test)
print('Test accuracy:', test_acc)

# Plot training and validation accuracy per epoch
plt.plot(history.history['accuracy'])
plt.plot(history.history['val_accuracy'])
plt.title('Model Accuracy')
plt.ylabel('Accuracy')
plt.xlabel('Epoch')
plt.legend(['Train', 'Validation'], loc='upper left')
plt.show()
```

Explanation: The model adds a Dropout layer with a rate of 0.5 (50%) after the first dense layer.

Observations:

Remember: The test set accuracy is the most reliable indicator of your model's performance on new, unseen data.
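To see how large the gap actually is in each epoch, you can scan the `history.history` dictionary returned by `model.fit`; with `metrics=['accuracy']` and validation data it holds one `'accuracy'` and one `'val_accuracy'` value per epoch. The helper `accuracy_gaps` and its `threshold` parameter are illustrative assumptions, and the numbers in the example dictionary are made up for demonstration, not results from the model above.

```python
def accuracy_gaps(history, threshold=0.0):
    """Return (epoch, val_acc - train_acc) for epochs where validation leads by more than `threshold`.

    `history` is a dict shaped like Keras's `History.history`, with
    'accuracy' and 'val_accuracy' lists holding one value per epoch.
    """
    train = history["accuracy"]
    val = history["val_accuracy"]
    return [
        (epoch, v - t)
        for epoch, (t, v) in enumerate(zip(train, val), start=1)
        if v - t > threshold
    ]


# Made-up values for illustration only.
example = {"accuracy": [0.85, 0.93, 0.95], "val_accuracy": [0.94, 0.96, 0.95]}
for epoch, gap in accuracy_gaps(example, threshold=0.01):
    print(f"epoch {epoch}: validation ahead by {gap:.3f}")
```

With the training script above, `accuracy_gaps(history.history, threshold=0.01)` would list the epochs in which validation accuracy leads by more than one percentage point.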

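| Reason | Explanation |
| --- | --- |
| Dropout Regularization | Dropout is active during training but inactive during validation, so validation accuracy is measured with the full network. |
| Data Splits and Luck | Random splits, especially of smaller datasets, can produce an easier validation set purely by chance. |
| Underfitting (Rare Case) | Significantly higher validation accuracy can mean the model is too simple to learn effectively from the training data. |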

Validation accuracy that exceeds training accuracy, while sometimes unexpected, can usually be attributed to regularization such as dropout, to random variation in how the data was split, or, more rarely, to underfitting. Dropout, which is active during training but inactive during validation, tends to produce more robust models; it also means training accuracy is measured on a deliberately handicapped network while validation accuracy uses the full one. Random splits, especially of smaller datasets, can produce an easier validation set purely by chance. A significantly higher validation accuracy, however, may indicate underfitting, where the model is too simple to learn effectively from the training data. Test set accuracy, computed on completely unseen data, remains the most reliable measure of your model's generalization ability. A slightly higher validation accuracy is generally not a cause for concern, but a substantial gap in either direction warrants investigation for underfitting or overfitting. Aim for a balance between training and validation performance, and rely on the unseen test set for a realistic estimate of real-world performance.
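A further, Keras-specific factor: the training accuracy Keras logs for an epoch is a running average over that epoch's batches, computed while the weights are still changing, whereas validation accuracy is computed once with the weights as they are at the end of the epoch. Early in training, when the model improves quickly within an epoch, this alone can put validation accuracy ahead. The simulation below assumes a hypothetical model whose accuracy rises linearly from 0.50 to 0.95 over 100 training steps, and a validation set as hard as the training data; `logged_vs_end_of_epoch` is an illustrative helper.

```python
def logged_vs_end_of_epoch(step_accuracies):
    """Return (logged_train_acc, end_of_epoch_acc) for one simulated epoch.

    `step_accuracies[i]` is the (hypothetical) accuracy of the model at training
    step i. Keras-style logging averages these over the epoch, while validation
    uses only the final weights, approximated here by the last step's accuracy
    (assuming validation data is as hard as training data).
    """
    logged_train_acc = sum(step_accuracies) / len(step_accuracies)
    end_of_epoch_acc = step_accuracies[-1]
    return logged_train_acc, end_of_epoch_acc


# Hypothetical epoch: accuracy climbs linearly from 0.50 to 0.95 over 100 steps.
steps = [0.50 + 0.45 * i / 99 for i in range(100)]
logged, final = logged_vs_end_of_epoch(steps)
print(f"logged training accuracy: {logged:.3f}")
print(f"end-of-epoch accuracy (what validation sees): {final:.3f}")
```

Later in training, when accuracy barely changes within an epoch, the running average and the end-of-epoch value converge, so this effect is mostly visible in the first few epochs.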
