TensorFlow Datasets: from_tensors vs from_tensor_slices

Learn the key differences between TensorFlow's Dataset.from_tensors and Dataset.from_tensor_slices for efficient data loading and processing.


In TensorFlow, the tf.data.Dataset API provides a flexible and efficient way to create input pipelines for your machine learning models. Two fundamental methods for dataset creation are from_tensors and from_tensor_slices, each serving distinct purposes in data handling.


from_tensors

This method creates a dataset with a single element, which is the entire input tensor.

```python
import tensorflow as tf

tensor = tf.constant([1, 2, 3])
dataset = tf.data.Dataset.from_tensors(tensor)  # one element: [1 2 3]
```

Use from_tensors when you want to treat the entire input as a single element.
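The sketch below makes the single-element behavior concrete. It passes a tuple of two tensors to from_tensors and inspects the result with cardinality() and element_spec. The tuple input, with hypothetical features and labels, is an illustrative assumption and is not part of the original example.

```python
import tensorflow as tf

# Hypothetical inputs: three feature rows and three labels.
features = tf.constant([[1, 2], [3, 4], [5, 6]])
labels = tf.constant([0, 1, 0])

# from_tensors wraps the whole tuple as ONE dataset element.
ds = tf.data.Dataset.from_tensors((features, labels))

print(ds.cardinality().numpy())  # 1

# The single element keeps the full input shapes: (3, 2) and (3,).
print(ds.element_spec)

for feat, lab in ds:
    print(feat.numpy().shape, lab.numpy().shape)  # (3, 2) (3,)
```

No slicing happens here. The one element has the same shapes as the inputs.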

from_tensor_slices

This method creates a dataset where each element is a slice of the input tensor along the first dimension.

```python
import tensorflow as tf

tensor = tf.constant([[1, 2], [3, 4], [5, 6]])
dataset = tf.data.Dataset.from_tensor_slices(tensor)
```

In this example, the dataset will have three elements: [1, 2], [3, 4], and [5, 6].

Use from_tensor_slices when you want to process individual slices of the input tensor.
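A common use of from_tensor_slices is to pair inputs with targets. The sketch below uses hypothetical features and labels tensors. When you pass a tuple, each component is sliced along its first dimension, so element i is (features[i], labels[i]).

```python
import tensorflow as tf

# Hypothetical inputs: three feature rows and one label per row.
features = tf.constant([[1, 2], [3, 4], [5, 6]])
labels = tf.constant([0, 1, 0])

# Each component of the tuple is sliced along its first dimension,
# so element i is the pair (features[i], labels[i]).
ds = tf.data.Dataset.from_tensor_slices((features, labels))

print(ds.cardinality().numpy())  # 3

pairs = [(feat.numpy().tolist(), int(lab.numpy())) for feat, lab in ds]
print(pairs)  # [([1, 2], 0), ([3, 4], 1), ([5, 6], 0)]
```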

Key Differences

- from_tensors creates a dataset with one element. from_tensor_slices creates a dataset with as many elements as the size of the input's first dimension.
- from_tensors uses the entire tensor as a single element. from_tensor_slices uses slices of the tensor as elements.

Choosing the Right Method

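- Use from_tensors when the entire input should be treated as a single element.
- Use from_tensor_slices when you want to process individual slices of the input, one per index along the first dimension.

Remember that when the input to from_tensor_slices is a nested structure, such as a tuple or dict of tensors, every component must have the same size in its 0th dimension.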
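The sketch below shows how the two methods relate to each other, using an arbitrary illustrative tensor t. Calling unbatch() on the single element produced by from_tensors splits it back into the same rows that from_tensor_slices yields. Batching all of the slices together reproduces the original tensor.

```python
import tensorflow as tf

t = tf.constant([[1, 2], [3, 4], [5, 6]])

# from_tensor_slices yields one element per row of t.
slices = tf.data.Dataset.from_tensor_slices(t)

# unbatch() splits the single from_tensors element along its first dimension.
unbatched = tf.data.Dataset.from_tensors(t).unbatch()

slice_rows = [e.numpy().tolist() for e in slices]
unbatched_rows = [e.numpy().tolist() for e in unbatched]
print(slice_rows == unbatched_rows)  # True

# Batching all three slices together gives back one element equal to t.
rebatched = next(iter(slices.batch(3)))
print(bool(tf.reduce_all(tf.equal(rebatched, t))))  # True
```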

This Python code demonstrates how to create TensorFlow datasets from tensors using tf.data.Dataset.from_tensors and tf.data.Dataset.from_tensor_slices. It provides examples of creating datasets from single tensors, slicing tensors into multiple elements, and handling nested elements. The code also includes an example that raises a ValueError to illustrate the importance of consistent dimensions when using from_tensor_slices with nested elements.

```python
import tensorflow as tf

# Example 1: Using from_tensors
tensor = tf.constant([1, 2, 3])
dataset_from_tensors = tf.data.Dataset.from_tensors(tensor)

# Print elements of the dataset (a single element: the whole tensor)
print("Dataset created with from_tensors:")
for element in dataset_from_tensors:
    print(element.numpy())

# Example 2: Using from_tensor_slices
tensor = tf.constant([[1, 2], [3, 4], [5, 6]])
dataset_from_slices = tf.data.Dataset.from_tensor_slices(tensor)

# Print elements of the dataset (one element per row)
print("\nDataset created with from_tensor_slices:")
for element in dataset_from_slices:
    print(element.numpy())

# Example 3: Nested elements with from_tensor_slices
tensor = tf.constant([[[1, 2], [3, 4]], [[5, 6], [7, 8]]])
dataset_nested_slices = tf.data.Dataset.from_tensor_slices(tensor)

# Print elements of the dataset (each element is a 2x2 tensor)
print("\nDataset with nested elements:")
for element in dataset_nested_slices:
    print(element.numpy())

# Example of an error: components whose 0th dimensions differ (3 vs. 2).
# Note: a ragged nested list such as [[[1, 2]], [[3, 4], [5, 6]]] already
# fails inside tf.constant, before from_tensor_slices is ever called.
try:
    features = tf.constant([[1, 2], [3, 4], [5, 6]])
    labels = tf.constant([0, 1])
    dataset_error = tf.data.Dataset.from_tensor_slices((features, labels))
    for element in dataset_error:
        print(element)
except ValueError as e:
    print(f"\nError: {e}")
```

Explanation:

- Example 1 uses from_tensors. It prints the entire input tensor as a single element.
- Example 2 uses from_tensor_slices. It prints each row of the input tensor as a separate element.
- Example 3 uses from_tensor_slices with nested elements. Each element in the dataset is a 2D tensor.
- The last example raises a ValueError to demonstrate the requirement of consistent dimensions in the 0th rank for nested elements when using from_tensor_slices.

Together, these examples highlight the differences between the two methods and their use cases. They also show why nested elements passed to from_tensor_slices need consistent dimensions.

Some additional points to consider:

Performance Implications

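- from_tensors: the entire input becomes a single element, so for a very large input, from_tensors could lead to out-of-memory errors.
- from_tensor_slices: elements are yielded one slice at a time, but the full input tensor is still held in memory (and, in graph mode, embedded in the graph as a constant). It is therefore also best suited to data that fits in memory.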

Beyond the Basics

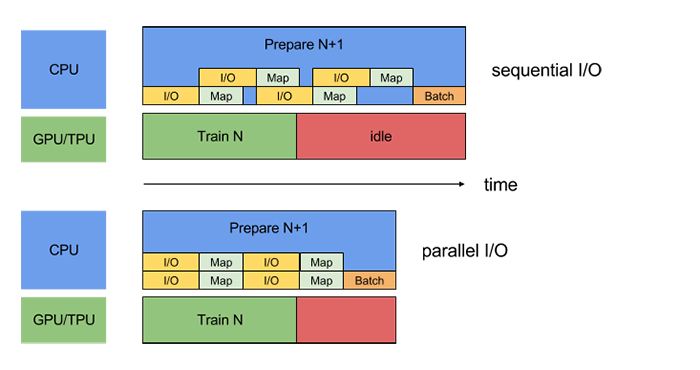

from_tensors and from_tensor_slices are often starting points. You'll usually chain additional tf.data.Dataset transformations:

- shuffle: Randomizes the order of elements.
- batch: Groups elements into batches for training.
- map: Applies a function to each element (e.g., preprocessing).
- filter: Selectively includes elements based on a condition.

Practical Examples
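The sketch below chains the transformations listed above onto a from_tensor_slices dataset. The toy data (integers 0 to 9, labeled 1 when odd and 0 when even), the scaling in map, the filter condition, the seed, and the buffer and batch sizes are all illustrative assumptions.

```python
import tensorflow as tf

# Hypothetical toy data: features 0..9, label 1 for odd values and 0 for even ones.
features = tf.range(10)
labels = features % 2

ds = tf.data.Dataset.from_tensor_slices((features, labels))

ds = (
    ds.shuffle(buffer_size=10, seed=42)                    # randomize element order
    .map(lambda x, y: (tf.cast(x, tf.float32) / 10.0, y))  # preprocessing: scale features
    .filter(lambda x, y: tf.equal(y, 0))                   # keep only even (label 0) examples
    .batch(2)                                              # group into batches of 2
)

batches = [(x.numpy().tolist(), y.numpy().tolist()) for x, y in ds]
for x_batch, y_batch in batches:
    print(x_batch, y_batch)
```

Five of the ten elements pass the filter, so batch(2) produces batches of sizes 2, 2, and 1. This example shuffles before batching. That randomizes which individual elements end up together, instead of only reordering fixed batches.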

- Use from_tensor_slices to load image paths and labels from a list, then use map to load and preprocess the images on the fly.
- Use from_tensor_slices on a larger sequence and then split it into time series windows (for example with Dataset.window).

Important Considerations

Iterating over a tf.data.Dataset can behave slightly differently in eager execution and in graph execution. Eager execution is the default in TensorFlow 2.x. Graph execution is used in TensorFlow 1.x and, in TensorFlow 2.x, inside tf.function for optimized performance. In eager mode, each element is a concrete tensor whose value you can read with .numpy(). In graph mode, the loop is traced into graph operations, so .numpy() is not available.
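The sketch below contrasts the two modes, assuming TensorFlow 2.x. The same dataset is summed once eagerly with .numpy() and once inside a tf.function, where AutoGraph converts the Python for loop over the dataset into graph operations. The function name dataset_sum is hypothetical.

```python
import tensorflow as tf

ds = tf.data.Dataset.from_tensor_slices(tf.constant([1, 2, 3, 4]))

# Eager execution (TF 2.x default): each element is a concrete tensor, so .numpy() works.
eager_total = sum(int(e.numpy()) for e in ds)

@tf.function
def dataset_sum(dataset):
    # Graph execution: AutoGraph turns this loop into graph ops, and the
    # elements are symbolic tensors (calling .numpy() here would fail).
    total = tf.constant(0)
    for element in dataset:
        total += element
    return total

graph_total = int(dataset_sum(ds).numpy())
print(eager_total, graph_total)  # 10 10
```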

This table summarizes the key differences between the from_tensors and from_tensor_slices methods for creating TensorFlow datasets:

| Feature | from_tensors | from_tensor_slices |
|---|---|---|
| Dataset Elements | One element: the entire input tensor | Multiple elements: each a slice of the input tensor along the first dimension |
| Element Type | The entire input tensor | Individual slices of the input tensor |
| Use Case | Processing the entire input as a single unit | Processing individual slices of the input |
| Example | Treat a batch of images as one element | Treat each image in a batch as a separate element |

Important Note: When the input to from_tensor_slices is a nested structure, such as a tuple or dict of tensors, all of its components must have the same size along the 0th dimension (the dimension being sliced).

Understanding the distinction between from_tensors and from_tensor_slices is crucial for constructing efficient TensorFlow input pipelines. Choose from_tensors when the entire input is treated as a single unit, and opt for from_tensor_slices when processing individual slices of the input is necessary. Keep in mind the importance of consistent dimensions in nested elements when using from_tensor_slices. By mastering these methods and leveraging the versatility of the tf.data.Dataset API, you can build robust and high-performance data pipelines for your machine learning models.

Difference Between Dataset.from_tensors and Dataset ... | A Computer Science portal for geeks. It contains well written, well thought and well explained computer science and programming articles, quizzes and practice/competitive programming/company interview Questions.

Difference Between Dataset.from_tensors and Dataset ... | A Computer Science portal for geeks. It contains well written, well thought and well explained computer science and programming articles, quizzes and practice/competitive programming/company interview Questions. Difference Between Dataset.from_tensors and Dataset ... | Difference Between Dataset from tensors and Dataset from tensor slices - Dataset.from_tensors and Dataset.from_tensor_slices are methods in the TensorFlow library that are used to create datasets. Dataset.from_tensor creates a dataset from a single tensor whereas Dataset.from_tensor_slices creates data set by slicing a tensor along the first dimension. In this article, w

Difference Between Dataset.from_tensors and Dataset ... | Difference Between Dataset from tensors and Dataset from tensor slices - Dataset.from_tensors and Dataset.from_tensor_slices are methods in the TensorFlow library that are used to create datasets. Dataset.from_tensor creates a dataset from a single tensor whereas Dataset.from_tensor_slices creates data set by slicing a tensor along the first dimension. In this article, w tf.data.Dataset | TensorFlow v2.16.1 | Represents a potentially large set of elements.

tf.data.Dataset | TensorFlow v2.16.1 | Represents a potentially large set of elements. TFRecords: from_tensor vs from_tensor_slices · GitHub | TFRecords: from_tensor vs from_tensor_slices. GitHub Gist: instantly share code, notes, and snippets.

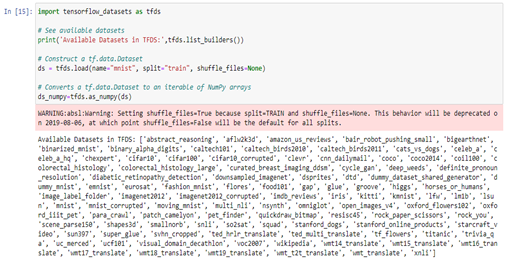

TFRecords: from_tensor vs from_tensor_slices · GitHub | TFRecords: from_tensor vs from_tensor_slices. GitHub Gist: instantly share code, notes, and snippets. Part 3: TensorFlow Datasets. There are definitely some academic ... | There are definitely some academic statisticians who just don’t understand why what I do is statistics, but basically I think they are all…

Part 3: TensorFlow Datasets. There are definitely some academic ... | There are definitely some academic statisticians who just don’t understand why what I do is statistics, but basically I think they are all… Building efficient data pipelines using TensorFlow | by Animesh ... | Having efficient data pipelines is of paramount importance for any machine learning model. In this blog, we will learn how to use…

Building efficient data pipelines using TensorFlow | by Animesh ... | Having efficient data pipelines is of paramount importance for any machine learning model. In this blog, we will learn how to use…