TensorFlow Gradient Clipping: A Practical Guide

Learn how to prevent exploding gradients and stabilize your TensorFlow model training with this comprehensive guide on implementing gradient clipping.

Gradient clipping is a technique used to prevent exploding gradients during training of neural networks. In TensorFlow, you can implement gradient clipping using the following steps:

Calculate gradients:

    with tf.GradientTape() as tape:
        predictions = model(input_data)
        loss = loss_function(labels, predictions)
    gradients = tape.gradient(loss, model.trainable_variables)

Clip gradients:

    clipped_gradients, _ = tf.clip_by_global_norm(gradients, clip_norm=1.0)

Apply clipped gradients:

    optimizer.apply_gradients(zip(clipped_gradients, model.trainable_variables))

Explanation:

The gradients are clipped to a maximum global norm with tf.clip_by_global_norm(). This prevents exploding gradients.

Example:

    # Define optimizer and clip norm
    optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)
    clip_norm = 1.0

    # Training loop
    for batch in dataset:
        # Calculate gradients
        with tf.GradientTape() as tape:
            # ...

        # Clip gradients
        clipped_gradients, _ = tf.clip_by_global_norm(gradients, clip_norm)

        # Apply clipped gradients
        optimizer.apply_gradients(zip(clipped_gradients, model.trainable_variables))

The following Python code defines a simple neural network using TensorFlow and demonstrates one epoch of the training process. It includes steps for defining the model, optimizer, loss function, and gradient clipping. The code generates random data, calculates gradients, clips them to prevent exploding gradients, and applies the clipped gradients to update the model's weights using the Adam optimizer.

    import tensorflow as tf

    # Define a simple model
    model = tf.keras.models.Sequential([
        tf.keras.layers.Dense(10, activation='relu', input_shape=(4,)),
        tf.keras.layers.Dense(1)
    ])

    # Define optimizer, loss function, and clip norm
    optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)
    loss_function = tf.keras.losses.MeanSquaredError()
    clip_norm = 1.0

    # Sample data
    input_data = tf.random.normal((10, 4))
    labels = tf.random.normal((10, 1))

    # Training loop
    epochs = 1
    for epoch in range(epochs):
        for x, y in zip(input_data, labels):
            # Calculate gradients
            with tf.GradientTape() as tape:
                predictions = model(tf.expand_dims(x, axis=0))
                loss = loss_function(tf.expand_dims(y, axis=0), predictions)
            gradients = tape.gradient(loss, model.trainable_variables)

            # Clip gradients
            clipped_gradients, _ = tf.clip_by_global_norm(gradients, clip_norm)

            # Apply clipped gradients
            optimizer.apply_gradients(zip(clipped_gradients, model.trainable_variables))

        print(f'Epoch {epoch+1} finished')

Explanation:

The training loop uses tf.GradientTape() to record the operations and calculate gradients of the loss with respect to the trainable variables.

The gradients are then clipped with tf.clip_by_global_norm() to prevent exploding gradients.

This code demonstrates a single epoch of training. In a real-world scenario, you would iterate over your dataset for multiple epochs to train the model effectively.
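For the multi-epoch case, a minimal sketch might look like the following. It assumes a mini-batched tf.data pipeline over random data, three epochs, and a batch size of 16; the helper name train_step and these values are illustrative choices, not part of the original example. The second value returned by tf.clip_by_global_norm (the norm before clipping) is kept so it can be inspected per epoch.

```python
import tensorflow as tf

tf.random.set_seed(0)

# Same small model as above, declared with an explicit Input layer.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(10, activation="relu"),
    tf.keras.layers.Dense(1),
])
optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)
loss_function = tf.keras.losses.MeanSquaredError()
clip_norm = 1.0

# Random sample data, served in shuffled mini-batches (assumed sizes).
features = tf.random.normal((64, 4))
targets = tf.random.normal((64, 1))
dataset = (
    tf.data.Dataset.from_tensor_slices((features, targets))
    .shuffle(64)
    .batch(16)
)


def train_step(x, y):
    """Run one clipped update; return the loss and the pre-clipping global norm."""
    with tf.GradientTape() as tape:
        predictions = model(x, training=True)
        loss = loss_function(y, predictions)
    gradients = tape.gradient(loss, model.trainable_variables)
    clipped_gradients, global_norm = tf.clip_by_global_norm(gradients, clip_norm)
    optimizer.apply_gradients(zip(clipped_gradients, model.trainable_variables))
    return float(loss), float(global_norm)


epoch_losses = []
for epoch in range(3):
    results = [train_step(x, y) for x, y in dataset]
    mean_loss = sum(loss for loss, _ in results) / len(results)
    max_norm = max(norm for _, norm in results)
    epoch_losses.append(mean_loss)
    print(f"Epoch {epoch + 1}: mean loss {mean_loss:.4f}, max gradient norm {max_norm:.4f}")
```

Batching the data this way means each update is computed from 16 samples rather than one, which is closer to how such a loop is usually run in practice.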

Purpose:

How it Works:

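Gradient clipping sets a threshold (clip_norm) for the global norm of the gradients. If the global norm exceeds this threshold, every gradient is scaled down by the same factor so that the global norm equals clip_norm; otherwise the gradients are left unchanged.

Benefits: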

Variations:

tf.clip_by_global_norm: Clips based on the global norm (the square root of the sum of squares of all gradient elements, across every tensor). This is the most common method.

tf.clip_by_value: Clips individual gradient values to a specified min/max range.

tf.clip_by_norm: Clips each gradient tensor based on its own L2 norm.

Choosing clip_norm:

The clip_norm value is a hyperparameter that needs to be tuned for your specific problem.

When to Use:

Alternatives:

Monitoring:

Monitor gradient norms during training to judge whether adjustments to clip_norm are needed.

The code above demonstrates how to implement gradient clipping during model training in TensorFlow. Gradient clipping is a technique used to prevent exploding gradients, a problem where gradients become excessively large and destabilize the training process.

Here's a breakdown:

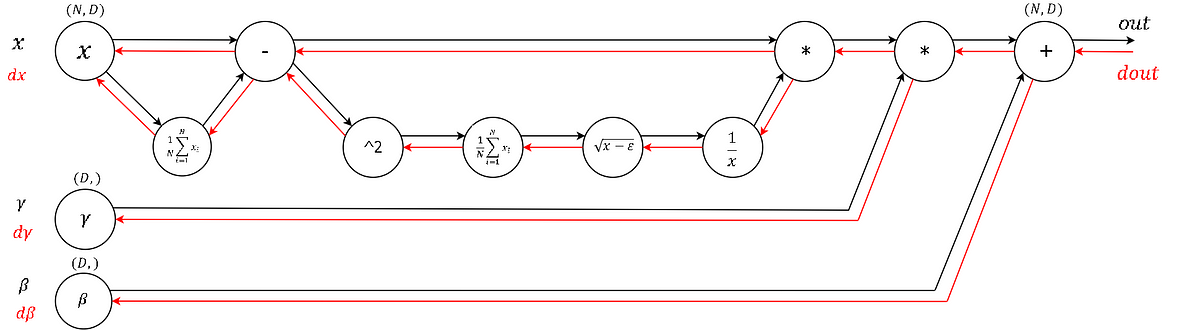

Gradient Calculation: The code first calculates the gradients of the loss function with respect to the model's trainable variables using tf.GradientTape().

Gradient Clipping: Next, it clips the calculated gradients to a maximum global norm using tf.clip_by_global_norm(). This function effectively sets a maximum threshold for the magnitude of the gradients, preventing them from becoming too large.

Gradient Application: Finally, the clipped gradients are applied to update the model's weights using the chosen optimizer (tf.keras.optimizers.Adam in this example).
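To make the clipping step concrete, here is a small plain-Python sketch of the arithmetic on lists of floats. The helper name clip_by_global_norm_sketch is hypothetical; it only mirrors the rule documented for tf.clip_by_global_norm (each gradient is multiplied by clip_norm / max(global_norm, clip_norm)) and is not TensorFlow's implementation.

```python
import math


def clip_by_global_norm_sketch(gradients, clip_norm):
    """Illustrative global-norm clipping for a list of gradient vectors."""
    # Global norm: square root of the sum of squares over every element.
    global_norm = math.sqrt(sum(g * g for grad in gradients for g in grad))
    # The scale is 1.0 when the norm is already within the threshold.
    scale = clip_norm / max(global_norm, clip_norm)
    clipped = [[g * scale for g in grad] for grad in gradients]
    return clipped, global_norm


# Two gradient "tensors" with global norm sqrt(9 + 16 + 144) = 13.
gradients = [[3.0, 4.0], [12.0]]
clipped, global_norm = clip_by_global_norm_sketch(gradients, clip_norm=1.0)
print(global_norm)  # 13.0
print(clipped)      # every element scaled by 1/13

# Gradients already below the threshold pass through unchanged.
small_clipped, small_norm = clip_by_global_norm_sketch([[0.3, 0.4]], clip_norm=1.0)
print(small_clipped)  # [[0.3, 0.4]]
```

Because every element is multiplied by the same factor, the direction of the overall update is preserved and only its length is reduced.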

Benefits of Gradient Clipping:

Key Points:

The clip_norm parameter controls the maximum allowed global norm of the gradients.

Gradient clipping is a crucial technique for stabilizing the training of neural networks, especially in scenarios prone to exploding gradients. By setting a maximum threshold for gradient magnitudes, we prevent drastic weight updates that can hinder convergence. TensorFlow provides convenient functions like tf.clip_by_global_norm to implement this, ensuring smoother and more effective training. Remember that tuning the clip_norm parameter is essential for optimal performance on different tasks and network architectures.
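As a rough comparison of the clipping functions TensorFlow offers, the sketch below applies tf.clip_by_value, tf.clip_by_norm, and tf.clip_by_global_norm to the same pair of toy gradients. The gradient values and the thresholds (1.0 and 4.0) are arbitrary assumptions chosen to keep the arithmetic easy to follow.

```python
import tensorflow as tf

# Toy gradients: per-tensor L2 norms are 5 and 12, global norm is 13.
gradients = [tf.constant([3.0, 4.0]), tf.constant([12.0])]

# Element-wise: each value is limited to the range [-1, 1].
by_value = [tf.clip_by_value(g, clip_value_min=-1.0, clip_value_max=1.0)
            for g in gradients]

# Per tensor: each tensor is rescaled on its own to an L2 norm of at most 4.
by_norm = [tf.clip_by_norm(g, clip_norm=4.0) for g in gradients]

# Global: all tensors share one scale factor (4/13 here), so their
# relative magnitudes are preserved.
by_global, global_norm = tf.clip_by_global_norm(gradients, clip_norm=4.0)

for name, result in [("value", by_value), ("norm", by_norm), ("global", by_global)]:
    print(name, [t.numpy().tolist() for t in result])
print("global norm before clipping:", float(global_norm))
```

Only the global variant keeps the ratio between the two tensors intact, which is one reason it is usually the default choice for clipping a whole model's gradients.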
