OpenCV findHomography: Detecting Bad Homographies

Learn how to identify and handle inaccurate homographies calculated using OpenCV's findHomography function in your computer vision projects.

Finding an accurate homography is crucial for various computer vision tasks like image stitching and augmented reality. While OpenCV simplifies the process with cv2.findHomography, obtaining good results requires careful consideration. Here are five key tips to help you achieve better homography estimation in OpenCV:

Ensure correct point correspondence: The most crucial step is to ensure that the input points to findHomography are correctly matched between the two images. Incorrect ordering will lead to nonsensical homographies.

# pts1 and pts2 should contain corresponding points from image 1 and image 2

H, _ = cv2.findHomography(pts1, pts2)

Use a robust estimation method: findHomography offers several estimation methods. The robust ones (cv2.RANSAC, cv2.LMEDS) tolerate outliers far better than the default (method 0), which is a plain least-squares fit over all points.

H, mask = cv2.findHomography(pts1, pts2, cv2.RANSAC)

Check the reprojection error: After finding the homography, reproject the points from image 1 into image 2 and measure the distance, in pixels, between each reprojected point and its actual match. A high average reprojection error indicates a poor homography.

reproj_pts = cv2.perspectiveTransform(pts1.reshape(-1,1,2), H).reshape(-1,2)

errors = np.linalg.norm(pts2 - reproj_pts, axis=1)

mean_error = np.mean(errors)

Examine the homography matrix: While not foolproof, a sanity check on the homography matrix can sometimes reveal issues. For instance, very large values in the matrix, or a determinant close to zero, might indicate a degenerate mapping.

Visualize the results: Always visually inspect the warped image or the aligned features to verify if the homography makes sense in the context of your application.

Remember, even with these steps, there's no guarantee of a perfect homography, especially in challenging conditions. You might need to incorporate additional checks or domain-specific knowledge for your particular use case.

This Python code demonstrates image alignment using homography. It assumes you have pre-calculated corresponding points between two images. It computes the homography matrix using RANSAC, calculates the reprojection error, and warps the first image to align with the second. Finally, it displays the original and warped images for comparison.

import cv2
import numpy as np

# Load the two images (cv2.imread returns None if a file cannot be read)
image1 = cv2.imread('image1.jpg')
image2 = cv2.imread('image2.jpg')
if image1 is None or image2 is None:
    raise FileNotFoundError("Could not read image1.jpg or image2.jpg")

# Find corresponding points between the two images
# (You'll need to use a feature matching technique like SIFT or ORB)
# For this example, let's assume you have the points in pts1 and pts2,
# as float32 arrays of shape (N, 2) with at least 4 points each
pts1 = np.array([[...], [...], [...], [...]])  # Replace ... with actual point coordinates
pts2 = np.array([[...], [...], [...], [...]])

# 1. Find the homography matrix using RANSAC
H, mask = cv2.findHomography(pts1, pts2, cv2.RANSAC)
if H is None:
    raise RuntimeError("findHomography could not estimate a homography")

# 2. Check the reprojection error
reproj_pts = cv2.perspectiveTransform(pts1.reshape(-1, 1, 2), H).reshape(-1, 2)
errors = np.linalg.norm(pts2 - reproj_pts, axis=1)
mean_error = np.mean(errors)
print(f"Mean reprojection error: {mean_error}")

# 3. Examine the homography matrix (optional)
print(f"Homography matrix:\n{H}")

# 4. Warp image1 to the perspective of image2
warped_image = cv2.warpPerspective(image1, H, (image2.shape[1], image2.shape[0]))

# 5. Visualize the results
cv2.imshow("Image 1", image1)
cv2.imshow("Image 2", image2)
cv2.imshow("Warped Image", warped_image)
cv2.waitKey(0)
cv2.destroyAllWindows()

Explanation:

The code assumes you already have corresponding points (pts1, pts2) from the two images. You'll need to use a feature detection and matching technique like SIFT, ORB, etc., to find these points in a real application.

The cv2.findHomography function calculates the homography matrix H using the RANSAC method for robustness.

The reprojection error is calculated by projecting points from image1 to image2 using the homography and comparing them to the actual corresponding points in image2. A lower error indicates a better homography.

The code prints H so you can check for any unusual values (e.g., very large numbers) that might indicate issues.

The cv2.warpPerspective function warps image1 using the calculated homography to align it with image2. The original images and the warped image are displayed for visual inspection.

Remember: This is a basic example. You might need to adapt and add more sophisticated checks or logic depending on your specific application and the complexity of the images you are working with.

Point Correspondence:

Robust Estimation:

Tune the RANSAC parameters (ransacReprojThreshold, maxIters) based on your data and noise levels. A smaller reprojection threshold enforces stricter inlier criteria.

Error Analysis:
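When analysing errors, the mean alone can hide a handful of badly mapped points. The sketch below reports several statistics of a forward-plus-backward transfer error, computed with NumPy only. The function names and the choice of statistics are assumptions for illustration; the averaged forward and backward distance used here is one of several possible error definitions.

```python
import numpy as np

def project(H, pts):
    """Apply a 3x3 homography to an (N, 2) array of points."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])  # to homogeneous coords
    mapped = pts_h @ H.T
    return mapped[:, :2] / mapped[:, 2:3]             # back to Cartesian

def error_summary(H, pts1, pts2):
    """Summarise the per-point error, averaged over both mapping directions."""
    forward = np.linalg.norm(project(H, pts1) - pts2, axis=1)
    backward = np.linalg.norm(project(np.linalg.inv(H), pts2) - pts1, axis=1)
    sym = 0.5 * (forward + backward)
    return {
        "mean": float(sym.mean()),
        "median": float(np.median(sym)),
        "p95": float(np.percentile(sym, 95)),
        "max": float(sym.max()),
    }
```

A large gap between the median and the maximum suggests that a few correspondences are poorly explained by H, even when the mean looks acceptable.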

Homography Matrix Interpretation:
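One way to read the matrix, sketched below under stated assumptions, is to normalise it so that H[2, 2] equals 1 and then look at the determinant of its upper-left 2x2 block (scale and flips) and at the size of the perspective terms in the last row. The function name and every threshold here are hypothetical and depend heavily on image size and application.

```python
import numpy as np

def homography_looks_sane(H, min_det=0.01, max_det=100.0, max_persp=2e-3):
    """Heuristic plausibility check on a 3x3 homography (thresholds are guesses)."""
    if H is None:
        return False
    H = np.asarray(H, dtype=np.float64)
    if H.shape != (3, 3) or not np.all(np.isfinite(H)):
        return False
    if abs(H[2, 2]) < 1e-12:
        return False          # cannot normalise; the origin maps to infinity
    H = H / H[2, 2]

    # Determinant of the affine part: negative means a mirror flip,
    # near zero means the image collapses, very large means extreme zoom.
    det2 = np.linalg.det(H[:2, :2])
    if not (min_det < det2 < max_det):
        return False

    # Large perspective terms bend the image strongly across its width/height.
    if np.hypot(H[2, 0], H[2, 1]) > max_persp:
        return False
    return True
```

Passing this check does not prove that a homography is correct; it only filters out some mappings that are implausible for typical camera motion.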

Practical Considerations:

Beyond OpenCV:

This summary outlines key steps to enhance homography estimation accuracy using OpenCV's findHomography function:

| Step | Description | Code Example |
|---|---|---|
| 1. Ensure Point Correspondence | Crucially, input points to findHomography must be correctly matched between images. Incorrect ordering leads to meaningless results. | H, _ = cv2.findHomography(pts1, pts2) |
| 2. Use Robust Estimation | Employ robust methods like RANSAC (cv2.RANSAC) within findHomography to handle outliers effectively, compared to the default method. | H, mask = cv2.findHomography(pts1, pts2, cv2.RANSAC) |
| 3. Check Reprojection Error | After obtaining the homography, reproject points from one image to the other. Calculate the distance between reprojected and actual points. High average error signals a poor homography. | reproj_pts = cv2.perspectiveTransform(pts1.reshape(-1,1,2), H).reshape(-1,2); errors = np.linalg.norm(pts2 - reproj_pts, axis=1); mean_error = np.mean(errors) |
| 4. Examine the Homography Matrix | While not definitive, inspecting the matrix for unusually large values can sometimes reveal issues. | N/A |
| 5. Visualize the Results | Always visually verify the warped image or aligned features to ensure the homography makes sense within your application's context. | N/A |

Important Note: Even with these steps, achieving a perfect homography isn't guaranteed, especially in challenging scenarios. You might need to implement additional checks or leverage domain-specific knowledge for your specific use case.
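One such additional check, sketched below, is to map the four image corners through H and verify that they still form a convex quadrilateral with the original winding order. A twisted, flipped, or horizon-crossing result usually signals a bad homography. The function names are hypothetical, and the code assumes H is scaled so that H[2, 2] is positive, as is normally the case for the output of findHomography.

```python
import numpy as np

def warped_corners(H, width, height):
    """Map the image corners through H; return None if any lands at infinity."""
    corners = np.array([[0, 0], [width, 0], [width, height], [0, height]],
                       dtype=np.float64)
    pts_h = np.hstack([corners, np.ones((4, 1))])
    mapped = pts_h @ np.asarray(H, dtype=np.float64).T
    w = mapped[:, 2]
    if np.any(w <= 1e-12):
        return None  # a corner maps onto or beyond the horizon line
    return mapped[:, :2] / w[:, None]

def keeps_convex_orientation(H, width, height):
    """True if the warped image outline is convex and not mirrored."""
    quad = warped_corners(H, width, height)
    if quad is None:
        return False
    edges = np.roll(quad, -1, axis=0) - quad   # edge i: corner i -> corner i+1
    nxt = np.roll(edges, -1, axis=0)           # the edge that follows edge i
    cross = edges[:, 0] * nxt[:, 1] - edges[:, 1] * nxt[:, 0]
    # With this corner order, every turn has a positive cross product when the
    # outline is convex and keeps its original winding.
    return bool(np.all(cross > 0))
```

This test is cheap enough to run on every estimate, for example to reject a frame in a tracking loop before the warped image is used.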

In conclusion, achieving accurate homography estimation with OpenCV's findHomography function involves a combination of careful point correspondence, robust estimation techniques, and thorough error analysis. While the provided code offers a basic example, remember to adapt and expand upon it based on your specific application's needs. By understanding the factors influencing homography accuracy and following the outlined steps, you can significantly improve the performance of your computer vision applications that rely on image alignment and perspective transformations.

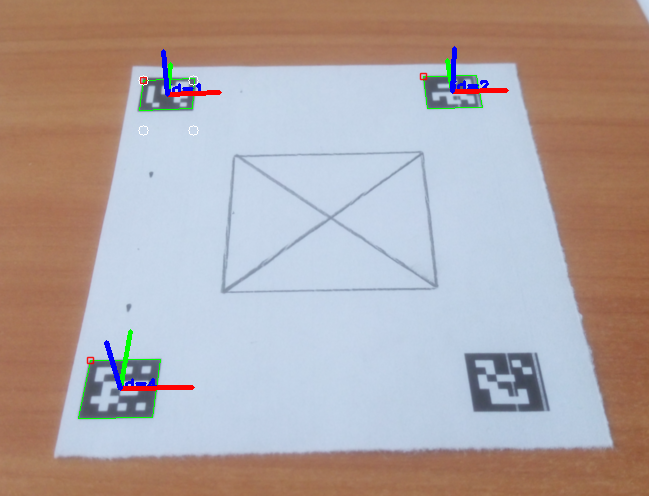
