Node.js Multi-Core Performance Tuning Guide

Learn how to leverage the power of multi-core machines for your Node.js applications to achieve optimal performance and scalability.

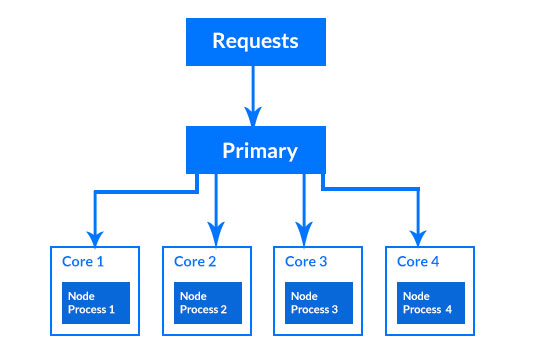

This article provides a comprehensive guide on how to scale Node.js applications across multiple cores using the cluster module. It begins by explaining the limitations of Node.js's single-threaded nature and the potential benefits of utilizing multi-core processors. The guide then walks you through setting up a project, installing dependencies, and creating the main script that leverages the cluster module to fork worker processes. Each step is accompanied by clear explanations and code examples. The article further discusses important considerations such as load balancing, worker management, and handling shared state among workers. By following this guide, developers can effectively optimize their Node.js applications for improved performance and scalability.

While Node.js excels in handling concurrent requests with its single-threaded event loop, it doesn't inherently leverage the full potential of multi-core processors. To maximize performance and utilize all available cores, we can employ the cluster module. Here's a step-by-step guide:

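1. Understanding the Problem:

Node.js runs your JavaScript on a single thread, so one process can keep at most one CPU core busy with application code. The cluster module lets a primary process fork several worker processes so that the remaining cores can be used as well.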
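To make the single-thread limitation concrete, here is a minimal sketch that measures how long a zero-delay timer is held up while a CPU-bound loop runs. The helper names `blockFor` and `measureTimerDelay` are made up for this illustration and are not part of Node.js.

```javascript
// Busy-wait for roughly `ms` milliseconds, standing in for CPU-bound work.
function blockFor(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // spin: nothing else can run on this thread in the meantime
  }
}

// Schedule a 0 ms timer, then block the thread. The promise resolves with
// the delay the timer actually experienced.
function measureTimerDelay(blockMs) {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();
    setTimeout(() => resolve(Date.now() - scheduledAt), 0);
    blockFor(blockMs);
  });
}

measureTimerDelay(200).then((delay) => {
  console.log(`A 0 ms timer fired after about ${delay} ms`);
});
```

While the loop spins, this process cannot serve any other request, however many cores the machine has. Running several processes is one way around that.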

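2. Setting Up the Project:

```bash
mkdir node-cluster-example
cd node-cluster-example
npm init -y
```

3. Installing Dependencies:

No external packages need to be installed: the cluster, http, and os modules used below are built into Node.js.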

4. Creating the Main Script (server.js):

```javascript
const cluster = require('cluster');
const http = require('http');
const numCPUs = require('os').cpus().length;

if (cluster.isPrimary) {
  console.log(`Primary ${process.pid} is running`);

  // Fork workers.
  for (let i = 0; i < numCPUs; i++) {
    cluster.fork();
  }

  cluster.on('exit', (worker, code, signal) => {
    console.log(`worker ${worker.process.pid} died`);
  });
} else {
  // Workers can share any TCP connection
  // In this case it is an HTTP server
  http.createServer((req, res) => {
    res.writeHead(200);
    res.end(`Hello from worker ${process.pid}\n`);
  }).listen(8000);

  console.log(`Worker ${process.pid} started`);
}
```

Explanation:

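- The script imports the cluster and http modules.
- numCPUs stores the number of CPU cores available.
- The cluster.isPrimary condition checks whether the current process is the primary process (called the master before Node.js 16, where the property was named cluster.isMaster).

5. Running the Application: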

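```bash
node server.js
```

Open http://localhost:8000 in your browser, and you'll see responses from different worker processes, demonstrating load balancing across cores. The cluster module distributes connections rather than individual requests, so a browser that reuses one keep-alive connection may keep reaching the same worker. Opening separate connections, for example with repeated curl calls, shows the rotation more clearly.

6. Additional Considerations:

- Load balancing: implement custom load balancing if the default distribution does not fit your workload.
- Worker management: monitor worker health and restart workers that exit.
- Shared state: workers do not share memory, so keep shared data in external storage.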
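Because each worker is a separate process with its own memory, a counter kept in a plain variable is counted per worker. External storage is the usual answer. For small amounts of data, another option is to let workers report to the primary over the built-in IPC channel. The following is a minimal sketch of that idea. The helper names (`trackRequestCounts`, `reportRequestServed`) and the `request-served` message type are hypothetical, and the demo uses a stand-in event emitter instead of real forked workers so that it runs on its own.

```javascript
const { EventEmitter } = require('events');

// Primary side: keep one authoritative tally, fed by messages from workers.
// `clusterLike` is anything that emits ('message', worker, msg), such as the
// built-in cluster module inside the primary process.
function trackRequestCounts(clusterLike) {
  const perWorker = new Map();
  clusterLike.on('message', (worker, msg) => {
    if (!msg || msg.type !== 'request-served') return; // ignore other messages
    perWorker.set(worker.id, (perWorker.get(worker.id) || 0) + 1);
  });
  return {
    total: () => [...perWorker.values()].reduce((sum, n) => sum + n, 0),
    byWorker: () => new Map(perWorker),
  };
}

// Worker side: call this from the request handler. process.send only exists
// when the process has an IPC channel, for example after cluster.fork().
function reportRequestServed() {
  if (typeof process.send === 'function') {
    process.send({ type: 'request-served' });
  }
}

// Demo with a stand-in emitter (an assumption made so nothing is forked).
const fakeCluster = new EventEmitter();
const counts = trackRequestCounts(fakeCluster);
fakeCluster.emit('message', { id: 1 }, { type: 'request-served' });
fakeCluster.emit('message', { id: 2 }, { type: 'request-served' });
fakeCluster.emit('message', { id: 1 }, { type: 'request-served' });
console.log(counts.total()); // 3
```

In a real application the primary would pass `require('cluster')` to `trackRequestCounts`, and each worker would call `reportRequestServed()` inside its HTTP handler. The tally lives only in the primary's memory, so it is lost when the primary restarts. Data that must survive restarts still belongs in external storage.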

By following these steps and understanding the core concepts, you can effectively scale your Node.js applications to utilize the full power of multi-core systems, leading to improved performance and responsiveness.

This Node.js code demonstrates how to scale an application across multiple CPU cores using the cluster module. The primary process forks worker processes, each of which handles HTTP requests concurrently. If a worker process dies, the primary process forks a replacement, which keeps all cores in use and keeps the service available when an individual worker crashes.

```javascript
const cluster = require('cluster');
const http = require('http');
const numCPUs = require('os').cpus().length;

if (cluster.isPrimary) {
  console.log(`Primary process ${process.pid} is running`);

  // Fork workers.
  for (let i = 0; i < numCPUs; i++) {
    cluster.fork();
  }

  cluster.on('exit', (worker, code, signal) => {
    console.log(`worker ${worker.process.pid} died`);

    // Restart the worker
    cluster.fork();
  });
} else {
  // Workers can share any TCP connection
  // In this case it is an HTTP server
  http.createServer((req, res) => {
    res.writeHead(200);
    res.end(`Hello from worker ${process.pid}\n`);
  }).listen(8000);

  console.log(`Worker ${process.pid} started`);
}
```

Explanation:

- The script imports cluster for managing worker processes and http for creating the server.
- numCPUs stores the number of available CPU cores using os.cpus().length.
- The cluster.isPrimary block executes only in the primary process.
- The primary process creates one worker per core with cluster.fork().
- The else block executes in each worker process.

Running the Application:

Save the code as server.js, then start it:

```bash
node server.js
```

Open http://localhost:8000 in your browser to see responses from different worker processes, demonstrating load balancing across cores.

Additional Considerations:
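Forking a replacement on every exit, as the example above does, can turn into a tight fork loop when workers crash immediately at startup (for instance because of a bad configuration). The following is a sketch of one possible guard, not a prescribed policy. The function name `superviseWorkers` and its options `maxRestarts`, `windowMs`, and `now` are assumptions made for this illustration. Only the `exit` event, `fork()`, and `worker.exitedAfterDisconnect` come from the cluster module.

```javascript
const { EventEmitter } = require('events');

// Attach a restart policy to anything that emits 'exit' and can fork(),
// such as the built-in cluster module in the primary process.
function superviseWorkers(clusterLike, options = {}) {
  const { maxRestarts = 5, windowMs = 60000, now = Date.now } = options;
  const recentCrashes = []; // timestamps of unexpected exits inside the window

  clusterLike.on('exit', (worker, code, signal) => {
    // exitedAfterDisconnect is true when the exit followed worker.disconnect()
    // or worker.kill(): a deliberate shutdown that needs no replacement.
    if (worker.exitedAfterDisconnect) return;

    const t = now();
    recentCrashes.push(t);
    // Drop crashes that are older than the time window.
    while (t - recentCrashes[0] > windowMs) recentCrashes.shift();

    if (recentCrashes.length > maxRestarts) {
      console.error(`More than ${maxRestarts} crashes in ${windowMs} ms; not restarting`);
      return;
    }
    clusterLike.fork();
  });
}

// Demo with a stand-in object so the sketch runs without forking processes.
const fakeCluster = new EventEmitter();
let forks = 0;
fakeCluster.fork = () => { forks += 1; };
superviseWorkers(fakeCluster, { maxRestarts: 2, windowMs: 1000 });
for (let i = 0; i < 4; i++) {
  fakeCluster.emit('exit', { exitedAfterDisconnect: false }, 1, null);
}
console.log(`replacement forks: ${forks}`); // replacement forks: 2
```

In a real primary process this would be wired up as `superviseWorkers(require('cluster'))` after the initial fork loop, replacing the unconditional `cluster.fork()` in the `exit` handler. Whether to stop restarting, back off, or exit the primary so an external supervisor can take over is a design choice that depends on the deployment.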

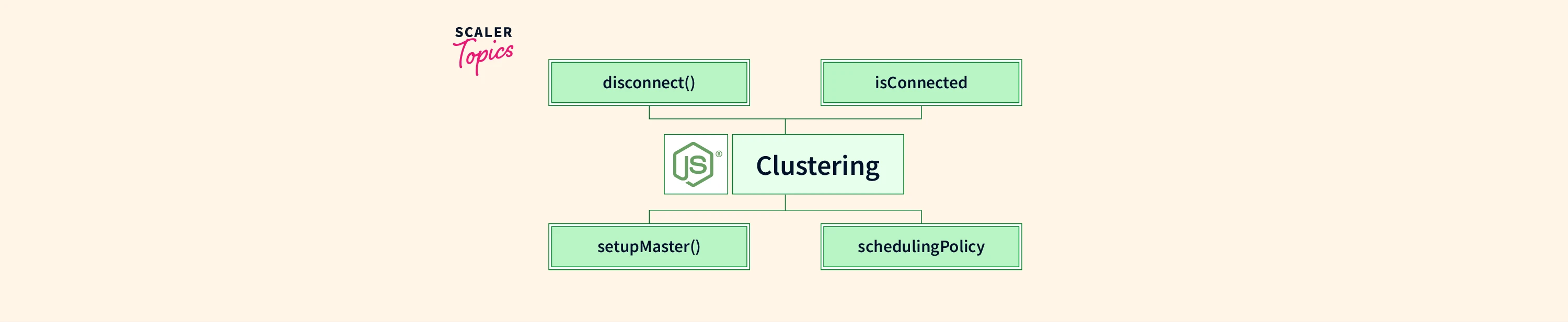

Advanced Techniques:
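For CPU-bound tasks inside a single process, the built-in worker_threads module can move a computation off the main thread. The sketch below is only an illustration under stated assumptions: the function name `sumSquaresInWorker` and the sum-of-squares workload are invented for the example, and the worker source is passed inline with `eval: true` purely to keep the example in one file.

```javascript
const { Worker } = require('worker_threads');

// Source code executed inside the worker thread.
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  // Deliberately simple CPU-bound work: sum of squares from 1 to n.
  let sum = 0;
  for (let i = 1; i <= workerData.n; i++) sum += i * i;
  parentPort.postMessage(sum);
`;

// Run the computation in a worker thread and resolve with its result.
function sumSquaresInWorker(n) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: { n } });
    worker.once('message', resolve);
    worker.once('error', reject);
    worker.once('exit', (code) => {
      if (code !== 0) reject(new Error(`worker exited with code ${code}`));
    });
  });
}

sumSquaresInWorker(1000).then((sum) => {
  console.log(`sum of squares: ${sum}`);
});
```

Unlike cluster workers, worker threads live in the same process, so they suit offloading individual heavy computations rather than spreading incoming connections across cores.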

Libraries such as workerpool or piscina offer more control over pools of workers, allowing for task queues, priorities, and worker lifecycle management.

Optimizations:
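One small optimization is to choose the number of workers deliberately instead of always forking one per reported core. The sketch below assumes a hypothetical `WEB_CONCURRENCY` environment variable as an override (the name is a convention on some hosting platforms, not something Node.js reads itself). Where available, it uses `os.availableParallelism()`, which exists only in newer Node.js releases (18.14 and later), and falls back to `os.cpus().length` otherwise.

```javascript
const os = require('os');

// Decide how many workers to fork. `env` defaults to the real environment
// but can be replaced with a plain object for testing.
function chooseWorkerCount(env = process.env) {
  // An explicit, valid override wins (WEB_CONCURRENCY is an assumed name).
  const requested = Number.parseInt(env.WEB_CONCURRENCY, 10);
  if (Number.isInteger(requested) && requested > 0) {
    return requested;
  }

  // Prefer availableParallelism() when this Node.js version provides it.
  const detected = typeof os.availableParallelism === 'function'
    ? os.availableParallelism()
    : os.cpus().length;

  // Always start at least one worker, even if detection reports zero.
  return Math.max(1, detected);
}

console.log(`Would fork ${chooseWorkerCount()} worker(s)`);
```

The result can replace `numCPUs` in the fork loop. Running fewer workers than cores can make sense when other processes share the machine or when memory per worker is the tighter limit.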

Security Considerations:

Alternatives to the Cluster Module:

The child_process module allows spawning child processes for specific tasks, but it requires more manual management than the cluster module.

Choosing the Right Approach:

The best approach for scaling Node.js depends on your specific application requirements, complexity, and resource constraints.

Remember, scaling is an iterative process. Start with a basic cluster setup and gradually optimize and refine your approach as your application grows and evolves.

| Step | Description |
|---|---|
| Understanding the Problem | Node.js is single-threaded, limiting CPU utilization. The cluster module enables multi-core processing for better performance. |
| Setting Up | Create the project directory. Initialize it with npm init -y. |
| Dependencies | No external packages are needed; the cluster, http, and os modules are built in. |
| Creating the Main Script | Import cluster and http, and get the CPU core count. The primary process forks worker processes based on the core count. Workers create HTTP servers and respond to requests. |
| Running the Application | Use node server.js to start. Access http://localhost:8000 to see load balancing in action. |
| Additional Considerations | Implement custom load balancing if needed. Manage worker health and restarts. Use external storage for shared data across workers. |

By leveraging the cluster module, developers can unlock the true potential of Node.js in multi-core environments. This guide has provided a solid foundation for understanding and implementing this technique, covering key aspects from setup to execution. Remember, scaling is an ongoing journey, and continuous optimization is crucial as your application evolves. Explore advanced techniques, optimize resource utilization, and prioritize security to ensure your Node.js applications thrive in the face of increasing demands.
