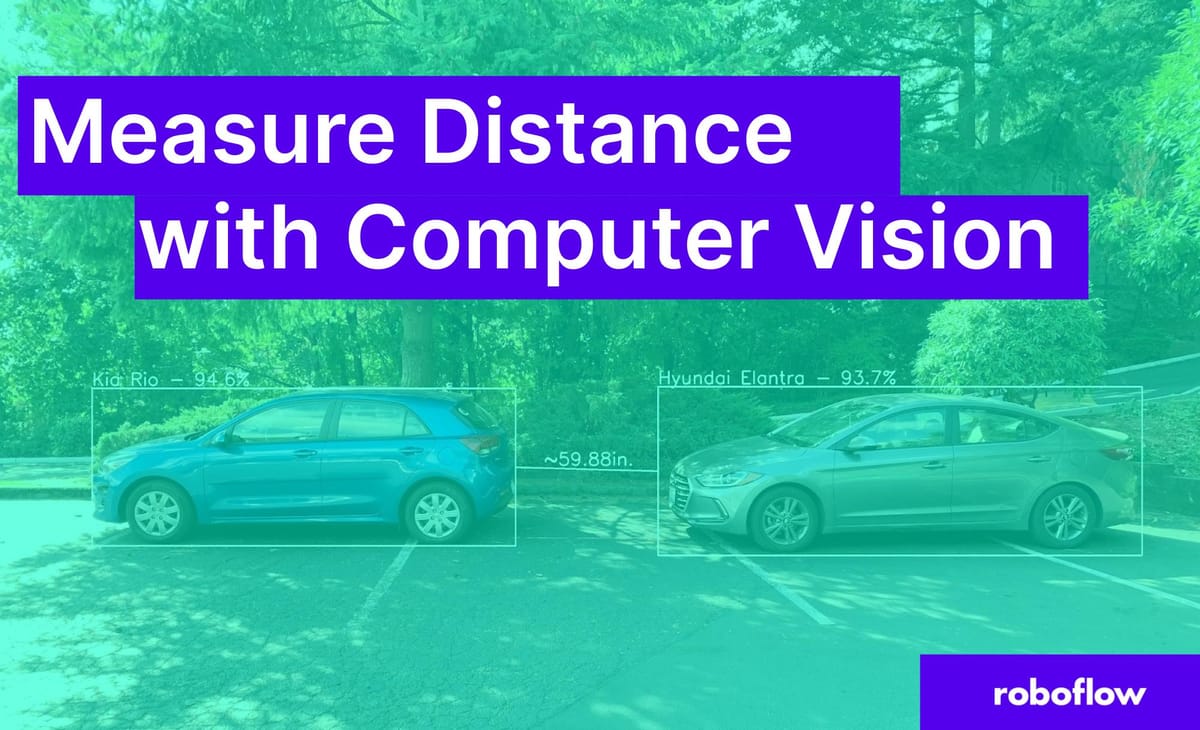

Measure Distance in Videos: Techniques and Tools

Learn how to use computer vision techniques and image processing to calculate the distance of objects from a camera in videos.


Estimating distances between objects in videos is a common requirement in computer vision applications. This process generally involves a series of steps that combine image processing, computer vision techniques, and geometric calculations. Here's a breakdown of the typical workflow:

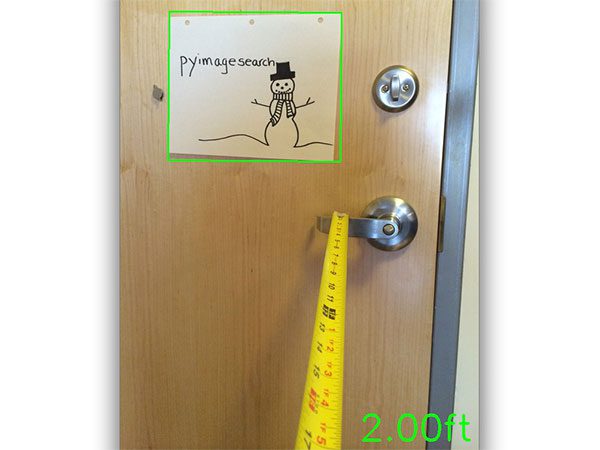

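Calibrate your camera: This step establishes the relationship between pixel measurements and real-world distances. Full calibration with OpenCV's cv2.calibrateCamera recovers the camera matrix (focal lengths and principal point, in pixels) and the lens distortion coefficients from several images of a pattern with known geometry, typically a chessboard. A simpler approximation is to capture images of a known-size object at known distances and compare its real size with its size in pixels.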
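The known-object approximation can be sketched in plain Python. Under the pinhole model, an object of real width W at distance D appears P pixels wide, with P = F × W / D, so each measurement gives one estimate F = P × D / W of the focal length in pixels, and averaging several distances reduces measurement noise. This is a minimal sketch under that assumption; estimate_focal_length and the sample measurements are hypothetical, and the result does not include the lens distortion coefficients that full calibration provides.

```python
def estimate_focal_length(real_width, measurements):
    """Estimate the focal length in pixels from (distance, pixel_width) pairs.

    Pinhole model: pixel_width = focal_length * real_width / distance,
    so each pair gives focal_length = pixel_width * distance / real_width.
    """
    if not measurements:
        raise ValueError("need at least one (distance, pixel_width) pair")
    estimates = [pixel_width * distance / real_width for distance, pixel_width in measurements]
    return sum(estimates) / len(estimates)


# Hypothetical measurements: a 0.20 m wide card photographed at 0.5 m, 1.0 m and 2.0 m
samples = [(0.5, 402.0), (1.0, 198.0), (2.0, 100.0)]
focal_px = estimate_focal_length(0.20, samples)
print(f"Estimated focal length: {focal_px:.1f} px")
```

Distances and real width must share one unit (meters here); the focal length comes out in pixels, which is the unit the later conversion formulas expect.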

```python
# Example (using OpenCV in Python)
import cv2

# Camera calibration code (refer to OpenCV documentation)
# ...

# Get camera matrix and distortion coefficients
ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(...)
```

Place a reference object: Introduce an object of known dimensions into your video scene. This object will serve as a reference point for distance calculations.
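With a reference object of known size in the scene, the simplest conversion is a meters-per-pixel scale. This is a minimal sketch that assumes the objects being measured lie at roughly the same depth as the reference object and that the camera views that plane head-on; meters_per_pixel, pixel_to_real_distance, and the sample numbers are hypothetical.

```python
import math


def meters_per_pixel(reference_real_width, reference_pixel_width):
    """Scale factor derived from a reference object of known width."""
    if reference_pixel_width <= 0:
        raise ValueError("reference_pixel_width must be positive")
    return reference_real_width / reference_pixel_width


def pixel_to_real_distance(p1, p2, scale):
    """Convert the pixel distance between two image points into meters.

    Only valid for points at (roughly) the same depth as the reference
    object, with the camera looking straight at that plane.
    """
    return math.dist(p1, p2) * scale


# Hypothetical example: a 0.30 m wide reference board spans 150 pixels
scale = meters_per_pixel(0.30, 150)  # meters per pixel
gap = pixel_to_real_distance((100, 200), (400, 600), scale)  # points 500 px apart
print(f"{gap:.2f} m")
```

Because the scale changes with depth, it has to be recomputed whenever the objects move toward or away from the camera; the focal-length formula in the conversion step handles that case.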

Detect and track the object: Utilize computer vision techniques like object detection or feature tracking to identify and follow the object's movement across video frames.
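Tracking means keeping the same identity for an object from one frame to the next. The sketch below shows one simple approach, a greedy nearest-centroid tracker that matches each existing track to the closest new detection. The CentroidTracker class and its max_jump threshold are hypothetical illustrations, not OpenCV's tracking API.

```python
import math


class CentroidTracker:
    """Greedy nearest-centroid tracker (a minimal, hypothetical sketch)."""

    def __init__(self, max_jump=50.0):
        self.max_jump = max_jump  # largest centroid move accepted between frames, in pixels (assumed)
        self.tracks = {}          # track_id -> last known centroid (cx, cy)
        self.next_id = 0

    def update(self, boxes):
        """Match this frame's (x, y, w, h) boxes to existing tracks.

        Returns {track_id: centroid}. Unmatched detections start new tracks;
        tracks with no nearby detection are dropped.
        """
        centers = [(x + w / 2, y + h / 2) for x, y, w, h in boxes]
        unused = set(range(len(centers)))
        updated = {}
        for track_id, prev in self.tracks.items():
            # Closest still-unassigned detection to this track's last position
            best = min(unused, key=lambda i: math.dist(prev, centers[i]), default=None)
            if best is not None and math.dist(prev, centers[best]) <= self.max_jump:
                updated[track_id] = centers[best]
                unused.remove(best)
        for i in sorted(unused):  # leftover detections become new tracks
            updated[self.next_id] = centers[i]
            self.next_id += 1
        self.tracks = updated
        return updated


tracker = CentroidTracker(max_jump=50.0)
print(tracker.update([(100, 100, 20, 40), (300, 200, 20, 40)]))
print(tracker.update([(305, 205, 20, 40), (104, 98, 20, 40)]))
```

Greedy matching can swap identities when objects cross paths; more robust trackers combine motion prediction with an optimal assignment step, for example a Kalman filter with Hungarian matching.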

```python
# Example (using OpenCV's DNN module with YOLOv3 for object detection)
import cv2

net = cv2.dnn.readNet("yolov3.weights", "yolov3.cfg")

# Object classes (e.g., "person", "car"); load the full class list that matches your model
classes = ["person", "car"]

# ... (Object detection and bounding box extraction)
```

Measure pixel distance: In each frame, calculate the distance in pixels between the reference object and the object of interest.

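```python
# Example (calculating Euclidean distance)
import numpy as np

point1 = (120, 340)  # Coordinates of reference object (example values)
point2 = (420, 300)  # Coordinates of object of interest (example values)

distance_pixels = np.sqrt((point2[0] - point1[0])**2 + (point2[1] - point1[1])**2)
```

Convert pixel measurements to real-world distance: Apply the calibration information obtained in step 1 to convert pixel measurements to a real-world metric (e.g., meters, feet). For the distance from the camera to an object of known height, the pinhole camera model gives distance = real height × focal length / height in pixels, with the focal length expressed in pixels. A pixel distance between two objects can only be converted with a scale that is valid at their depth, such as the meters-per-pixel ratio of a reference object at the same distance from the camera.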

```python
# Example (using camera focal length and object size)
focal_length = 1000.0       # Focal length in pixels, from camera calibration (mtx[0, 0]); example value
real_object_height = 0.1    # Known height of the object in meters; example value
object_height_pixels = 128  # Height of the object's bounding box in the frame; example value

# Assuming the object is standing vertically and faces the camera
distance_meters = (real_object_height * focal_length) / object_height_pixels
```

Remember that this is a simplified explanation. Factors like camera angle, lens distortion, and object orientation can influence the accuracy of distance measurements. Advanced techniques and libraries like OpenCV provide tools to address these complexities.
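Lens distortion is one of the factors listed above. To illustrate what correcting it involves, the sketch below inverts OpenCV's radial distortion model for a single pixel by fixed-point iteration. It assumes only the k1 and k2 radial terms (tangential p1, p2 and k3 are ignored), and the function names and coefficients are hypothetical. With real data, k1 and k2 are the first two entries of the dist array returned by cv2.calibrateCamera, and cv2.undistortPoints or cv2.undistort perform the full correction.

```python
def distort_point(u, v, fx, fy, cx, cy, k1, k2):
    """Apply radial distortion (k1, k2 only) to an undistorted pixel."""
    x, y = (u - cx) / fx, (v - cy) / fy  # normalized camera coordinates
    r2 = x * x + y * y
    factor = 1 + k1 * r2 + k2 * r2 * r2
    return fx * x * factor + cx, fy * y * factor + cy


def undistort_point(u, v, fx, fy, cx, cy, k1, k2, iterations=20):
    """Remove radial distortion (k1, k2 only) from a distorted pixel.

    Solves x_d = x * (1 + k1*r^2 + k2*r^4) for x by fixed-point iteration,
    which converges for moderate distortion.
    """
    xd, yd = (u - cx) / fx, (v - cy) / fy
    x, y = xd, yd  # initial guess: the distorted coordinates
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1 + k1 * r2 + k2 * r2 * r2
        x, y = xd / factor, yd / factor
    return fx * x + cx, fy * y + cy


# Hypothetical camera: 1000 px focal length, principal point (640, 360), barrel distortion
u_d, v_d = distort_point(1100, 650, 1000, 1000, 640, 360, k1=-0.2, k2=0.05)
u, v = undistort_point(u_d, v_d, 1000, 1000, 640, 360, k1=-0.2, k2=0.05)
print(f"distorted ({u_d:.1f}, {v_d:.1f}) -> undistorted ({u:.1f}, {v:.1f})")
```

Correcting the bounding-box corners this way before measuring pixel heights or distances matters most near the image edges, where radial distortion is strongest.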

This Python code demonstrates distance measurement using a single camera and computer vision. It simulates camera calibration data and object detection. For each frame, it estimates the distance from the camera to the detected object with the pinhole camera model, using the camera's focal length, the known real-world height of the reference object, and the height of the detected bounding box in pixels. It also measures the pixel distance between a fixed reference point in the frame and the detected object and scales it to meters at the object's depth. The code provides a basic framework and needs to be customized with actual camera calibration data and a suitable object detection method.

```python
import cv2
import numpy as np

# --- Step 1: Camera Calibration ---
# (Replace with your actual calibration code and parameters)
# ...
# ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(...)

# Simulated camera matrix and focal length (for demonstration)
mtx = np.array([[1000, 0, 640], [0, 1000, 360], [0, 0, 1]])
focal_length = mtx[0, 0]  # fx, in pixels

# --- Step 2: Reference Object ---
reference_object_height = 0.1  # Height in meters (e.g., 10 cm)

# --- Step 3: Object Detection and Tracking ---
# (Replace with your chosen object detection method; uncomment once the YOLOv3 files are available)
# net = cv2.dnn.readNet("yolov3.weights", "yolov3.cfg")
classes = ["person"]  # Example class


def detect_object(frame):
    # ... (Object detection implementation using 'net' and 'classes')
    # For this example, we simulate a detection of the reference object
    return (320, 240, 64, 128)  # (x, y, w, h) of detected object


# --- Main Loop ---
video_capture = cv2.VideoCapture(0)  # Use 0 for webcam, or a video file path

while True:
    ret, frame = video_capture.read()
    if not ret:
        break

    # --- Step 3: Detect and Track ---
    x, y, w, h = detect_object(frame)
    cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)

    # --- Step 4: Measure Pixel Distance ---
    reference_point = (frame.shape[1] // 2, frame.shape[0] - 100)  # Bottom center of the frame
    object_point = (x + w // 2, y + h)  # Bottom center of the bounding box
    distance_pixels = np.sqrt(((object_point[0] - reference_point[0])**2) +
                              ((object_point[1] - reference_point[1])**2))

    # --- Step 5: Convert to Real-World Distance ---
    # Pinhole model: distance = real height * focal length / height in pixels.
    # Assumes the detected object is the reference object, standing upright.
    distance_meters = (reference_object_height * focal_length) / h
    # Pixel offset scaled to meters in the plane at the object's depth (approximate)
    offset_meters = distance_pixels * distance_meters / focal_length

    # --- Display Results ---
    cv2.circle(frame, reference_point, 5, (0, 0, 255), -1)  # Reference point
    cv2.line(frame, reference_point, object_point, (255, 0, 0), 2)
    cv2.putText(frame, f"Distance: {distance_meters:.2f} meters", (50, 50),
                cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2)
    cv2.putText(frame, f"Offset: {offset_meters:.2f} meters", (50, 90),
                cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2)
    cv2.imshow("Distance Measurement", frame)

    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

video_capture.release()
cv2.destroyAllWindows()
```

Explanation:

Camera Calibration:

The code uses simulated values (mtx, focal_length) for demonstration. You'll need to replace them with your actual camera calibration results obtained using cv2.calibrateCamera.

Reference Object:

Set reference_object_height to the real height of your reference object (e.g., 0.1 meters for a 10 cm tall object).

Object Detection and Tracking:

The detect_object function is a placeholder that returns a fixed, simulated bounding box. You'll need to implement your object detection logic here using a method like YOLO, Haar Cascades, or others. The function should return the bounding box coordinates (x, y, w, h) of the detected object.

Pixel Distance Calculation:

The code computes the Euclidean distance in pixels between a fixed reference point near the bottom center of the frame and the bottom center of the detected bounding box.

Real-World Distance Conversion:

The distance from the camera is reference_object_height × focal_length / h, where h is the bounding-box height in pixels. The pixel distance from the previous step is then multiplied by distance_meters / focal_length to express it in meters at the object's depth.

To Run:

1. Install the required libraries (pip install opencv-python numpy).
2. Replace the simulated calibration values with your results from cv2.calibrateCamera (refer to the OpenCV documentation).
3. Implement your object detection logic in the detect_object function.

Remember that this is a basic example. For more accurate results, consider factors like camera angle, lens distortion correction, and object orientation.
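For the detect_object placeholder, the sketch below turns raw YOLOv3 output rows into the (x, y, w, h) boxes the function should return. It assumes the darknet YOLOv3 layout as read through cv2.dnn, where each row holds center x, center y, width, and height normalized to [0, 1], then an objectness score, then one score per class. It uses the best class score as the confidence. yolo_rows_to_boxes is a hypothetical helper name, and plain lists stand in for the NumPy arrays that net.forward returns (the function works on either).

```python
def yolo_rows_to_boxes(rows, frame_width, frame_height, conf_threshold=0.5):
    """Convert YOLOv3-style output rows into pixel boxes.

    Each row is assumed to be [cx, cy, w, h, objectness, score_0, score_1, ...]
    with box values normalized to [0, 1]. Returns a list of
    ((x, y, w, h), class_id, confidence) for rows above the threshold.
    """
    boxes = []
    for row in rows:
        scores = row[5:]
        class_id = max(range(len(scores)), key=lambda i: scores[i])
        confidence = scores[class_id]
        if confidence < conf_threshold:
            continue
        # Scale the normalized center and size to pixels
        cx, cy = row[0] * frame_width, row[1] * frame_height
        w, h = row[2] * frame_width, row[3] * frame_height
        # Convert the center to the top-left corner expected by cv2.rectangle
        x, y = int(cx - w / 2), int(cy - h / 2)
        boxes.append(((x, y, int(w), int(h)), class_id, float(confidence)))
    return boxes


# Hypothetical rows for a two-class model: the first passes the threshold, the second does not
rows = [
    [0.5, 0.5, 0.1, 0.2, 0.9, 0.05, 0.8],
    [0.2, 0.2, 0.1, 0.1, 0.9, 0.3, 0.1],
]
print(yolo_rows_to_boxes(rows, 640, 480))
```

Overlapping boxes for the same object can then be pruned with cv2.dnn.NMSBoxes before detect_object picks the box it returns.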


This article outlines a 5-step process for measuring the distance between objects in a video using computer vision techniques:

| Step | Description | Key Concepts |
|---|---|---|
| 1. Camera Calibration | Establish the relationship between pixels and real-world units. | Camera matrix, distortion coefficients, OpenCV's calibrateCamera function |
| 2. Reference Object Placement | Introduce an object of known size into the video scene. | Serves as a baseline for distance calculations. |
| 3. Object Detection & Tracking | Identify and follow the movement of both the reference object and the object of interest. | Object detection algorithms (e.g., YOLO), feature tracking, OpenCV's object detection modules. |
| 4. Pixel Distance Measurement | Calculate the distance in pixels between the reference object and the object of interest in each frame. | Euclidean distance formula. |
| 5. Pixel-to-Real-World Conversion | Use the camera calibration information and potentially the known size of the reference object to convert pixel distance to real-world units (e.g., meters). | Camera focal length, object height. |
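Step 5 rests on similar triangles in the pinhole camera model: an object of real height H at distance Z from the camera appears h pixels tall when the focal length is f pixels. Plugging in the simulated values from the full example (f = 1000 px from the simulated camera matrix, H = 0.1 m, and a 128-pixel-tall detection) gives a worked distance. The last relation converts a pixel separation Δp between two points at the same depth Z into meters; it is an approximation that ignores lens distortion.

```latex
\frac{h}{f} = \frac{H}{Z}
\;\Longrightarrow\;
Z = \frac{f\,H}{h}
  = \frac{1000\ \text{px} \times 0.1\ \text{m}}{128\ \text{px}}
  \approx 0.78\ \text{m},
\qquad
\Delta X \approx \frac{\Delta p \, Z}{f}
```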


This summary provides a high-level overview of the process; the code examples and detailed explanations appear in the sections above.

By accurately calibrating your camera, selecting a suitable reference object, and leveraging computer vision techniques for object detection and tracking, you can measure distances in videos with reasonable accuracy. This approach finds applications in various fields, including robotics, sports analysis, and surveillance. However, it's essential to consider factors influencing accuracy and explore advanced techniques for improved precision. Libraries like OpenCV provide powerful tools for camera calibration, object detection, and 3D reconstruction, enabling you to develop robust distance measurement solutions for diverse applications.
