Kubernetes Endpoints Explained: A Simple Guide

Learn what a Kubernetes endpoint is, how it connects services to pods, and why it's essential for your containerized applications.

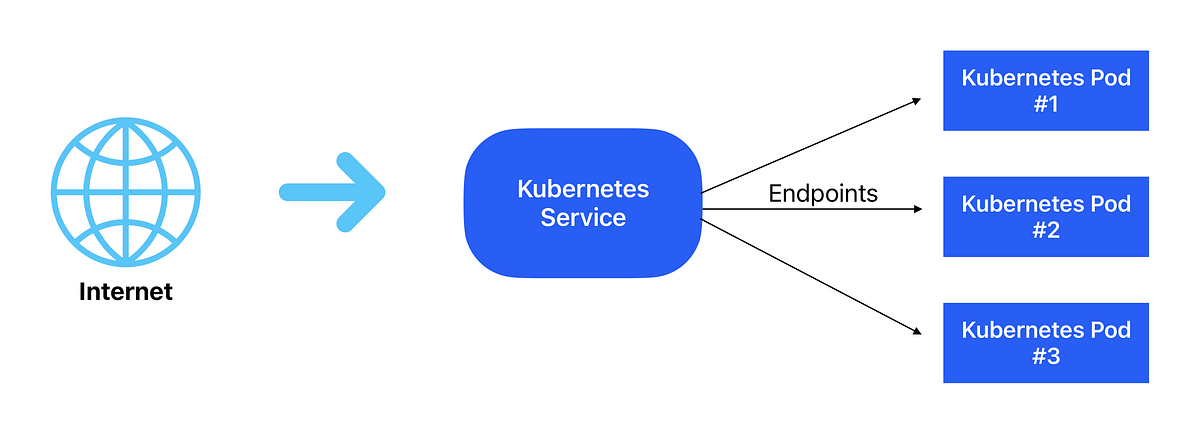

In Kubernetes, an Endpoint acts as a bridge between Services and Pods. Think of a Service as a fixed address for your application, and Pods as the actual instances running your code. Endpoints maintain a list of IP addresses and ports corresponding to healthy Pods that belong to a Service. When a Service needs to send traffic to a Pod, it consults its associated Endpoints to determine the correct destinations. This dynamic mapping ensures that even if Pods are created or destroyed (e.g., during scaling or updates), the Service remains accessible. For larger deployments, Kubernetes introduced EndpointSlices, which divide Endpoint information into smaller chunks. This improves scalability and performance by allowing for more efficient updates and distribution of Endpoint data across the cluster.

In Kubernetes, an Endpoint acts as a bridge between Services and Pods.

Think of a Service as a fixed address for your application, and Pods as the actual instances running your code.

kubectl get svc

kubectl get pods

Endpoints maintain a list of IP addresses and ports corresponding to healthy Pods that belong to a Service.

kubectl describe svc <your-service-name>

When a Service needs to send traffic to a Pod, it consults its associated Endpoints to determine the correct destinations.

This dynamic mapping ensures that even if Pods are created or destroyed (e.g., during scaling or updates), the Service remains accessible.

kubectl get endpoints
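To make that list concrete, here is a minimal sketch of what an Endpoints object might look like when viewed with kubectl get endpoints <name> -o yaml. The Service name my-app-service, the Pod IPs, and the port are illustrative assumptions, not output from a real cluster; real objects carry additional metadata.

```yaml
# Illustrative Endpoints object (all values are assumptions, not real cluster output).
apiVersion: v1
kind: Endpoints
metadata:
  name: my-app-service        # an Endpoints object shares the name of its Service
subsets:
  - addresses:                # one entry per ready Pod behind the Service
      - ip: 10.244.1.5        # hypothetical Pod IP
      - ip: 10.244.2.7        # hypothetical Pod IP
    ports:
      - port: 8080            # the port the Pods listen on
        protocol: TCP
```

When a Pod is deleted or stops being ready, its address drops out of this list, which is how the Service keeps pointing only at usable backends.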

For larger deployments, Kubernetes introduced EndpointSlices, which divide Endpoint information into smaller chunks.

This improves scalability and performance by allowing for more efficient updates and distribution of Endpoint data across the cluster.

kubectl get endpointslices
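For comparison, the sketch below shows roughly what a single EndpointSlice might contain. The slice name, Service name, and Pod IPs are hypothetical; the field layout follows the discovery.k8s.io/v1 API, but treat this as an illustration rather than real cluster output.

```yaml
# Illustrative EndpointSlice (all values are assumptions, not real cluster output).
apiVersion: discovery.k8s.io/v1
kind: EndpointSlice
metadata:
  name: my-app-service-abc12                      # hypothetical generated name
  labels:
    kubernetes.io/service-name: my-app-service    # links the slice to its Service
addressType: IPv4
ports:
  - protocol: TCP
    port: 8080
endpoints:
  - addresses:
      - "10.244.1.5"          # hypothetical Pod IP
    conditions:
      ready: true             # the Pod is ready to receive traffic
  - addresses:
      - "10.244.2.7"          # hypothetical Pod IP
    conditions:
      ready: true
```

A Service with many backends is spread over several such slices (by default up to 100 endpoints each), so a change to one Pod only requires updating the slice that contains it.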

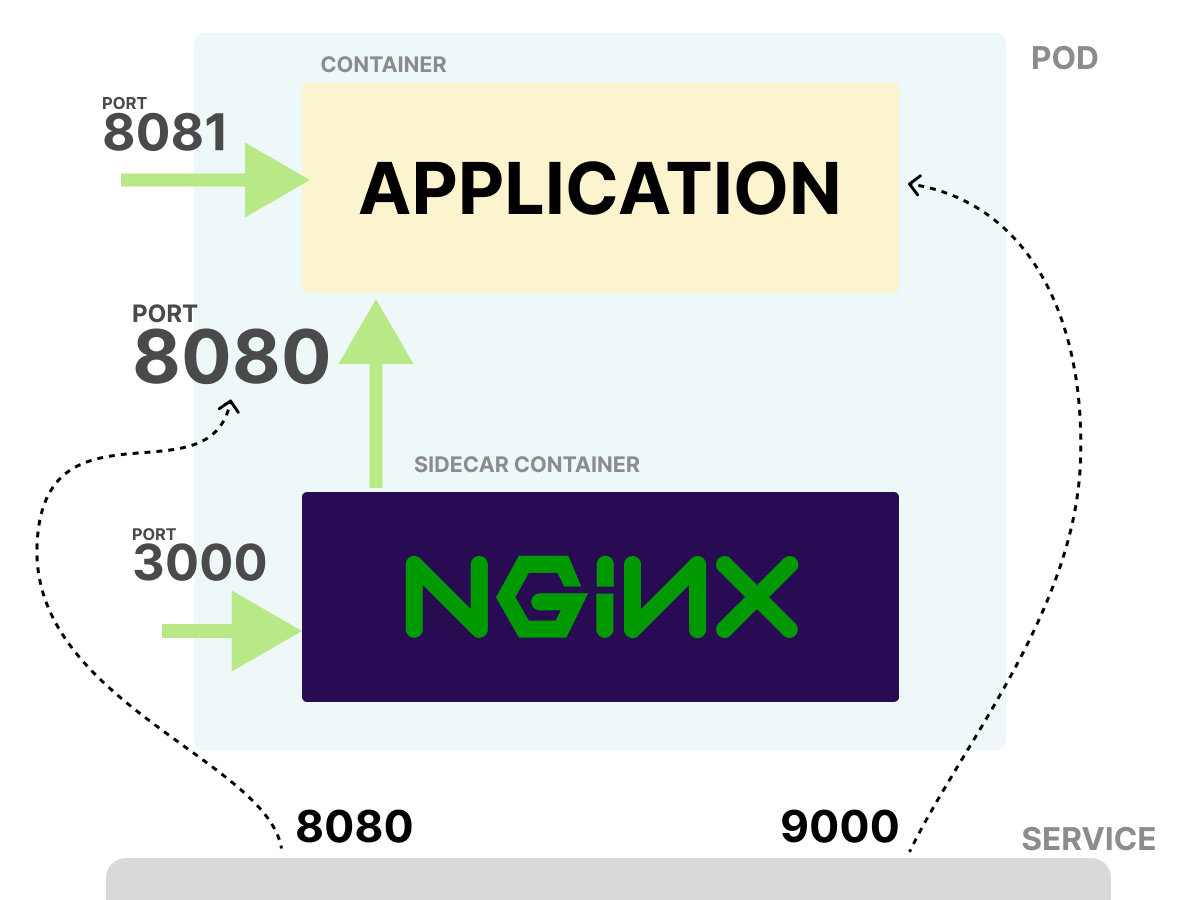

The provided YAML code defines a Kubernetes deployment and service. The deployment creates three pods running a specified application, each labeled with "app: my-app". The service exposes these pods externally using a load balancer, mapping port 80 on the service to port 8080 on the pods. Kubernetes automatically manages endpoints, which store the IP addresses and ports of the healthy pods, ensuring traffic directed to the service is routed appropriately. This setup ensures application accessibility even with pod changes.

The following example YAML configurations illustrate how Services, Pods, and Endpoints work together:

1. Deployment (Creating Pods):

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-app-deployment
    spec:
      replicas: 3 # Create 3 replica Pods
      selector:
        matchLabels:
          app: my-app
      template:
        metadata:
          labels:
            app: my-app
        spec:
          containers:
            - name: my-app-container
              image: <your-app-image>
              ports:
                - containerPort: 8080 # The port your app listens on

2. Service (Exposing the App):

    apiVersion: v1
    kind: Service
    metadata:
      name: my-app-service
    spec:
      selector:
        app: my-app # Target Pods with this label
      ports:
        - protocol: TCP
          port: 80 # Service port (external traffic)
          targetPort: 8080 # Pod port (internal traffic)
      type: LoadBalancer # Expose using a Load Balancer (other options: ClusterIP, NodePort)

Explanation:

The Deployment creates three Pods labeled app: my-app, and the Service is named my-app-service. In the Service definition:

- selector: It uses the label app: my-app to target the Pods created by the Deployment.
- ports: It maps port 80 on the Service to port 8080 on the Pods.
- type: LoadBalancer: This will provision a Load Balancer (if your cloud provider supports it) to expose the Service externally.

How it Works:

Kubernetes automatically creates and updates the Endpoints for the Service, listing the IP addresses and ports of the ready Pods that match the selector (app: my-app).

Endpoints and EndpointSlices:

- Run kubectl describe svc my-app-service to see the Endpoints associated with the Service.
- To list the EndpointSlices, run kubectl get endpointslices.

Important:

- Replace <your-app-image> with the actual Docker image of your application.
- type: LoadBalancer might require specific configurations based on your cloud provider.

This example demonstrates the basic relationship between Services, Pods, and Endpoints. Kubernetes handles the dynamic mapping and updates behind the scenes, ensuring that your application remains accessible even with Pod changes.
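The text refers to "healthy" Pods. In practice, a Pod is listed as a ready endpoint only while it is Ready, and a readiness probe is one common way to control that. The fragment below is a sketch of how the container section of the Deployment above could be extended; the /healthz path and the timing values are assumptions for illustration, not part of the original example.

```yaml
# Sketch: container section of the Deployment with a readiness probe added.
# The probe path and timings are hypothetical; adjust them to your application.
containers:
  - name: my-app-container
    image: <your-app-image>
    ports:
      - containerPort: 8080
    readinessProbe:
      httpGet:
        path: /healthz          # hypothetical health-check path served by the app
        port: 8080
      initialDelaySeconds: 5    # wait before the first check
      periodSeconds: 10         # check every 10 seconds
```

While the probe fails, the Pod keeps running but is not treated as a ready backend, so the Service stops sending it traffic until the probe succeeds again.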

Core Functionality:

Beyond the Basics:

EndpointSlices for Scalability:

Troubleshooting:

- kubectl describe svc <service-name>: This command is your primary tool for inspecting Endpoints. Its Endpoints field lists the IP address and port of each Pod currently backing the Service. If that field is empty, it usually means no ready Pod matches the Service's selector.

In summary: Endpoints and EndpointSlices are essential components of Kubernetes networking. They provide a dynamic and scalable way to connect Services to Pods, ensuring that your applications remain available and resilient.

| Concept | Description | Kubectl Command |
|---|---|---|
| Service | A fixed address for your application, providing a stable entry point for accessing Pods. | kubectl get svc |
| Pod | An instance of your application running in a container. | kubectl get pods |
| Endpoint | A bridge between Services and Pods, maintaining a list of healthy Pod IP addresses and ports. | kubectl describe svc <your-service-name>, kubectl get endpoints |
| EndpointSlice | A scalable mechanism for managing Endpoint information in larger deployments, dividing data into smaller chunks for efficiency. | kubectl get endpointslices |

Summary:

Kubernetes Endpoints and EndpointSlices play a crucial role in service discovery and load balancing. They dynamically map Services to healthy Pods, ensuring that applications remain accessible even during scaling events or Pod updates. While Endpoints provide the core functionality, EndpointSlices offer improved scalability and performance for large-scale deployments.

In conclusion, Kubernetes Endpoints and EndpointSlices form a critical, yet often unseen, layer in the platform's networking model. They provide the essential link between Services, which offer a stable point of access to applications, and the dynamic world of Pods, where application instances run and change over time. Endpoints maintain the mapping of IP addresses and ports for healthy Pods associated with a Service, ensuring traffic is directed appropriately. For larger deployments, EndpointSlices offer a more scalable and performant way to manage this mapping, dividing the information into smaller chunks for efficient updates and distribution across the cluster. Understanding how Endpoints and EndpointSlices function is key to building robust and scalable applications on Kubernetes.
