Batch Normalization in CNNs Explained: Benefits & Implementation

This article explores batch normalization, a technique used in convolutional neural networks to improve training speed and stability by reducing internal covariate shift.

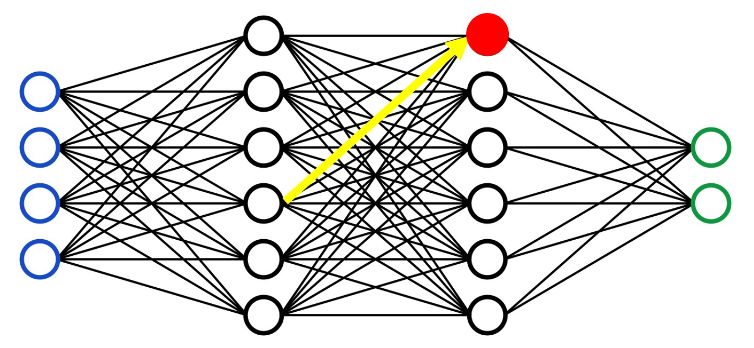

Deep learning models, particularly Convolutional Neural Networks (CNNs), have revolutionized computer vision tasks. However, training these deep networks can be challenging due to a phenomenon called "internal covariate shift." This phenomenon refers to the change in the distribution of each layer's inputs during training, caused by the updates to the preceding layers' parameters. Batch normalization is a powerful technique designed to mitigate this issue and significantly improve the training process of CNNs.

Understanding the Problem: Batch normalization addresses the "internal covariate shift" in deep learning, where the distribution of each layer's inputs changes during training as the preceding layers' parameters are updated. This slows down training and makes it harder to find optimal weights.
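To make the shift concrete, here is a small sketch that measures the statistics of a second layer's inputs before and after a few gradient steps. The two-layer network, the random data, and the learning rate are all hypothetical choices made for illustration; the only point is that updating the first layer changes what the second layer sees.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy network and random data, for illustration only
layer1 = nn.Linear(20, 50)
layer2 = nn.Linear(50, 1)
x = torch.randn(256, 20)
y = torch.randn(256, 1)
params = list(layer1.parameters()) + list(layer2.parameters())
opt = torch.optim.SGD(params, lr=0.05)


def second_layer_input_stats():
    """Mean and standard deviation of the activations fed into layer2."""
    with torch.no_grad():
        h = torch.relu(layer1(x))
    return h.mean().item(), h.std().item()


before = second_layer_input_stats()

# A few training steps update layer1, which changes layer2's input distribution
for _ in range(5):
    opt.zero_grad()
    loss = ((layer2(torch.relu(layer1(x))) - y) ** 2).mean()
    loss.backward()
    opt.step()

after = second_layer_input_stats()
print("before:", before)
print("after: ", after)
```

The exact numbers depend on the seed and the learning rate, so none are quoted here. In a deep network, every layer experiences this kind of drift from all of the layers that come before it.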

The Core Idea: Batch normalization normalizes the activations of a layer for each mini-batch during training. This means for each feature map in a CNN, we calculate the mean and variance across the mini-batch and use them to normalize the activations.

    # Example in PyTorch
    import torch.nn as nn

    bn = nn.BatchNorm2d(num_features=32)  # 32 is the number of feature maps

Step-by-Step Calculation:

a. Calculate mean and variance: For each mini-batch, calculate the mean and variance of the activations across all instances in the batch and all spatial locations within each feature map.

b. Normalize the activations: Subtract the mean and divide by the square root of the variance plus a small constant (epsilon) that guards against division by zero. The normalized activations then have approximately zero mean and unit variance.

c. Scale and Shift: Introduce learnable parameters (gamma and beta) to scale and shift the normalized activations. This allows the network to learn the optimal distribution for each layer.
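The three steps above can be reproduced by hand and compared against nn.BatchNorm2d. This is a minimal sketch, not the library's internal implementation: the tensor shape and values are made up, and gamma and beta are fixed at 1 and 0 (their initial values in PyTorch) rather than learned.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical activations: batch of 8, 4 feature maps, 5x5 spatial size
x = torch.randn(8, 4, 5, 5) * 3.0 + 2.0
eps = 1e-5  # small constant for numerical stability (BatchNorm2d's default)

# a. Per-feature-map mean and variance over batch (N) and spatial dims (H, W)
mean = x.mean(dim=(0, 2, 3), keepdim=True)
var = x.var(dim=(0, 2, 3), unbiased=False, keepdim=True)

# b. Normalize to roughly zero mean and unit variance
x_hat = (x - mean) / torch.sqrt(var + eps)

# c. Scale and shift; gamma=1 and beta=0 here, but both are learned in practice
gamma = torch.ones(1, 4, 1, 1)
beta = torch.zeros(1, 4, 1, 1)
y_manual = gamma * x_hat + beta

# Compare with the built-in layer in training mode
bn = nn.BatchNorm2d(num_features=4)
bn.train()
y_builtin = bn(x)

matches = torch.allclose(y_manual, y_builtin, atol=1e-5)
print("manual result matches nn.BatchNorm2d:", matches)
```

Note that the statistics have shape (1, 4, 1, 1): one mean and one variance per feature map, shared across every image in the batch and every spatial position.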

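Benefits for CNNs: Faster convergence, more stable training, and a mild regularizing effect that can improve generalization.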

Placement in CNN Architecture: Batch normalization is typically applied after the convolutional layer and before the activation function.

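    import torch
    import torch.nn as nn

    conv = nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3)
    bn = nn.BatchNorm2d(num_features=32)
    relu = nn.ReLU()

    x = torch.randn(16, 3, 32, 32)  # example input batch

    # Forward pass
    x = conv(x)
    x = bn(x)
    x = relu(x)

Inference (Testing): During inference, the layer stops using the statistics of the current batch and instead normalizes with running averages of the mean and variance accumulated during training. This makes the output independent of batch composition, so behavior stays consistent even with a single input image.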
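The difference between the two modes can be seen directly on a standalone layer. The sketch below assumes synthetic data with a mean of about 5 and a standard deviation of about 2; the batch sizes and the number of iterations are arbitrary. In PyTorch, train() and eval() switch between the two behaviors, and with the default momentum of 0.1 each training batch moves the running statistics a tenth of the way toward that batch's statistics.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

bn = nn.BatchNorm2d(num_features=3)

# Training mode: normalize with batch statistics and update the running averages
bn.train()
for _ in range(100):
    batch = torch.randn(16, 3, 8, 8) * 2.0 + 5.0  # synthetic data, mean ~5
    bn(batch)

running_mean = bn.running_mean.clone()
print("running mean after training:", running_mean)

# Eval mode: normalize with the stored running averages, which are now frozen
bn.eval()
single = torch.randn(1, 3, 8, 8) * 2.0 + 5.0  # a single "image"
with torch.no_grad():
    out1 = bn(single)
    out2 = bn(single)

unchanged = torch.equal(running_mean, bn.running_mean)
print("running mean unchanged in eval mode:", unchanged)
print("same input gives same output:", torch.equal(out1, out2))
```

Forgetting to call eval() before inference is a common source of inconsistent predictions, because the layer would keep normalizing with whatever batch it happens to receive.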

The following Python code defines a Convolutional Neural Network (CNN) with batch normalization for image classification, using the PyTorch framework. The network has two convolutional layers, each followed by batch normalization, ReLU activation, and a max-pooling layer that downsamples the feature maps. A final fully connected layer maps the features to 10 output classes. The code also shows how to create an instance of the model and run a forward pass on an example input batch.

    import torch
    import torch.nn as nn

    # Define a simple CNN with Batch Normalization
    class CNNwithBatchNorm(nn.Module):
        def __init__(self):
            super(CNNwithBatchNorm, self).__init__()
            self.conv1 = nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3, padding=1)
            self.bn1 = nn.BatchNorm2d(num_features=32)
            self.relu = nn.ReLU()
            self.pool = nn.MaxPool2d(kernel_size=2, stride=2)
            self.conv2 = nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, padding=1)
            self.bn2 = nn.BatchNorm2d(num_features=64)
            self.fc = nn.Linear(64 * 8 * 8, 10)  # Assuming input size of 32x32

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)  # Batch Normalization after Conv layer
            x = self.relu(x)
            x = self.pool(x)
            x = self.conv2(x)
            x = self.bn2(x)  # Batch Normalization after Conv layer
            x = self.relu(x)
            x = self.pool(x)
            x = x.view(-1, 64 * 8 * 8)
            x = self.fc(x)
            return x

    # Example usage
    model = CNNwithBatchNorm()

    # Input batch (example)
    input_batch = torch.randn(16, 3, 32, 32)  # Batch size 16, 3 channels, 32x32 image

    # Forward pass
    output = model(input_batch)
    print(output.shape)  # Output shape (batch_size, num_classes)

Explanation:

We define a CNN class, CNNwithBatchNorm, with two convolutional layers.

nn.BatchNorm2d layers are added after each convolutional layer (self.bn1 and self.bn2). The num_features argument should match the number of output channels from the preceding convolutional layer.

In the forward method, we apply batch normalization immediately after each convolutional layer and before the ReLU activation.

Key Points:

nn.BatchNorm2d learns two parameters (gamma and beta) for each feature map, allowing it to scale and shift the normalized activations.

This code demonstrates the basic implementation of batch normalization within a CNN architecture. You can experiment with it by changing the architecture, adding more layers, or applying it to a different dataset.
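The per-feature-map parameters can be inspected on the layer itself. In PyTorch, gamma is stored as weight and beta as bias, and the running statistics are kept as non-learnable buffers. The following sketch uses a standalone layer with 64 feature maps and also shows what happens when num_features does not match the preceding convolution; the layer sizes are illustrative.

```python
import torch
import torch.nn as nn

bn = nn.BatchNorm2d(num_features=64)

# gamma (weight) and beta (bias): one value per feature map
gamma_shape = tuple(bn.weight.shape)
beta_shape = tuple(bn.bias.shape)
learnable = sum(p.numel() for p in bn.parameters())
print("gamma:", gamma_shape, "beta:", beta_shape, "learnable values:", learnable)

# Running statistics are buffers: saved with the model but not trained
buffers = {name: tuple(buf.shape) for name, buf in bn.named_buffers()}
print("buffers:", buffers)

# A num_features mismatch fails at the first forward pass
conv = nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3, padding=1)
wrong_bn = nn.BatchNorm2d(num_features=64)  # should be 32 to match conv
try:
    wrong_bn(conv(torch.randn(2, 3, 8, 8)))
    mismatch_error = None
except (RuntimeError, ValueError) as err:
    mismatch_error = err
print("mismatch raised an error:", mismatch_error is not None)
```

Because the parameter count depends only on the number of feature maps, batch normalization adds very few weights compared with the convolution it follows.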

Batch Normalization is a powerful tool for improving deep learning models. Understanding its underlying principles and implementation details can significantly enhance your ability to train and optimize complex networks.

Problem: Deep neural networks suffer from "internal covariate shift," where the distribution of layer inputs changes during training, slowing it down.

Solution: Batch normalization stabilizes these distributions, leading to faster and more effective training.

In essence, batch normalization acts as a stabilizing force during training, preventing drastic shifts in layer inputs and leading to a more efficient and effective learning process for CNNs.

Batch normalization is a crucial technique for stabilizing and accelerating the training of deep convolutional neural networks. By normalizing the activations within each mini-batch, it mitigates the internal covariate shift phenomenon, enabling faster convergence and improved generalization performance. This method involves normalizing activations to zero mean and unit variance, then scaling and shifting them using learnable parameters. Batch normalization is typically applied after convolutional layers and before activation functions, contributing significantly to the effectiveness of modern CNN architectures in computer vision tasks. Its ability to stabilize training, along with its regularizing effect, makes it an essential tool for developing robust and high-performing deep learning models.
