TensorFlow Pre-trained Word Embeddings: Word2Vec & GloVe

Learn how to effectively utilize pre-trained word embeddings like Word2Vec and GloVe in your TensorFlow models for enhanced natural language processing tasks.

Learn how to effectively utilize pre-trained word embeddings like Word2Vec and GloVe in your TensorFlow models for enhanced natural language processing tasks.

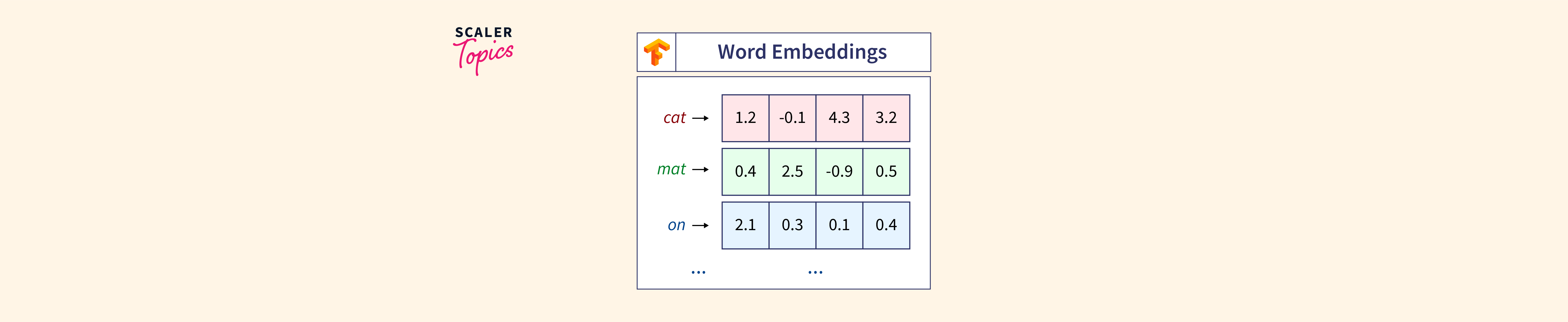

Word embeddings are a powerful technique in natural language processing (NLP) that represent words as dense vectors, capturing semantic relationships between them. Leveraging pre-trained word embeddings can significantly enhance the performance of your NLP models, especially when dealing with limited training data. This article provides a step-by-step guide on how to incorporate pre-trained word embeddings into your neural network models.

Choose a pre-trained word embedding model: Popular options include Word2Vec, GloVe, and FastText. Each model has its own strengths and weaknesses, so consider your specific task and dataset.

import gensim.downloader as api

# Download the "glove-wiki-gigaword-50" embeddings

glove_model = api.load("glove-wiki-gigaword-50") Load the pre-trained embeddings: These embeddings are typically stored in a large text file. You can use libraries like Gensim or TensorFlow to load them into memory.

# Access the embedding for the word "king"

king_embedding = glove_model["king"] Create an embedding matrix: This matrix will map words in your vocabulary to their corresponding embeddings. You can initialize the matrix randomly and then update the rows corresponding to words in your vocabulary with the pre-trained embeddings.

import numpy as np

embedding_dim = 50

embedding_matrix = np.random.rand(vocab_size, embedding_dim)

for word, i in word_index.items():

if word in glove_model:

embedding_matrix[i] = glove_model[word]Build your neural network model: Use the embedding matrix as the weight for the embedding layer in your model. This layer will transform words into their corresponding embeddings.

from tensorflow.keras.layers import Embedding

model = Sequential()

model.add(Embedding(vocab_size, embedding_dim, weights=[embedding_matrix], trainable=False))Train and evaluate your model: You can now train your model on your dataset. Since you're using pre-trained embeddings, you might see faster convergence and better performance, especially if your dataset is small.

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

model.fit(X_train, y_train, epochs=10)Remember to choose an embedding model that aligns with your task and dataset. Experiment with different pre-trained embeddings and fine-tuning techniques to optimize your model's performance.

This Python code demonstrates how to build a simple neural network for natural language processing tasks using pre-trained word embeddings. It downloads GloVe embeddings, prepares a vocabulary from a sample corpus, creates an embedding matrix, and builds a model with an embedding layer, an LSTM layer, and a dense output layer. The code then trains the model on sample data and evaluates its performance. This example provides a starting point for incorporating pre-trained word embeddings into various NLP tasks.

import gensim.downloader as api

import numpy as np

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Embedding, LSTM, Dense

# 1. Choose and download pre-trained word embeddings

glove_model = api.load("glove-wiki-gigaword-50")

# Example corpus (replace with your own data)

corpus = [

"This is a sample sentence",

"Another sentence for demonstration",

"Word embeddings are powerful"

]

# 2. Prepare vocabulary and word-to-index mapping

vocabulary = set()

for sentence in corpus:

vocabulary.update(sentence.lower().split())

word_index = {word: index for index, word in enumerate(vocabulary)}

vocab_size = len(vocabulary)

# 3. Create embedding matrix

embedding_dim = 50

embedding_matrix = np.random.rand(vocab_size, embedding_dim)

for word, i in word_index.items():

if word in glove_model:

embedding_matrix[i] = glove_model[word]

# 4. Prepare data for the model

# (This is a simplified example, you'll need to adjust based on your task)

X = [[word_index[word] for word in sentence.lower().split()] for sentence in corpus]

y = [0, 1, 0] # Example labels (replace with your own)

# 5. Build the neural network model

model = Sequential()

model.add(Embedding(vocab_size, embedding_dim, weights=[embedding_matrix], trainable=False))

model.add(LSTM(128)) # Example RNN layer

model.add(Dense(1, activation='sigmoid')) # Example output layer

# 6. Compile and train the model

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

model.fit(X, y, epochs=10) # Adjust epochs as needed

# 7. Evaluate the model (replace with your evaluation method)

loss, accuracy = model.evaluate(X, y)

print(f"Loss: {loss}, Accuracy: {accuracy}")Explanation:

trainable=False argument in the embedding layer ensures that the pre-trained embeddings are not updated during training.Key Points:

trainable=True) to fine-tune the embeddings on your specific task.This example provides a basic framework for using pre-trained word embeddings in your neural network models. You can adapt and expand upon this code to suit your specific natural language processing tasks.

1. Choosing a Pre-trained Model:

2. Loading Embeddings:

.txt or .bin. Libraries like Gensim handle these formats.3. Embedding Matrix:

4. Neural Network Model:

trainable=False keeps pre-trained embeddings fixed. Fine-tuning (trainable=True) can improve performance but risks overfitting on small datasets.5. Training and Evaluation:

General Tips:

Additional Resources:

This guide outlines how to leverage pre-trained word embeddings for improved performance in your NLP neural networks.

1. Choose and Load:

2. Create Embedding Matrix:

3. Integrate into Neural Network:

trainable=False for the embedding layer to prevent modification during training (optional).4. Train and Evaluate:

Key Points:

This approach allows you to benefit from the rich semantic information captured in pre-trained embeddings, enhancing your model's ability to understand and process language.

By incorporating pre-trained word embeddings, you can significantly enhance the performance of your NLP models, especially when working with limited training data. Remember to carefully choose pre-trained embeddings that align with your specific task and dataset, and consider fine-tuning the embeddings for optimal results. Experimentation with different architectures, hyperparameters, and pre-trained embedding models is crucial for achieving the best possible performance in your NLP applications. This guide provides a solid foundation for understanding and implementing pre-trained word embeddings in your neural network models, empowering you to tackle a wide range of NLP challenges effectively.

Using pre-trained word embeddings | May 5, 2020 ... Description: Text classification on the Newsgroup20 dataset using pre-trained GloVe word embeddings. ... Setup. import os # Only the TensorFlow ...

Using pre-trained word embeddings | May 5, 2020 ... Description: Text classification on the Newsgroup20 dataset using pre-trained GloVe word embeddings. ... Setup. import os # Only the TensorFlow ... Word embeddings | Text | TensorFlow | May 27, 2023 ... This tutorial contains an introduction to word embeddings. You will train your own word embeddings using a simple Keras model for a sentiment classification ...

Word embeddings | Text | TensorFlow | May 27, 2023 ... This tutorial contains an introduction to word embeddings. You will train your own word embeddings using a simple Keras model for a sentiment classification ... Guide to Using Pre-trained Word Embeddings in NLP | In this article, we'll take a look at how you can use pre-trained word embeddings to classify text with TensorFlow. Full code included.

Guide to Using Pre-trained Word Embeddings in NLP | In this article, we'll take a look at how you can use pre-trained word embeddings to classify text with TensorFlow. Full code included. Word Embeddings with TensorFlow - Scaler Topics | This tutorial covers the knowledge of Word Embeddings.

Word Embeddings with TensorFlow - Scaler Topics | This tutorial covers the knowledge of Word Embeddings. Top 5 Pre-trained Word Embeddings | by Aakanksha Patil | Medium | Introduction

Top 5 Pre-trained Word Embeddings | by Aakanksha Patil | Medium | Introduction Pre-trained Word embedding using Glove in NLP models ... | A Computer Science portal for geeks. It contains well written, well thought and well explained computer science and programming articles, quizzes and practice/competitive programming/company interview Questions.

Pre-trained Word embedding using Glove in NLP models ... | A Computer Science portal for geeks. It contains well written, well thought and well explained computer science and programming articles, quizzes and practice/competitive programming/company interview Questions. How to use Pre-trained Word Embeddings in PyTorch | by Martín ... | “For decades, machine learning approaches targeting Natural Language Processing problems have been based on shallow models (e.g., SVM and…

How to use Pre-trained Word Embeddings in PyTorch | by Martín ... | “For decades, machine learning approaches targeting Natural Language Processing problems have been based on shallow models (e.g., SVM and… models.word2vec – Word2vec embeddings — gensim | Efficient topic modelling in Python

models.word2vec – Word2vec embeddings — gensim | Efficient topic modelling in Python