Ingress vs Load Balancer: Choosing the Right Tool

Learn the key differences between Ingress and Load Balancers in Kubernetes and choose the right solution for routing traffic to your applications.


In Kubernetes, both Ingress and Load Balancers play crucial roles in managing network traffic, but they have distinct purposes. Load Balancers act like traffic cops, distributing incoming requests across the pods in your cluster, while Ingress functions as a receptionist, directing traffic to specific services based on rules such as hostnames or paths.

In Kubernetes, both Ingress and Load Balancers help manage network traffic, but they serve different purposes.

Think of a Load Balancer as a traffic cop directing cars (requests) to different parking spots (pods) within a parking lot (cluster).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-loadbalancer
spec:
  type: LoadBalancer
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

This code creates a Load Balancer that distributes incoming traffic on port 80 to pods with the label "app: my-app" on port 8080.

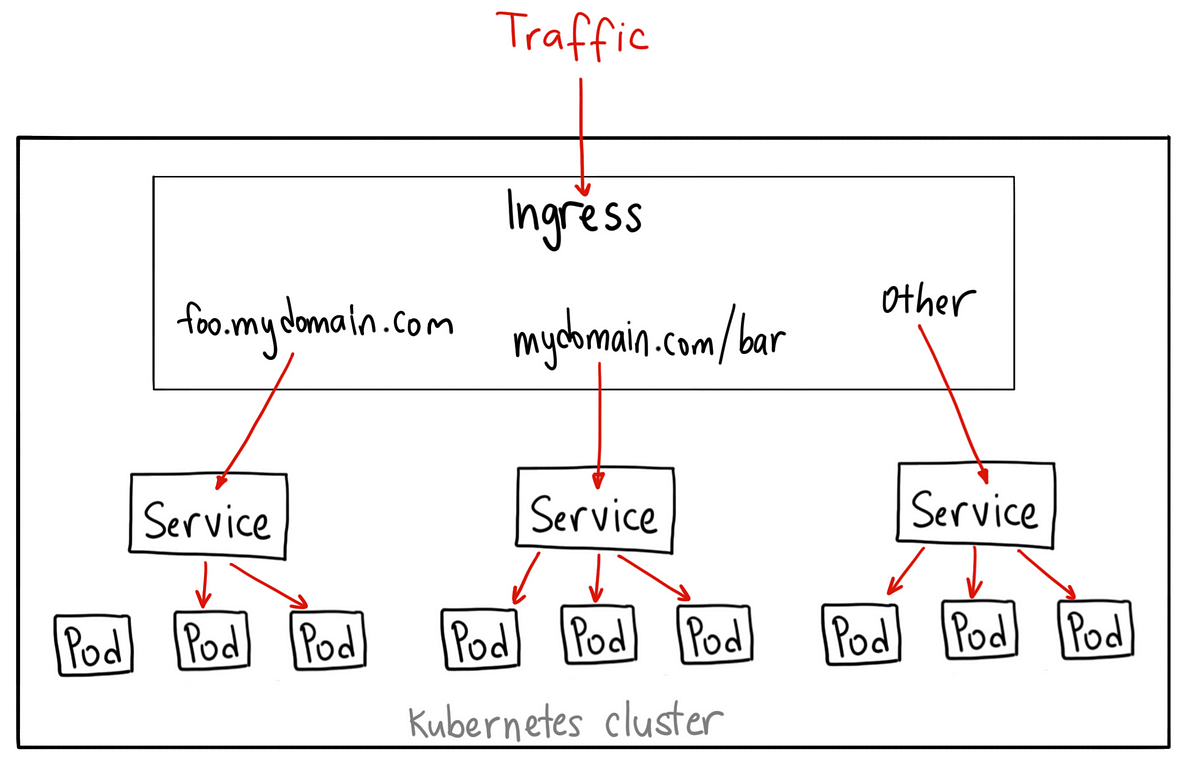

An Ingress is like a receptionist in a building, directing visitors (traffic) to specific offices (services) based on their names or departments (hostnames or paths).

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
spec:
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80
```

This code defines an Ingress rule that forwards traffic from "example.com" to the "my-service" service on port 80.
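An Ingress resource only takes effect when an ingress controller is running in the cluster to act on it. As a minimal sketch, the same rule can name the controller class explicitly through `spec.ingressClassName`. The class name `nginx` here is an assumption for illustration; the classes actually available depend on which controller is installed.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
spec:
  # Assumption: an ingress controller is installed and has registered an
  # IngressClass named "nginx". Check with `kubectl get ingressclass`.
  ingressClassName: nginx
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80
```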

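Key Differences:

- A Load Balancer works at the transport layer (TCP/UDP), gets its own external IP, and exposes a single service.
- An Ingress works at the application layer (HTTP/HTTPS), routes requests by hostname or path, and sits behind a Load Balancer or NodePort.

In essence, use a Load Balancer to expose a service externally and an Ingress to route incoming HTTP/HTTPS traffic to the right service within your cluster.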

This code demonstrates how to expose two Kubernetes services (frontend and backend) externally using Load Balancers and Ingress. It deploys frontend and backend applications with multiple replicas for redundancy and exposes them using LoadBalancer services. An Ingress resource is then defined to route traffic based on the hostname, directing requests to www.example.com to the frontend service and requests to api.example.com to the backend service. This setup ensures even traffic distribution, external accessibility, and a single entry point for managing external traffic.

This example demonstrates the difference between Load Balancers and Ingress in Kubernetes, using a simple web application scenario.

Scenario:

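We have a web application with two services:

- frontend
- backend

We want to:

- Expose both services externally.
- Reach each service under its own hostname:
  - www.example.com for the frontend.
  - api.example.com for the backend.

Solution: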

We'll use a combination of Load Balancers and Ingress:

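Load Balancers:

- Expose the frontend and backend services externally.

Ingress:

- Routes requests for www.example.com to the frontend service.
- Routes requests for api.example.com to the backend service.

Code: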

1. Deployments and Services:

```yaml
# frontend deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: frontend
          image: nginx:latest
          ports:
            - containerPort: 80
---
# frontend service
apiVersion: v1
kind: Service
metadata:
  name: frontend-service
spec:
  type: LoadBalancer
  selector:
    app: frontend
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
# backend deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend
spec:
  replicas: 2
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      containers:
        - name: backend
          image: httpd:latest
          ports:
            - containerPort: 80
---
# backend service
apiVersion: v1
kind: Service
metadata:
  name: backend-service
spec:
  type: LoadBalancer
  selector:
    app: backend
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```

2. Ingress:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
spec:
  rules:
    - host: www.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend-service
                port:
                  number: 80
    - host: api.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: backend-service
                port:
                  number: 80
```

Explanation:

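- The Deployments run the frontend with three replicas and the backend with two.
- The LoadBalancer services expose frontend-service and backend-service externally.
- The Ingress routes requests for www.example.com to the frontend-service.
- The Ingress routes requests for api.example.com to the backend-service.

This setup ensures that:

- Traffic is distributed evenly across the replicas of each application.
- Both services are accessible externally.
- There is a single entry point for managing external traffic.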

This is a basic example, and you can further customize Ingress rules with path-based routing, SSL termination, and more.
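As a rough sketch of those two customizations, the Ingress below terminates TLS for www.example.com and splits traffic by path instead of by hostname. The resource name `my-ingress-tls`, the Secret name `example-tls`, and the `/api` path are assumptions made for illustration; the Secret would have to exist already as a `kubernetes.io/tls` Secret holding the certificate and key.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress-tls          # hypothetical name
spec:
  tls:
    - hosts:
        - www.example.com
      secretName: example-tls   # hypothetical Secret of type kubernetes.io/tls
  rules:
    - host: www.example.com
      http:
        paths:
          # Requests under /api go to the backend.
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: backend-service
                port:
                  number: 80
          # Everything else goes to the frontend.
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend-service
                port:
                  number: 80
```

When several paths match a request, the longest matching path takes precedence, so `/api` requests reach the backend even though `/` also matches.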


Choosing the Right Approach:

| Feature | Load Balancer | Ingress |
|---|---|---|
| Analogy | Traffic cop directing cars to parking spots | Receptionist directing visitors to offices |
| Layer | Transport (TCP/UDP) | Application (HTTP/HTTPS) |
| Function | Exposes a service externally, distributes traffic evenly across its pods | Routes HTTP/HTTPS traffic to services based on rules |
| IP Address | Gets its own external IP | Sits behind a Load Balancer or NodePort |
| Routing | Basic, based on port | Advanced, based on hostnames and paths; headers and more depend on the controller |
| Use Case | Exposing a service to the outside world | Routing traffic to several services based on HTTP/HTTPS rules |

In short: Use a Load Balancer to make your service accessible externally. Use Ingress to intelligently route traffic within your cluster based on HTTP/HTTPS criteria.
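One design choice worth noting: when every request is meant to enter through the Ingress, the services behind it do not each need their own Load Balancer. A minimal sketch, assuming the ingress controller itself is already reachable from outside the cluster, is to declare the service as `ClusterIP` so that it is only reachable inside the cluster:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: frontend-service
spec:
  # ClusterIP is the default Service type: no external IP is provisioned,
  # so outside traffic can only arrive through the Ingress.
  type: ClusterIP
  selector:
    app: frontend
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```

This keeps the number of externally provisioned load balancers down to the one in front of the ingress controller, at the cost of supporting only what the Ingress can route (HTTP/HTTPS).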

In conclusion, while both Load Balancers and Ingress manage network traffic in Kubernetes, they do so at different levels and with distinct purposes. Load Balancers handle external traffic distribution to expose services, acting as a gateway to your cluster. Ingress, on the other hand, manages internal traffic routing based on HTTP/HTTPS rules, directing requests to the appropriate services within your cluster. Choosing the right approach depends on your specific needs, with Load Balancers suitable for simple service exposure and Ingress offering more advanced routing capabilities for complex applications. Understanding the differences between these two components is crucial for designing and deploying scalable and efficient applications in Kubernetes.
