Understanding global_step in TensorFlow

Understand the crucial role of global_step in TensorFlow for tracking training iterations, optimizing learning rates, and restoring models for consistent, efficient training.

Understand the crucial role of global_step in TensorFlow for tracking training iterations, optimizing learning rates, and restoring models for consistent, efficient training.

In TensorFlow, keeping track of your training progress is essential for effective model development. A key component of this is the global_step, a variable that acts as a counter for the total number of training steps taken. This simple counter plays a vital role in various aspects of training, from monitoring progress to implementing sophisticated learning rate schedules.

In TensorFlow, the global_step is a variable that keeps track of the total number of training steps taken.

Think of it like a counter that increments every time your model processes a batch of data.

global_step = tf.Variable(1, name="global_step") It's useful for things like:

summary_writer.add_summary(summary, global_step=global_step)While TensorFlow 1.x had a built-in tf.train.get_global_step(), in TensorFlow 2.0 and later, it's recommended to manage your own global_step variable.

You can manually increment it within your training loop.

global_step.assign_add(1)Even though you manage it yourself, the global_step remains a crucial aspect of tracking and managing your training process in TensorFlow.

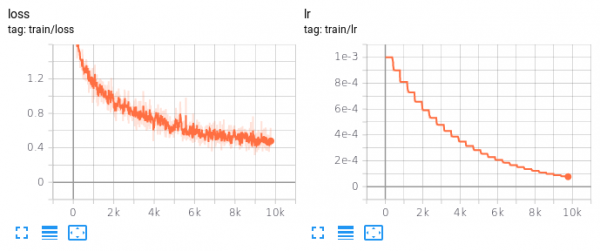

This Python code defines and trains a simple feedforward neural network using TensorFlow. It defines a two-layer model, an Adam optimizer, and the mean squared error loss function. The code then iterates through epochs and batches of data, calculating the loss and gradients, applying gradients to update model weights, and incrementing a global step variable. It logs the loss and mean absolute error to TensorBoard, prints progress updates, and saves checkpoints at specific global step intervals. The global step variable is used to track training progress and associate summaries and checkpoints with specific training iterations.

import tensorflow as tf

# Define your model

model = tf.keras.models.Sequential([

tf.keras.layers.Dense(10, activation='relu', input_shape=(100,)),

tf.keras.layers.Dense(1)

])

# Define your optimizer

optimizer = tf.keras.optimizers.Adam(learning_rate=0.01)

# Define your loss function

loss_fn = tf.keras.losses.MeanSquaredError()

# Define your metrics

metrics = ['mean_absolute_error']

# Initialize the global step variable

global_step = tf.Variable(1, name="global_step", trainable=False, dtype=tf.int64)

# Create a TensorBoard summary writer

summary_writer = tf.summary.create_file_writer('logs/training')

# Training loop

for epoch in range(10):

for batch_idx, (x_batch, y_batch) in enumerate(dataset):

with tf.GradientTape() as tape:

predictions = model(x_batch)

loss = loss_fn(y_batch, predictions)

# Calculate gradients

gradients = tape.gradient(loss, model.trainable_variables)

# Apply gradients and update model weights

optimizer.apply_gradients(zip(gradients, model.trainable_variables))

# Increment the global step

global_step.assign_add(1)

# Log metrics and summaries

with summary_writer.as_default():

tf.summary.scalar('loss', loss, step=global_step)

tf.summary.scalar('mean_absolute_error',

tf.keras.metrics.mean_absolute_error(y_batch, predictions),

step=global_step)

# Print progress

if global_step % 100 == 0:

print(f'Epoch: {epoch}, Global Step: {global_step.numpy()}, Loss: {loss.numpy()}')

# Example: Save a checkpoint every 500 steps

if global_step % 500 == 0:

checkpoint = tf.train.Checkpoint(model=model, optimizer=optimizer, global_step=global_step)

checkpoint.save(file_prefix='./checkpoints/my_checkpoint')

# Close the summary writer

summary_writer.close()Explanation:

Initialization:

global_step variable is initialized to 1.Training Loop:

global_step using assign_add(1) after each batch.global_step.global_step.global_step intervals.TensorBoard Visualization:

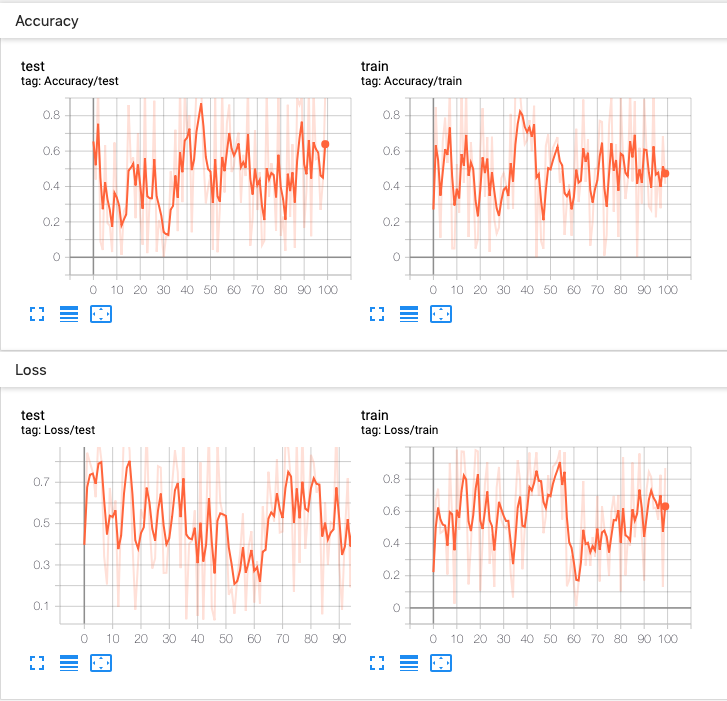

tensorboard --logdir=logs/training in your terminal to visualize the training progress, including the loss and metrics plotted against the global_step.Checkpoint Saving:

Key Points:

global_step manually.global_step for logging, checkpointing, and potentially learning rate scheduling.global_step.Relationship with epochs: While an epoch represents a full pass through your entire training dataset, the global_step increments with each batch processed. Therefore, the global_step provides a more granular view of the training progress, especially when dealing with large datasets that require multiple epochs.

Customization: You can initialize the global_step to a value other than 1 if you're resuming training from a previously saved checkpoint. This ensures continuity in your training process.

Alternatives in Keras: If you're using Keras for model training, you might not directly interact with the global_step variable. Keras provides callbacks like ModelCheckpoint and TensorBoard that internally handle the global_step for saving checkpoints and logging summaries.

Debugging and analysis: By analyzing the metrics and summaries logged against the global_step in TensorBoard, you can gain insights into your model's training behavior, identify potential issues like overfitting or slow convergence, and make informed decisions about hyperparameter tuning.

| Feature | Description |

|---|---|

| What it is | A tf.Variable acting as a counter for training steps (batches processed). |

| How to create it | global_step = tf.Variable(1, name="global_step") |

| How to increment it |

global_step.assign_add(1) within your training loop. |

| Key uses | |

| - Tracking progress | Monitor how far training has progressed. |

| - Saving checkpoints | Save the model at specific steps. |

| - Learning rate decay | Adjust learning rate dynamically. |

| - TensorBoard visualization | Log summaries and metrics against the step. |

| TensorFlow 1.x vs 2.x | |

| - 1.x | Used tf.train.get_global_step(). |

| - 2.x onwards | Manually manage the global_step variable. |

Key takeaway: Even though manually managed in TensorFlow 2.0+, global_step remains essential for tracking, managing, and visualizing your training process.

The global_step in TensorFlow, while a simple concept, is a fundamental tool for managing and understanding your model's training process. It acts as a universal counter, tracking the number of training steps taken, and seamlessly integrates with other TensorFlow components for checkpointing, learning rate decay, and visualization in TensorBoard. Whether you're working on a single machine or in a distributed training environment, effectively using and monitoring the global_step can significantly improve your model development workflow and lead to more informed training decisions.

tf.compat.v1.train.global_step | TensorFlow v2.16.1 | Small helper to get the global step.

tf.compat.v1.train.global_step | TensorFlow v2.16.1 | Small helper to get the global step. Code with Eager Execution, Run with Graphs: Optimizing Your Code ... | The TensorFlow blog contains regular news from the TensorFlow team and the community, with articles on Python, TensorFlow.js, TF Lite, TFX, and more.

Code with Eager Execution, Run with Graphs: Optimizing Your Code ... | The TensorFlow blog contains regular news from the TensorFlow team and the community, with articles on Python, TensorFlow.js, TF Lite, TFX, and more.![[RLlib] Problem with TFModelV2 loading after having saved one with ...](https://global.discourse-cdn.com/flex020/uploads/ray/original/1X/8f4dcb72f7cd34e2a332d548bd65860994bc8ff1.png) [RLlib] Problem with TFModelV2 loading after having saved one with ... | Hello everybody! I am new to topic creation in general so I would request a bit of patience if I do not do everything correctly from the start 😃 Background I use trainer.get_policy().export_model() export to get a TFModel. The model is exported succesfully. What’s the problem? I am unable to load with tf.saved_model.load() or use the model with SavedModel CLI. Script ray.init() trainer = DQNTrainer(env="CartPole-v0") for i in range(2): result = trainer.train() print(pretty_print...

[RLlib] Problem with TFModelV2 loading after having saved one with ... | Hello everybody! I am new to topic creation in general so I would request a bit of patience if I do not do everything correctly from the start 😃 Background I use trainer.get_policy().export_model() export to get a TFModel. The model is exported succesfully. What’s the problem? I am unable to load with tf.saved_model.load() or use the model with SavedModel CLI. Script ray.init() trainer = DQNTrainer(env="CartPole-v0") for i in range(2): result = trainer.train() print(pretty_print... Monitoring PyTorch Training using Tensorboard • David Stutz | This article presents a simple example, including code, for monitoring PyTorch training using Tensorboard.

Monitoring PyTorch Training using Tensorboard • David Stutz | This article presents a simple example, including code, for monitoring PyTorch training using Tensorboard. torch.utils.tensorboard — PyTorch 2.5 documentation | Before going further, more details on TensorBoard can be found at https://www.tensorflow.org/tensorboard/ ... global_step (int) – Global step value to record.

torch.utils.tensorboard — PyTorch 2.5 documentation | Before going further, more details on TensorBoard can be found at https://www.tensorflow.org/tensorboard/ ... global_step (int) – Global step value to record.