TensorFlow SSE4.2 and AVX Compilation Guide

Learn to optimize your TensorFlow performance by compiling it with SSE4.2 and AVX instructions for faster deep-learning model training and execution.

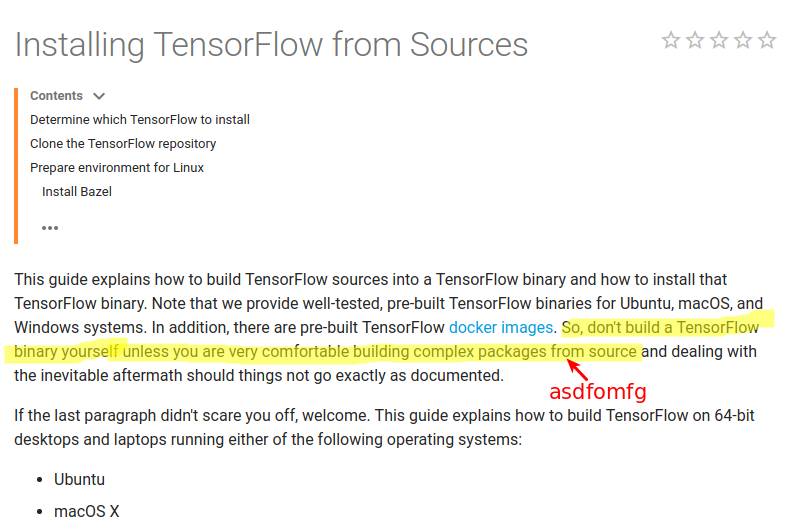

To harness the full potential of your CPU's instruction sets like SSE4.2 and AVX for accelerated TensorFlow performance, compiling TensorFlow from source might be necessary. Pre-compiled TensorFlow binaries often prioritize broad compatibility, potentially missing out on optimizations tailored for specific CPU architectures. If you encounter a message stating "The TensorFlow library wasn't compiled to use SSE4.2 instructions," it signifies that your CPU supports these instructions, but the installed TensorFlow binary isn't leveraging them.

To leverage the power of SSE4.2 and AVX instructions for faster TensorFlow computations, you might need to compile it from source. Pre-built TensorFlow binaries often prioritize compatibility, omitting optimizations for specific CPU architectures.

If you encounter a message like "The TensorFlow library wasn't compiled to use SSE4.2 instructions," it indicates your CPU supports these instructions, but the TensorFlow binary isn't utilizing them.

Solution:

Check CPU Support: Ensure your CPU supports the desired instructions (SSE4.2, AVX, etc.).

Build from Source: Download TensorFlow source code and follow the build instructions.

Configuration: During the build process, you'll likely find configuration options or flags to enable specific instruction sets. Refer to TensorFlow's documentation for precise instructions.

Example (Illustrative):

    bazel build --config=opt \
        --copt=-mavx \
        --copt=-mavx2 \
        //tensorflow/tools/pip_package:build_pip_package

Note: Building from source can be complex and time-consuming. Ensure you have the necessary build tools and dependencies.
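One way to choose the flags is to derive them from what the CPU reports. The sketch below is illustrative only: the mapping covers just the instruction sets named in this guide, the keys are the flag names Linux reports through lscpu, and `build_bazel_command` is a hypothetical helper that assembles the command as a list of arguments without running Bazel.

```python
import shlex

# CPU flag (as reported by lscpu) -> GCC/Clang option.
COPT_FOR_FLAG = {
    "sse4_2": "-msse4.2",
    "avx": "-mavx",
    "avx2": "-mavx2",
}

# Build target used in this guide's examples.
PIP_TARGET = "//tensorflow/tools/pip_package:build_pip_package"


def build_bazel_command(cpu_flags, target=PIP_TARGET):
    """Assemble a bazel invocation with one --copt per supported instruction set."""
    command = ["bazel", "build", "--config=opt"]
    for flag, copt in COPT_FOR_FLAG.items():
        if flag in cpu_flags:
            command.append(f"--copt={copt}")
    command.append(target)
    return command


if __name__ == "__main__":
    # Example: a CPU that supports SSE4.2 and AVX but not AVX2.
    print(shlex.join(build_bazel_command({"sse4_2", "avx"})))
```

Keeping the command as a list makes it easy to inspect before running it, and leaves out flags for instruction sets the CPU doesn't advertise.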

Alternative:

Explore pre-built TensorFlow packages optimized for specific CPU architectures. Some package managers or repositories might offer these.

The walkthrough below builds TensorFlow from source with SSE4.2 and AVX instructions enabled. It covers checking CPU support with lscpu, installing dependencies, downloading the TensorFlow source code, configuring the build, and building with Bazel using optimization flags, followed by creating a Python wheel package, installing it, and verifying the installation. Consult the official TensorFlow documentation for the most accurate and up-to-date information.

This example demonstrates how to check for CPU support and build TensorFlow from source with SSE4.2 and AVX instructions enabled.

1. Check CPU Support:

Use the lscpu command to check for supported instruction sets:

    lscpu | grep -E 'sse4_2|avx'

Look for the sse4_2 and avx flags in the output. If they are present, your CPU supports these instructions.
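The same check can be scripted. The sketch below is a minimal example, assuming a Linux system where `/proc/cpuinfo` has a `flags` line with the same flag names lscpu prints; `parse_cpu_flags` and `report_support` are hypothetical helper names, not part of TensorFlow.

```python
from pathlib import Path

# Flag names as they appear on x86 Linux.
WANTED_FLAGS = ("sse4_2", "avx", "avx2")


def parse_cpu_flags(cpuinfo_text):
    """Return the feature flags from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def report_support(flags, wanted=WANTED_FLAGS):
    """Map each wanted flag to whether the CPU advertises it."""
    return {name: name in flags for name in wanted}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print(report_support(parse_cpu_flags(cpuinfo.read_text())))
    else:
        print("No /proc/cpuinfo found; this sketch only covers Linux.")
```

A `False` entry means the matching compiler flag should be left out of the build.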

2. Install Dependencies:

Install the necessary dependencies for building TensorFlow. Refer to the official TensorFlow installation guide for detailed instructions based on your operating system: https://www.tensorflow.org/install
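Before starting, it can help to confirm that the basic tools are on your PATH. The sketch below is a minimal check, assuming `git`, `bazel`, and `pip` are the commands you need (all three are used later in this guide); the official guide lists the full requirements, and `missing_tools` is a hypothetical helper name.

```python
import shutil

# Commands used later in this guide; extend the list for your platform.
REQUIRED_TOOLS = ("git", "bazel", "pip")


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing from PATH:", ", ".join(missing))
    else:
        print("All required tools were found.")
```

The `which` parameter exists only so the lookup can be swapped out when testing; in normal use the default `shutil.which` is enough.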

3. Download TensorFlow Source Code:

Clone the TensorFlow repository from GitHub:

    git clone https://github.com/tensorflow/tensorflow.git
    cd tensorflow

4. Configure Build with SSE4.2 and AVX Support:

You don't need to edit any source files for this. The file tensorflow/core/platform/cpu_feature_guard.cc only compares, at startup, the instruction sets the binary was compiled with against those your CPU supports and logs the message described earlier; it doesn't decide which instructions the compiler emits. Those are selected with compiler flags. Run the configure script in the repository root and answer its prompts:

    ./configure

When it asks for the optimization flags to use with --config=opt, you can keep the default and pass the instruction-set flags explicitly on the Bazel command line in the next step.

5. Build TensorFlow:

Use Bazel to build TensorFlow with the desired optimizations:

    bazel build --config=opt \
        --copt=-msse4.2 \
        --copt=-mavx \
        --copt=-mavx2 \
        //tensorflow/tools/pip_package:build_pip_package

This command builds TensorFlow with:

--config=opt: Enables optimizations.

--copt=-msse4.2: Enables SSE4.2 instructions.

--copt=-mavx: Enables AVX instructions.

--copt=-mavx2: Enables AVX2 instructions. Omit this flag if avx2 doesn't appear in the lscpu output, because a binary built with instructions your CPU lacks will fail to run.

6. Create a Wheel Package:

After the build completes, create a Python wheel package:

    bazel-bin/tensorflow/tools/pip_package/build_pip_package /tmp/tensorflow_pkg

7. Install the Package:

Install the built TensorFlow package:

    pip install /tmp/tensorflow_pkg/tensorflow-*.whl

8. Verify Installation:

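Run a simple TensorFlow program to verify that the installation works:

    import tensorflow as tf
    print(tf.config.list_physical_devices('CPU'))

The output lists the CPU device, which confirms that the package imports and runs. It doesn't report instruction sets. To check that SSE4.2 and AVX are in use, look at the log output when TensorFlow starts: the message about the library not being compiled to use those instructions should no longer appear.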
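A more direct check is to scan TensorFlow's log output for the startup message. The sketch below rests on assumptions: it matches only the message wording quoted earlier in this guide, other TensorFlow versions may phrase it differently (in which case the pattern would need adjusting), and `unused_instruction_sets` is a hypothetical helper. The sample text is modeled on that quoted message and is not captured output.

```python
import re

# Matches the wording quoted in this guide; other versions may differ.
UNUSED_PATTERN = re.compile(r"wasn't compiled to use (\S+) instructions")


def unused_instruction_sets(log_text):
    """Return instruction sets the log says the binary was not compiled to use."""
    return sorted(set(UNUSED_PATTERN.findall(log_text)))


if __name__ == "__main__":
    # Sample text modeled on the message quoted in this guide.
    sample_log = (
        "The TensorFlow library wasn't compiled to use SSE4.2 instructions\n"
        "The TensorFlow library wasn't compiled to use AVX instructions\n"
    )
    print(unused_instruction_sets(sample_log))
```

To use it on a real run, capture the standard error stream of a short script that imports TensorFlow and runs one operation, then pass that text to the function. An empty list suggests the message no longer appears.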

Note: This is a simplified example. The actual build process and configuration options may vary depending on your system and desired settings. Always refer to the official TensorFlow documentation for the most up-to-date instructions.

In conclusion, pre-built TensorFlow binaries are convenient, but compiling from source lets TensorFlow use instruction sets your CPU supports, such as SSE4.2 and AVX. With the right build flags, CPU computations can run faster, though the gain depends on the model and the hardware. The approach requires familiarity with build systems and raises compatibility considerations: a binary built for one CPU may not run on another that lacks the same instructions. Weigh the performance benefits against the complexity of building from source, and explore alternative optimization techniques to determine the most effective strategy for your specific TensorFlow workloads.
