TensorFlow Model Saving: Understanding the 3 File Output

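This article explains the purpose of the files generated when saving a TensorFlow model. The checkpoint, .meta, and .data files come from the older TensorFlow 1.x tf.train.Saver API, which also writes an .index file. The sections below describe the TensorFlow 2 SavedModel layout that model.save produces.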


When working with TensorFlow or Keras, saving your trained models is a crucial step. You'll often encounter three files associated with your saved model. These files serve distinct purposes in storing your model's architecture and learned parameters. Understanding their roles is essential for managing, sharing, and deploying your models effectively.

When you save a TensorFlow/Keras model, you might notice three files:

- my_model/saved_model.pb: This file stores the model's architecture (the layers, their connections, and the computation graph). Think of it as the blueprint of your model.
- my_model/variables/variables.data-00000-of-00001: This file contains the actual trained values (weights and biases) of your model. These values determine how your model makes predictions.
- my_model/variables/variables.index: This file acts as an index, mapping variable names to their locations within the variables.data file.

Why three files?

TensorFlow separates the model structure from the variable values for flexibility:

- You can share the model's architecture (the .pb file) without sharing the potentially large variable data.

Loading the model:

You typically don't need to handle these files individually. Use tf.keras.models.load_model("my_model") to load the entire model, including architecture and weights.
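To see what actually lands on disk, here is a minimal sketch that saves a small model with tf.saved_model.save, lists the files it wrote, and reads the variable names stored in the index with tf.train.list_variables. TinyModel is a hypothetical tf.Module made up for illustration, and the sketch assumes TensorFlow 2.x. Depending on your version you may also see small extras such as fingerprint.pb or an empty assets/ folder.

```python
import os
import tempfile

import tensorflow as tf


class TinyModel(tf.Module):
    """Hypothetical two-variable linear model, used only for illustration."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.ones((3, 2)), name="w")
        self.b = tf.Variable(tf.zeros((2,)), name="b")

    @tf.function(input_signature=[tf.TensorSpec(shape=(None, 3), dtype=tf.float32)])
    def __call__(self, x):
        return tf.matmul(x, self.w) + self.b


# Write a SavedModel directory (graph in saved_model.pb, weights under variables/).
export_dir = os.path.join(tempfile.mkdtemp(), "my_model")
tf.saved_model.save(TinyModel(), export_dir)

# Collect every file TensorFlow wrote, relative to the export directory.
saved_files = sorted(
    os.path.relpath(os.path.join(root, name), export_dir)
    for root, _, names in os.walk(export_dir)
    for name in names
)
for path in saved_files:
    print(path)

# variables.index maps checkpoint keys to the tensors stored in variables.data-*.
variables_prefix = os.path.join(export_dir, "variables", "variables")
variable_entries = tf.train.list_variables(variables_prefix)
variable_names = [name for name, _ in variable_entries]
for name, shape in variable_entries:
    print(name, shape)

# Reload architecture and weights together without touching the files directly.
loaded = tf.saved_model.load(export_dir)
result = loaded(tf.ones((1, 3)))
print(result.numpy())
```

The variable names printed from the index are object-based checkpoint keys derived from attribute names (for example, entries for w and b), which is how the index ties each name to its bytes in the data file.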

Important Notes:

- Keras can also save models in the HDF5 format (.h5, .hdf5).
- While you can serialize objects with Python's pickle, it's generally not recommended for TensorFlow models due to potential compatibility issues.

This Python code demonstrates how to create, train, save, load, and use a simple convolutional neural network model using TensorFlow and Keras. It defines a model architecture, simulates training with random data, saves the trained model to disk, loads the saved model back into memory, and uses the loaded model to make predictions. The example highlights the basic workflow for saving and loading Keras models for later use.

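```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

# --- Building a simple model ---
model = keras.Sequential(
    [
        layers.Input(shape=(28, 28, 1)),
        layers.Conv2D(32, kernel_size=3, activation="relu"),
        layers.MaxPooling2D(pool_size=(2, 2)),
        layers.Flatten(),
        layers.Dense(10, activation="softmax"),
    ]
)
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

# --- Simulating some training (random data, no real dataset) ---
# sparse_categorical_crossentropy expects integer class labels of shape (batch,),
# not float targets of shape (batch, 10).
x_train = tf.random.normal((10, 28, 28, 1))
y_train = tf.random.uniform((10,), maxval=10, dtype=tf.int32)
model.fit(x_train, y_train, epochs=1)

# --- Saving the model ---
# With Keras 2 (TensorFlow 2.15 and earlier), a path without an extension writes a
# SavedModel directory. Keras 3 (TensorFlow 2.16+) rejects such a path and expects
# e.g. model.save("my_model.keras") instead.
model.save("my_model")
# This creates a directory containing:
# - my_model/saved_model.pb
# - my_model/variables/variables.data-00000-of-00001
# - my_model/variables/variables.index
# (plus small extras such as an assets/ folder, depending on the version)

# --- Loading the model ---
loaded_model = keras.models.load_model("my_model")

# --- Using the loaded model ---
# Make predictions, continue training, etc.
predictions = loaded_model.predict(tf.random.normal((1, 28, 28, 1)))
```

Explanation: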

- The model.save("my_model") line is where the magic happens: it saves the model to the my_model directory, creating the three files described above.
- keras.models.load_model() loads the saved model, including its architecture and trained weights.

Key Points:

- Be careful when sharing the .pb file containing the architecture; it might reveal sensitive information about your model's design.
- Alternatives to model.save: While model.save is convenient, the lower-level tf.saved_model.save function gives you finer control over the saving process. This is particularly useful for complex models or when you need to customize the saved model's structure, such as which functions are exported as serving signatures.
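As an illustration of that finer control, the following sketch uses tf.saved_model.save with an explicit signatures mapping, so you decide which function is exported and under which key. Scaler is a hypothetical tf.Module invented for this example; the sketch assumes TensorFlow 2.x and is not the only way to configure signatures.

```python
import os
import tempfile

import tensorflow as tf


class Scaler(tf.Module):
    """Hypothetical module that multiplies its input by a trainable factor."""

    def __init__(self):
        super().__init__()
        self.factor = tf.Variable(2.0)

    # A fixed input signature lets this function be exported as a serving signature.
    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
    def scale(self, x):
        return {"scaled": x * self.factor}


module = Scaler()
export_dir = os.path.join(tempfile.mkdtemp(), "scaler")

# Choose exactly which function is exported, and under what signature key.
tf.saved_model.save(module, export_dir, signatures={"serving_default": module.scale})

loaded = tf.saved_model.load(export_dir)
serve = loaded.signatures["serving_default"]
# Signature functions are called with keyword arguments named after their inputs.
outputs = serve(x=tf.constant([1.0, 2.0, 3.0]))
print(list(loaded.signatures.keys()))
print(outputs["scaled"].numpy())
```

Returning a dictionary from the exported function gives the signature named outputs ("scaled" here), which serving tools can then refer to by name.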

In conclusion, understanding the mechanics of saving and loading TensorFlow/Keras models is fundamental for any machine learning practitioner. The three files generated during the saving process work in tandem to store your model's architecture and trained parameters, ensuring portability and reusability. While the process is typically straightforward with model.save and load_model, being mindful of potential compatibility issues, custom objects, and deployment optimization techniques is crucial. As you delve into more complex scenarios, exploring the SavedModel format and adopting robust version control practices will become increasingly important for managing and deploying your models effectively. Remember to prioritize security considerations when sharing your models and always thoroughly test loaded models to guarantee their intended performance.
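One simple way to test a loaded model is a round-trip check: save, reload, and confirm that both copies produce the same predictions on identical inputs. The model below is a hypothetical stand-in, and the sketch assumes a TensorFlow/Keras version where model.save accepts a path ending in ".keras" (Keras 3 requires that extension).

```python
import os
import tempfile

import numpy as np
from tensorflow import keras

# Hypothetical small model; any Keras model can be checked the same way.
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(8, activation="relu"),
    keras.layers.Dense(3, activation="softmax"),
])

# Save to disk and load a fresh copy.
path = os.path.join(tempfile.mkdtemp(), "roundtrip.keras")
model.save(path)
restored = keras.models.load_model(path)

# Compare predictions from the in-memory model and the reloaded one.
x = np.random.default_rng(0).normal(size=(5, 4)).astype("float32")
original_out = model.predict(x, verbose=0)
restored_out = restored.predict(x, verbose=0)
np.testing.assert_allclose(original_out, restored_out, rtol=1e-6, atol=1e-6)
print("Reloaded model reproduces the original predictions")
```

A small tolerance is used rather than exact equality so the check stays robust to harmless floating-point differences between runs.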

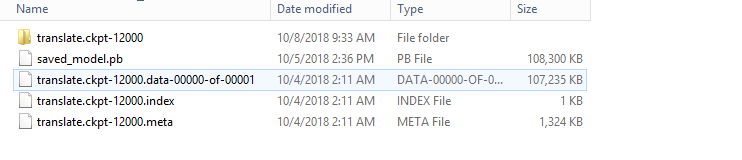

Error Using Tensorflow models into KNIME or Keras Nodes - KNIME ... | Hello There, I’m trying to use some of the saved models from Tensorflow into Keras nodes. I have the below files: it gives me an error while loading this into Python Network reader or Keras Network reader. Is there a simple way of reading this model into KNIME without re-running the model again. @christian.dietz @MarcelW Appreciate any help. Thanks ! Mohammed Ayub

Error Using Tensorflow models into KNIME or Keras Nodes - KNIME ... | Hello There, I’m trying to use some of the saved models from Tensorflow into Keras nodes. I have the below files: it gives me an error while loading this into Python Network reader or Keras Network reader. Is there a simple way of reading this model into KNIME without re-running the model again. @christian.dietz @MarcelW Appreciate any help. Thanks ! Mohammed Ayub Save and load models | TensorFlow Core | Apr 3, 2024 ... Model progress can be saved during and after training. This means a model can resume where it left off and avoid long training times.

Save and load models | TensorFlow Core | Apr 3, 2024 ... Model progress can be saved during and after training. This means a model can resume where it left off and avoid long training times. How to save my model to use it later - Beginners - Hugging Face ... | Hello Amazing people, This is my first post and I am really new to machine learning and Hugginface. I followed this awesome guide here multilabel Classification with DistilBert and used my dataset and the results are very good. I am having a hard time know trying to understand how to save the model I trainned and all the artifacts needed to use my model later. I tried at the end of the tutorial: torch.save(trainer, 'my_model') but I got this error msg: AttributeError: Can't pickle local ...

How to save my model to use it later - Beginners - Hugging Face ... | Hello Amazing people, This is my first post and I am really new to machine learning and Hugginface. I followed this awesome guide here multilabel Classification with DistilBert and used my dataset and the results are very good. I am having a hard time know trying to understand how to save the model I trainned and all the artifacts needed to use my model later. I tried at the end of the tutorial: torch.save(trainer, 'my_model') but I got this error msg: AttributeError: Can't pickle local ... Using the SavedModel format | TensorFlow Core | Mar 23, 2024 ... If you just want to save/load weights during training, refer to the checkpoints guide. Caution: TensorFlow models are code and it is important ...

Using the SavedModel format | TensorFlow Core | Mar 23, 2024 ... If you just want to save/load weights during training, refer to the checkpoints guide. Caution: TensorFlow models are code and it is important ... OSError: Unable to load weights from pytorch checkpoint file ... | Hi, everyone. I need some help. I have been developing the Flask website that has embedded one of Transformer’s fine-tuned models within it. I fine-tuned the model with PyTorch. I’ve tested the web on my local machine and it worked at all. I used fine-tuned model that I’ve already saved the weight to use locally, as pictured in the figure below: The saved results contain: config.json pytorch_model.bin special_tokens_map.json tokenizer_config.json vocab.txt Then, I tried to deploy it to t...

OSError: Unable to load weights from pytorch checkpoint file ... | Hi, everyone. I need some help. I have been developing the Flask website that has embedded one of Transformer’s fine-tuned models within it. I fine-tuned the model with PyTorch. I’ve tested the web on my local machine and it worked at all. I used fine-tuned model that I’ve already saved the weight to use locally, as pictured in the figure below: The saved results contain: config.json pytorch_model.bin special_tokens_map.json tokenizer_config.json vocab.txt Then, I tried to deploy it to t...