The error "RuntimeError: Expected 4-dimensional input for 4-dimensional weight" is common in PyTorch when working with convolutional neural networks (CNNs). It occurs when the input tensor passed to a convolutional layer such as nn.Conv2d does not have the four-dimensional shape the layer expects. Recent PyTorch releases word the message differently ("Expected 3D (unbatched) or 4D (batched) input to conv2d"), but the underlying problem is the same kind of shape mismatch. This article walks through the causes of the error and how to resolve it.

In practice, the layer has received a tensor, usually an image, whose shape it cannot interpret. Here's a breakdown:

Understanding the Error

- 4D Input: Convolutional layers in PyTorch expect input tensors in the format (Batch Size, Channels, Height, Width).
  - Batch Size: Number of images processed simultaneously.
  - Channels: Number of color channels (e.g., 3 for RGB, 1 for grayscale).
  - Height, Width: Image dimensions.
- The Mismatch: The error arises when your input tensor doesn't have these four dimensions.
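As a minimal sketch of the (Batch Size, Channels, Height, Width) layout described above, the snippet below pushes a random batch through a convolutional layer and reads the four dimensions back. The batch of 8 RGB images at 32x32 pixels and the layer sizes are arbitrary values chosen for illustration.

```python
import torch
import torch.nn as nn

# Arbitrary illustrative sizes: 8 RGB images of 32x32 pixels.
batch = torch.randn(8, 3, 32, 32)  # (Batch Size, Channels, Height, Width)
conv = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3, padding=1)

output = conv(batch)
n, c, h, w = output.shape
print(f"batch={n}, channels={c}, height={h}, width={w}")

# A 2D tensor (Height, Width only) has neither a batch nor a channel
# dimension, so the layer rejects it with a RuntimeError.
try:
    conv(torch.randn(32, 32))
    rejected = False
except RuntimeError as err:
    rejected = True
    print(f"Error: {err}")
```

The exact wording of the error message depends on the installed PyTorch version, so the sketch prints it rather than matching on its text.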

Common Causes and Solutions

- Missing Batch Dimension: If you're processing a single image, you might forget to add the batch dimension.

    # Incorrect: input_tensor.shape is (3, 224, 224)
    input_tensor = torch.randn(3, 224, 224)

    # Correct: Add batch dimension using unsqueeze
    input_tensor = input_tensor.unsqueeze(0)  # Now (1, 3, 224, 224)

- Incorrect Data Loading: Ensure your dataset loader (e.g., DataLoader) is correctly transforming and shaping the images.

    # Example using torchvision.transforms
    transform = transforms.Compose([
        transforms.ToTensor(),          # Converts image to tensor
        transforms.Resize((224, 224)),  # Resize if needed
    ])

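- Input Shape Mismatch: Double-check that the in_channels of your model's first layer matches the number of channels in your images. nn.Conv2d does not fix the height or width of its input, but a channel mismatch, or a later fully connected layer sized for a different resolution, will still fail with a shape error.

    # Example: first layer expecting 3-channel (RGB) images
    self.conv1 = nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1)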

- Permute Dimensions: You might need to rearrange the dimensions of your input tensor.

    # If your input is (Height, Width, Channels)
    input_tensor = input_tensor.permute(2, 0, 1).unsqueeze(0)
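The fixes above can be combined into one small helper. The sketch below is hypothetical (to_nchw is not a PyTorch function) and assumes the caller knows whether a 3D image is stored channel-first or channel-last, since the shape alone cannot tell the two apart reliably.

```python
import torch

def to_nchw(image: torch.Tensor, layout: str = "CHW") -> torch.Tensor:
    """Return `image` as a 4D (N, C, H, W) tensor.

    `layout` names the dimension order of a 3D input: "CHW" or "HWC".
    Hypothetical helper for illustration; not part of PyTorch.
    """
    if image.dim() == 4:
        return image  # already batched; assumed to be in NCHW order
    if image.dim() != 3:
        raise ValueError(
            f"expected a 3D or 4D tensor, got shape {tuple(image.shape)}"
        )
    if layout == "HWC":
        image = image.permute(2, 0, 1)  # (H, W, C) -> (C, H, W)
    elif layout != "CHW":
        raise ValueError(f"unknown layout: {layout!r}")
    return image.unsqueeze(0)  # add the batch dimension

chw = to_nchw(torch.randn(3, 224, 224))
hwc = to_nchw(torch.randn(224, 224, 3), layout="HWC")
print(chw.shape, hwc.shape)
```

Passing the layout explicitly is a deliberate choice: guessing it from the size of the last dimension breaks for small images or unusual channel counts.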

Debugging Tips

- Print Shapes: Frequently print the shapes of your tensors (print(tensor.shape)) at different stages to identify where the mismatch occurs.
- Start Small: Begin with a very small dataset (even a single image) to isolate the issue.

Remember: The exact solution depends on how you're loading, preprocessing, and feeding your data into the model. Carefully examine each step to ensure your input tensor has the expected 4 dimensions.
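One way to print shapes without editing the model's forward method is a forward pre-hook, which runs just before each layer receives its input. The sketch below assumes a small nn.Sequential model made up for illustration; the layer sizes and the seen_shapes list are not from any particular project.

```python
import torch
import torch.nn as nn

model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 16, kernel_size=3, padding=1),
)

seen_shapes = []  # (layer name, input shape) pairs recorded by the hooks

def make_hook(name):
    def hook(module, inputs):
        # `inputs` is a tuple of the positional arguments passed to the layer.
        shape = tuple(inputs[0].shape)
        seen_shapes.append((name, shape))
        print(f"{name}: input shape {shape}")
    return hook

handles = [
    layer.register_forward_pre_hook(make_hook(name))
    for name, layer in model.named_children()
]

model(torch.randn(1, 3, 64, 64))

# Remove the hooks once debugging is done.
for handle in handles:
    handle.remove()
```

Because the hook fires before the layer runs, the offending shape is printed even when the layer itself then raises the error.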

This Python code demonstrates how to handle the common issue of missing batch dimensions when working with convolutional neural networks (CNNs) in PyTorch. It shows how to add a batch dimension to an input image tensor using unsqueeze(0). The code also provides an example of using torchvision.transforms for loading and preprocessing images, including resizing. Additionally, it illustrates how to permute image dimensions if the input image is not in the channel-first (CHW) format expected by PyTorch CNNs. The code emphasizes debugging by printing tensor shapes at each step and encourages adaptation to specific data loading pipelines and model input sizes.

    import torch
    import torch.nn as nn
    from torchvision import transforms

    # Example convolutional model
    class SimpleCNN(nn.Module):
        def __init__(self):
            super().__init__()
            self.conv1 = nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1)

        def forward(self, x):
            return self.conv1(x)

    model = SimpleCNN()

    # Simulate loading a single image (replace with your actual loading)
    image = torch.randn(3, 224, 224)  # Example: (Channels, Height, Width)
    print("Image shape:", image.shape)

    # 1. Missing batch dimension (incorrect)
    # Older PyTorch versions raise the RuntimeError here. Recent releases
    # accept an unbatched (C, H, W) input and return a 3D output instead.
    try:
        output = model(image)
        print("Output shape (no batch dimension):", output.shape)
    except RuntimeError as e:
        print(f"Error: {e}")

    # 2. Add batch dimension (correct)
    image = image.unsqueeze(0)  # Now (1, 3, 224, 224)
    output = model(image)
    print("Output shape (after adding batch dimension):", output.shape)

    # 3. Example using transforms for data loading
    transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Resize((224, 224)),  # Resize if needed
    ])

    # 4. Permute dimensions (if necessary)
    # Example: If your input is (Height, Width, Channels)
    image = torch.randn(224, 224, 3)
    image = image.permute(2, 0, 1).unsqueeze(0)  # Now (1, 3, 224, 224)
    output = model(image)
    print("Output shape (after permuting dimensions):", output.shape)

Explanation:

- Error Demonstration: The code first intentionally creates the error by passing an image tensor without a batch dimension.
- Adding Batch Dimension: The unsqueeze(0) method adds the necessary batch dimension.
- Transforms Example: Shows how to use torchvision.transforms to load and preprocess images, including resizing to the expected input size.
- Permute Dimensions: Demonstrates how to rearrange dimensions if your input image is in a different order (HWC instead of CHW).

Key Points:

- Debugging: The code includes print statements for tensor shapes to help you track the dimensions of your tensors at each step.
- Adapt to Your Code: Replace the example image loading and transformation with your actual data loading pipeline.
- Model Input Size: Make sure the Resize transform (or your image loading logic) matches the input size expected by your model. nn.Conv2d itself accepts any height and width, but later layers, such as fully connected layers, are usually sized for one specific resolution.

Input/Output Channels:

- The in_channels argument of your nn.Conv2d layer must match the number of channels in your input tensor.
- Similarly, out_channels determines the number of channels in the output tensor, which will be the in_channels for the next convolutional layer.
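The channel bookkeeping described above can be sketched as follows. The layer widths (3, 64, 128) and the 56x56 input are arbitrary; the point is that the second layer reads its in_channels from the first layer's out_channels, and that the input's channel count is checked before the forward pass.

```python
import torch
import torch.nn as nn

conv1 = nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, padding=1)
# Chain the layers: conv2 consumes exactly what conv1 produces.
conv2 = nn.Conv2d(
    in_channels=conv1.out_channels, out_channels=128, kernel_size=3, padding=1
)

x = torch.randn(4, 3, 56, 56)

# Dimension 1 of an NCHW tensor holds the channels.
assert x.shape[1] == conv1.in_channels, (
    f"expected {conv1.in_channels} channels, got {x.shape[1]}"
)

y = conv2(conv1(x))
print(y.shape)
```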

Kernel Size and Stride:

- While not directly related to the error, remember that the kernel size and stride affect the spatial dimensions (height and width) of the output tensor.

- Ensure these parameters are set correctly to produce output tensors of the expected size for subsequent layers.
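To make the effect of kernel size and stride concrete, the sketch below computes the output length along one spatial dimension using the formula given in the nn.Conv2d documentation. The function name conv_output_size is made up for this example.

```python
def conv_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial dimension of a convolution.

    Implements floor((size + 2*padding - dilation*(kernel_size - 1) - 1) / stride) + 1.
    """
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

same = conv_output_size(224, kernel_size=3, stride=1, padding=1)
halved = conv_output_size(224, kernel_size=7, stride=2, padding=3)
unpadded = conv_output_size(224, kernel_size=3, stride=2)
print(same, halved, unpadded)
```

Applying the function once for height and once for width gives the spatial shape that the next layer will receive.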

Alternative to unsqueeze():

- Instead of calling unsqueeze(0) to add the batch dimension, you can create a 4D tensor directly during initialization:

    input_tensor = torch.randn(1, 3, 224, 224)
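Two further options, sketched under the assumption that each image is already a (Channels, Height, Width) tensor: indexing with None adds a leading dimension just as unsqueeze(0) does, and torch.stack builds a batch out of several single images.

```python
import torch

image = torch.randn(3, 224, 224)

# Indexing with None inserts a new leading dimension, like unsqueeze(0).
single = image[None]

# torch.stack combines several (C, H, W) images into one (N, C, H, W) batch.
images = [torch.randn(3, 224, 224) for _ in range(4)]
batch = torch.stack(images)

print(single.shape, batch.shape)
```

torch.stack requires every image to have the same shape, so resizing has to happen before batching.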

Dataset Loading Best Practices:

- Use a well-established dataset class from torchvision.datasets or write your custom dataset class inheriting from torch.utils.data.Dataset.
- Implement proper image loading, transformation, and collation within your dataset class to ensure consistent input shapes.
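A minimal sketch of a custom dataset follows. RandomImageDataset is a hypothetical class that returns random tensors in place of real images; the point it illustrates is that each item is a 3D (C, H, W) tensor and the DataLoader's default collation stacks items into a 4D batch.

```python
import torch
from torch.utils.data import DataLoader, Dataset

class RandomImageDataset(Dataset):
    """Hypothetical dataset yielding random (C, H, W) images and labels."""

    def __init__(self, length=10, shape=(3, 64, 64)):
        self.length = length
        self.shape = shape

    def __len__(self):
        return self.length

    def __getitem__(self, index):
        # Stands in for a loaded and transformed image.
        image = torch.randn(self.shape)
        label = index % 2
        return image, label

loader = DataLoader(RandomImageDataset(), batch_size=4)
images, labels = next(iter(loader))
print(images.shape, labels.shape)
```

If __getitem__ already returned a 4D tensor, the collated batch would have five dimensions, which is another common way to trigger a dimension error.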

Beyond Images:

- Although this error is common with image data, the same principles apply to other data types processed by convolutional layers, such as time-series data (1D convolution) or volumetric data (3D convolution). The key is to understand the expected input shape for your specific convolutional layer.
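For comparison, the sketch below shows the batched input shapes for 1D and 3D convolutions. The channel counts and sizes are arbitrary illustrative values.

```python
import torch
import torch.nn as nn

conv1d = nn.Conv1d(in_channels=2, out_channels=4, kernel_size=3, padding=1)
conv3d = nn.Conv3d(in_channels=1, out_channels=4, kernel_size=3, padding=1)

series = torch.randn(8, 2, 100)         # (Batch, Channels, Length)
volume = torch.randn(8, 1, 16, 32, 32)  # (Batch, Channels, Depth, Height, Width)

out1d = conv1d(series)
out3d = conv3d(volume)
print(out1d.shape, out3d.shape)
```

In each case the batched input has two more dimensions than the convolution has spatial axes: one for the batch and one for the channels.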

Transfer Learning:

- When fine-tuning pre-trained models, ensure your input image size aligns with the architecture's expectations. Pre-trained models often come with specific input size requirements.

Batch Size Considerations:

- While a batch size of 1 is suitable for debugging, larger batch sizes are generally used during training for efficiency. Adjust your code to handle variable batch sizes gracefully.
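One common way to stay independent of the batch size is to avoid hard-coding it when flattening features before a fully connected layer. The sketch below uses a hypothetical TinyClassifier sized for 3x32x32 inputs and runs it with three different batch sizes.

```python
import torch
import torch.nn as nn

class TinyClassifier(nn.Module):
    """Hypothetical model for 3x32x32 inputs, for illustration only."""

    def __init__(self, num_classes=10):
        super().__init__()
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.fc = nn.Linear(8 * 32 * 32, num_classes)

    def forward(self, x):
        x = torch.relu(self.conv(x))
        # flatten(1) keeps dimension 0 (the batch) and merges the rest,
        # so no batch size is hard-coded.
        x = x.flatten(1)
        return self.fc(x)

model = TinyClassifier()
shapes = [tuple(model(torch.randn(n, 3, 32, 32)).shape) for n in (1, 4, 16)]
print(shapes)
```

Writing x.view(16, -1) instead would work only for batches of exactly 16 and would fail on a smaller final batch.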

This error occurs when feeding an incorrectly shaped image tensor into a PyTorch convolutional layer (nn.Conv2d).

Problem: Convolutional layers require a 4D input tensor: (Batch Size, Channels, Height, Width). Your input doesn't match this format.

Common Causes & Solutions:

| Cause | Description | Solution |
| --- | --- | --- |
| Missing Batch Dimension | Processing a single image without adding a batch dimension. | Use tensor.unsqueeze(0) to add a batch dimension. |
| Incorrect Data Loading | Dataset loader isn't transforming/shaping images correctly. | Verify transformations in your DataLoader (e.g., transforms.ToTensor(), transforms.Resize()). |
| Input Shape Mismatch | Model's first layer expects a different number of channels, or later layers expect a different image size. | Ensure the first layer's in_channels and the model's expected input size match your images. |
| Permute Dimensions | Input tensor dimensions are in the wrong order. | Use tensor.permute() to rearrange dimensions (e.g., from (Height, Width, Channels) to (Channels, Height, Width)). |

Debugging Tips:

- Print tensor shapes at different stages to pinpoint the mismatch.
- Start with a small dataset to isolate the issue.

Key Takeaway: Meticulously check each step of data loading, preprocessing, and feeding to ensure your input tensor has the required 4D format.

By understanding the causes and solutions presented in this article, you can effectively troubleshoot and resolve this common PyTorch error, ensuring that your convolutional neural networks receive input tensors in the correct format for successful training and inference.

-

RuntimeError: Expected 4-dimensional input for 4-dimensional ... | import os import numpy as np import torch import torch.nn as nn import torch.nn.functional as F from torch.autograd import Variable import torch.utils.data as data import torchvision from torchvision import transforms EPOCHS = 2 BATCH_SIZE = 10 LEARNING_RATE = 0.003 TRAIN_DATA_PATH = r"path" TEST_DATA_PATH = r"path" TRANSFORM_IMG = transforms.Compose( [transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]) train_data = torchvision.datasets.ImageFolder(root=TRA...

RuntimeError: Expected 4-dimensional input for 4-dimensional ... | import os import numpy as np import torch import torch.nn as nn import torch.nn.functional as F from torch.autograd import Variable import torch.utils.data as data import torchvision from torchvision import transforms EPOCHS = 2 BATCH_SIZE = 10 LEARNING_RATE = 0.003 TRAIN_DATA_PATH = r"path" TEST_DATA_PATH = r"path" TRANSFORM_IMG = transforms.Compose( [transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]) train_data = torchvision.datasets.ImageFolder(root=TRA...

-

Expected 5-dimensional input for 5-dimensional weight but got 4 ... | May 31, 2021 ... Your model expect the 5-dimensional input, same with [32,3,1,5,5] shape. But you input the [3,256,128,128] shape.

Expected 5-dimensional input for 5-dimensional weight but got 4 ... | May 31, 2021 ... Your model expect the 5-dimensional input, same with [32,3,1,5,5] shape. But you input the [3,256,128,128] shape.

-

Expected 4-dimensional input, got 3-dimensional input - PyTorch ... | Hi. I have 3-dimensional input tensor with size (1,128, 100) when the agent selects the action and (batch_size, 128, 100) when the agent trains. The input is a sequence of words that tokenized and get vector for every token from Word2Vec model and concatenate to a tensor. So 128 is the number of tokens and 100 is W2V vector size. In this convolutional network: class Actor(nn.Module): def init(self, state_dim, hidden_dim, action_dim): super(Actor, self).init() self...

Expected 4-dimensional input, got 3-dimensional input - PyTorch ... | Hi. I have 3-dimensional input tensor with size (1,128, 100) when the agent selects the action and (batch_size, 128, 100) when the agent trains. The input is a sequence of words that tokenized and get vector for every token from Word2Vec model and concatenate to a tensor. So 128 is the number of tokens and 100 is W2V vector size. In this convolutional network: class Actor(nn.Module): def init(self, state_dim, hidden_dim, action_dim): super(Actor, self).init() self...

- ![Expected 4-dimensional input for 4-dimensional weight 64, 3, 3, 3 ... [Expected 4-dimensional input for 4-dimensional weight 64, 3, 3, 3 ... | May 7, 2022 ... 问题原因Expected 4-dimensional input for 4-dimensional weight [64, 3, 3, 3], but got 3-dimensional input of size [3, 224, 224] instead找到 ...

-

![RuntimeError: Given groups=1, weight[64, 3, 3, 3], so expected input ...](https://discuss.pytorch.org/uploads/default/original/2X/1/15a7e2573aeb9e6ba8995f824d3b63171a433041.png) RuntimeError: Given groups=1, weight[64, 3, 3, 3], so expected input ... | Why am I getting this error? RuntimeError: Given groups=1, weight[64, 3, 3, 3], so expected input[16, 64, 256, 256] to have 3 channels, but got 64 channels instead I wrote an implementation of U-net. class double_conv(nn.Module): def init(self, in_ch, out_ch): super(double_conv, self).init() self.conv1 = nn.Conv2d(in_ch, out_ch, 3, padding=1) self.conv2 = nn.Conv2d(in_ch, out_ch, 3, padding=1) def forward(self, x): x = F.relu(self.conv1(x)) x = F.relu(self...

RuntimeError: Given groups=1, weight[64, 3, 3, 3], so expected input ... | Why am I getting this error? RuntimeError: Given groups=1, weight[64, 3, 3, 3], so expected input[16, 64, 256, 256] to have 3 channels, but got 64 channels instead I wrote an implementation of U-net. class double_conv(nn.Module): def init(self, in_ch, out_ch): super(double_conv, self).init() self.conv1 = nn.Conv2d(in_ch, out_ch, 3, padding=1) self.conv2 = nn.Conv2d(in_ch, out_ch, 3, padding=1) def forward(self, x): x = F.relu(self.conv1(x)) x = F.relu(self...

-

UNet Training Error: Size of Tensors Mismatched · Issue #678 ... | I'm currently experiencing mismatch between my input tensors while trying to train UNet with BraTS2018 data. I'm working off of the spleen example, which has been very helpful, but I've been unable...

UNet Training Error: Size of Tensors Mismatched · Issue #678 ... | I'm currently experiencing mismatch between my input tensors while trying to train UNet with BraTS2018 data. I'm working off of the spleen example, which has been very helpful, but I've been unable...

-

Trying to get the loss of a CNN, but getting three (contradicting ... | Hello! I’ve managed to get a small CNN model running, and am now trying to extract its outputs (the loss, mainly). My code for running the data through the CNN looks as follows: # Get the model information. model, opt = get_model() # Define the loss function. loss_func = F.cross_entropy # Run the data through the neural network: for epoch in range(epochs): for train_x, train_y in train_dl: # Create a prediction. pred = model(train_x.permute(0, 3, 1, 2)) # Reshaping...

Trying to get the loss of a CNN, but getting three (contradicting ... | Hello! I’ve managed to get a small CNN model running, and am now trying to extract its outputs (the loss, mainly). My code for running the data through the CNN looks as follows: # Get the model information. model, opt = get_model() # Define the loss function. loss_func = F.cross_entropy # Run the data through the neural network: for epoch in range(epochs): for train_x, train_y in train_dl: # Create a prediction. pred = model(train_x.permute(0, 3, 1, 2)) # Reshaping...

-

RuntimeError: Expected 2D (unbatched) or 3D (batched) input to ... | Running the default inference parameters on the v1 branch appears to lead to a dimension error in the UnetUp class here: https://github.com/YuvalNirkin/fsgan/blob/v1/models/simple_unet.py#L135 pyth...

RuntimeError: Expected 2D (unbatched) or 3D (batched) input to ... | Running the default inference parameters on the v1 branch appears to lead to a dimension error in the UnetUp class here: https://github.com/YuvalNirkin/fsgan/blob/v1/models/simple_unet.py#L135 pyth...

-

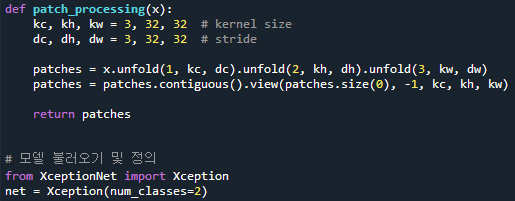

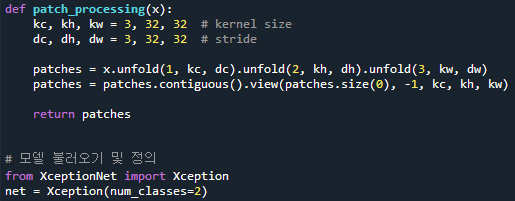

RuntimeError: Expected 4-dimensional input for 4-dimensional ... | Hello, I’m currently using a code to learn RGB images of 256x256 size using Xception. This time, I found and used the code to learn using Patch Processing. Thanks to this post, I succeeded in dividing the sensor into patches, but as the number of patches parameter was used, the 4-dimensional sensor became 5-dimensional, making it difficult to learn. I searched, but there were many 4D-3D transformations, but I couldn’t find any 4D-5D transformations. I wonder how to use Xception as it is by main...

RuntimeError: Expected 4-dimensional input for 4-dimensional ... | Hello, I’m currently using a code to learn RGB images of 256x256 size using Xception. This time, I found and used the code to learn using Patch Processing. Thanks to this post, I succeeded in dividing the sensor into patches, but as the number of patches parameter was used, the 4-dimensional sensor became 5-dimensional, making it difficult to learn. I searched, but there were many 4D-3D transformations, but I couldn’t find any 4D-5D transformations. I wonder how to use Xception as it is by main...