PyTorch RuntimeError: Tensor Size Mismatch at Dimension 0

Learn how to troubleshoot and resolve the PyTorch RuntimeError "The size of tensor a (4) must match the size of tensor b (3) at non-singleton dimension 0" in your deep learning projects.

Learn how to troubleshoot and resolve the PyTorch RuntimeError "The size of tensor a (4) must match the size of tensor b (3) at non-singleton dimension 0" in your deep learning projects.

In PyTorch, encountering the "RuntimeError: The size of tensor a (...) must match the size of tensor b (...) at non-singleton dimension ..." message indicates a shape mismatch between tensors during an operation. This error commonly occurs when performing element-wise operations like addition, subtraction, or concatenation, where the involved tensors lack compatible shapes. This introduction delves into the nuances of this error, exploring its causes and providing solutions to resolve it effectively.

The error "RuntimeError: The size of tensor a (...) must match the size of tensor b (...) at non-singleton dimension ..." in PyTorch signifies a shape mismatch between tensors during an operation. Here's a breakdown:

Understanding the Error: PyTorch performs element-wise operations. This error arises when you try operations like addition, subtraction, or concatenation, and the tensors involved don't have compatible shapes.

"Non-Singleton Dimension": A singleton dimension is one with a size of 1. The error message highlights that the mismatch occurs in a dimension that is not a singleton dimension in at least one of the tensors.

a = torch.randn(4)

b = torch.randn(3)

# Trying to add tensors of different sizes

c = a + b # This will throw an errorCommon Causes and Solutions:

Input Data Mismatch: Verify the dimensions of your input data. Ensure they align with your network's architecture.

# Example: Input data with incorrect dimensions for a linear layer

linear_layer = nn.Linear(10, 5)

input_data = torch.randn(20, 15) # Incorrect: Should be (batch_size, 10)

output = linear_layer(input_data) # Error!Incorrect Reshaping: Double-check the output shapes after reshaping operations (view, reshape, transpose).

x = torch.randn(4, 1, 28, 28)

# Incorrect reshaping, leading to a mismatch

y = x.view(4, 28 * 28) # Should be x.view(4, -1) for correct flatteningPermute/Transpose Errors: Be mindful of the order of dimensions when using permute or transpose.

Broadcasting Mismatch: PyTorch allows broadcasting, where smaller tensors are expanded to match larger ones. However, this has rules. If dimensions intended for broadcasting don't align, you'll get this error.

Debugging Tips:

.shape to pinpoint the mismatch.Remember, resolving this error involves carefully examining your code, understanding the expected tensor shapes at each step, and ensuring your operations align with those shapes.

The Python code demonstrates common shape errors in PyTorch and how to fix them. It covers input data mismatch with linear layers, incorrect reshaping using the view function, errors in permuting tensor dimensions, and broadcasting errors due to incompatible shapes. Each example includes the error-causing code, the error message, and the corrected code with explanations.

import torch

import torch.nn as nn

# Example 1: Input Data Mismatch

linear_layer = nn.Linear(10, 5)

# Incorrect input data dimensions

input_data = torch.randn(20, 15)

try:

output = linear_layer(input_data)

except RuntimeError as e:

print(f"Error: {e}")

print("Input data shape:", input_data.shape)

print("Expected input features:", linear_layer.in_features)

# Correcting the input data dimensions

input_data_corrected = torch.randn(20, 10)

output = linear_layer(input_data_corrected)

print("Corrected output shape:", output.shape)

# Example 2: Incorrect Reshaping

x = torch.randn(4, 1, 28, 28)

# Incorrect reshaping

try:

y = x.view(4, 28 * 28)

print(y.shape)

except RuntimeError as e:

print(f"Error: {e}")

# Correct reshaping

y_corrected = x.view(4, -1)

print("Corrected reshaped shape:", y_corrected.shape)

# Example 3: Permute/Transpose Errors

z = torch.randn(2, 3, 4)

# Incorrect permutation (trying to permute a dimension that doesn't exist)

try:

z_permuted = z.permute(0, 3, 1)

except RuntimeError as e:

print(f"Error: {e}")

# Correct permutation

z_permuted_corrected = z.permute(2, 1, 0)

print("Corrected permuted shape:", z_permuted_corrected.shape)

# Example 4: Broadcasting Mismatch

a = torch.randn(4, 3)

b = torch.randn(4)

# Trying to add tensors with incompatible shapes for broadcasting

try:

c = a + b

except RuntimeError as e:

print(f"Error: {e}")

# Reshaping 'b' to enable broadcasting

b_reshaped = b.view(4, 1)

c_corrected = a + b_reshaped

print("Corrected output shape (after broadcasting):", c_corrected.shape)Explanation:

view operation and how to fix it using -1 to infer the correct dimension.permute when trying to access a non-existent dimension and provides the correct permutation.This code provides concrete examples of the error and how to resolve it in different scenarios, making it easier to understand and debug similar issues in your PyTorch code.

These notes expand on the provided information, offering deeper insights and practical advice:

Understanding the Importance of Tensor Shapes:

Beyond the Obvious:

Advanced Debugging:

assert statements to check tensor shapes at critical points in your code. This helps catch errors early.

assert x.shape[1] == linear_layer.in_features, "Input features mismatch!" Broadcasting in Detail:

view, unsqueeze, or expand_as to achieve the desired alignment.Key Takeaway: Mastering tensor shapes is essential for effective PyTorch development. By carefully analyzing your code, using debugging tools, and understanding broadcasting, you can overcome shape mismatches and build robust deep learning models.

This table summarizes the error and provides solutions:

| Error Description | Meaning | Common Causes | Solutions |

|---|---|---|---|

| RuntimeError: The size of tensor a (...) must match the size of tensor b (...) at non-singleton dimension ... | Tensors involved in an operation (e.g., addition, concatenation) have incompatible shapes. The mismatch occurs in a dimension that is not 1 (singleton) in at least one tensor. |

1. Input Data Mismatch: Input data dimensions don't match the network architecture. 2. Incorrect Reshaping: Errors in view, reshape, or transpose operations result in incorrect output shapes. 3. Permute/Transpose Errors: Incorrect dimension order when using permute or transpose. 4. Broadcasting Mismatch: Dimensions intended for broadcasting don't align. |

1. Verify Input Data: Check and correct input data dimensions. 2. Double-Check Reshaping: Review and fix reshaping operations to produce the desired output shapes. 3. Review Permute/Transpose: Ensure the correct order of dimensions when using these functions. 4. Understand Broadcasting Rules: Ensure dimensions intended for broadcasting align with PyTorch's rules. |

| Debugging Tips: |

1. Print Tensor Shapes: Use .shape to print and compare tensor dimensions. 2. Use a Debugger: Step through code to observe tensor shapes at different points. |

To conclude, the "RuntimeError: The size of tensor a (...) must match the size of tensor b (...) at non-singleton dimension ..." error in PyTorch highlights a fundamental aspect of tensor operations: shape compatibility. This error, often encountered during element-wise operations or reshaping, signifies that the tensors involved have mismatched dimensions. By understanding the root causes, such as input data mismatches, incorrect reshaping, or improper broadcasting, and by utilizing debugging techniques like printing tensor shapes and using a debugger, you can effectively resolve this error. Remember that mastering tensor shapes is crucial for successful PyTorch development, enabling you to build and debug robust deep learning models.

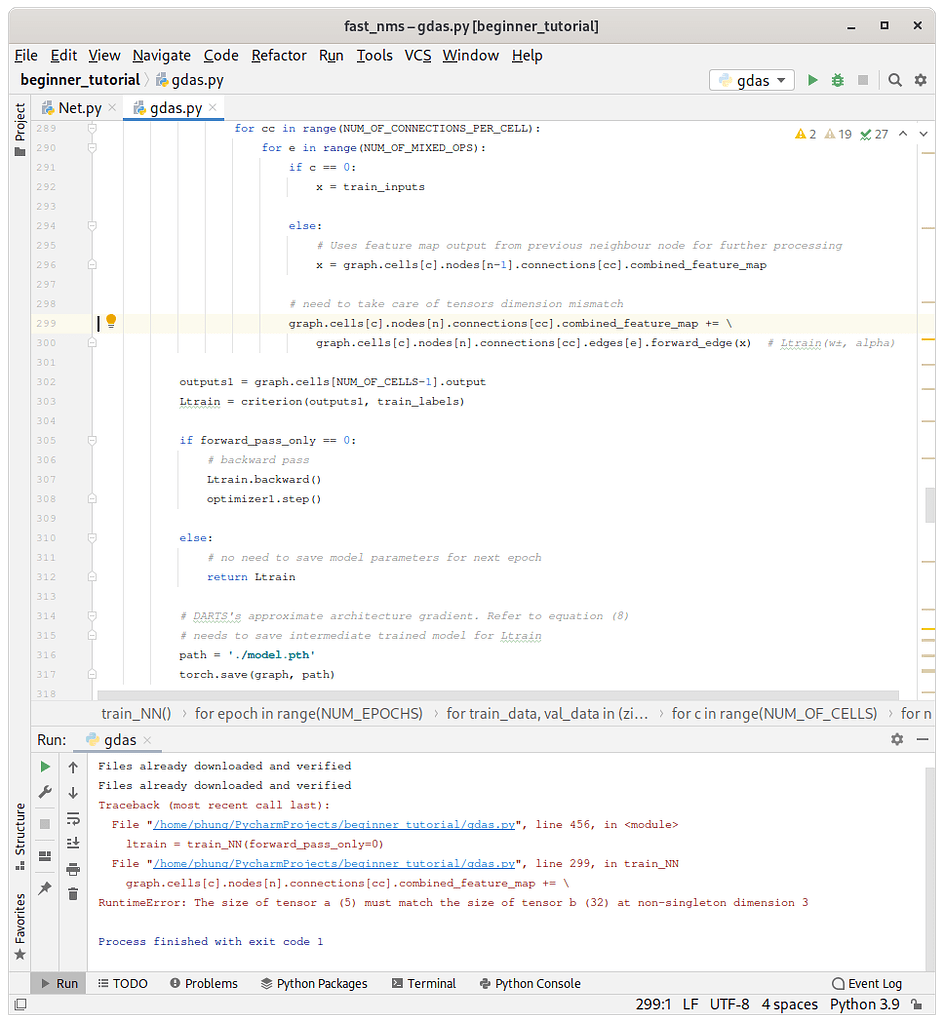

RuntimeError: The size of tensor a (5) must match the size of tensor ... | For the RuntimeError: The size of tensor a (5) must match the size of tensor b (32) at non-singleton dimension 3 , may I know why tensor b is of size 32 ? and what does it exactly mean by “singleton dimension 3” ? # https://github.com/D-X-Y/AutoDL-Projects/issues/99 import torch import torch.utils.data import torch.nn as nn import torch.nn.functional as F import torch.optim as optim import torchvision import torchvision.transforms as transforms import numpy as np USE_CUDA = torch.cuda.is_...

RuntimeError: The size of tensor a (5) must match the size of tensor ... | For the RuntimeError: The size of tensor a (5) must match the size of tensor b (32) at non-singleton dimension 3 , may I know why tensor b is of size 32 ? and what does it exactly mean by “singleton dimension 3” ? # https://github.com/D-X-Y/AutoDL-Projects/issues/99 import torch import torch.utils.data import torch.nn as nn import torch.nn.functional as F import torch.optim as optim import torchvision import torchvision.transforms as transforms import numpy as np USE_CUDA = torch.cuda.is_... Size mismatch when running FasterRCNN in parallel - PyTorch ... | Hi all, I’m following this tutorial (https://pytorch.org/tutorials/intermediate/torchvision_tutorial.html) and I have everything working fine on a single GPU with a batch size of 4 and a custom dataset (I’m using PyTorch 1.2 and torchvision 0.4 with 2 GPUs) I’m trying to get it to work either with DataParallel or DistributedDataParallel (as per https://github.com/pytorch/vision/blob/master/references/detection/train.py). I’m getting this error with DataParallel: Traceback (most recent call l...

Size mismatch when running FasterRCNN in parallel - PyTorch ... | Hi all, I’m following this tutorial (https://pytorch.org/tutorials/intermediate/torchvision_tutorial.html) and I have everything working fine on a single GPU with a batch size of 4 and a custom dataset (I’m using PyTorch 1.2 and torchvision 0.4 with 2 GPUs) I’m trying to get it to work either with DataParallel or DistributedDataParallel (as per https://github.com/pytorch/vision/blob/master/references/detection/train.py). I’m getting this error with DataParallel: Traceback (most recent call l... briaai/RMBG-1.4 · The size of tensor a (4) must match the size of ... | Apr 22, 2024 ... The size of tensor a (4) must match the size of tensor b (3) at non-singleton dimension 1. #28. by DarkCava1 - opened Apr 22, 2024.

briaai/RMBG-1.4 · The size of tensor a (4) must match the size of ... | Apr 22, 2024 ... The size of tensor a (4) must match the size of tensor b (3) at non-singleton dimension 1. #28. by DarkCava1 - opened Apr 22, 2024. Trainer RuntimeError: The size of tensor a (462) must match the size ... | Hi, I am finetuning Whisper and run into a trainer issue and don’t know what to do: RuntimeError: The size of tensor a (462) must match the size of tensor b (448) at non-singleton dimension 1 The trace goes: --------------------------------------------------------------------------- RuntimeError Traceback (most recent call last) File :28 File ~/.local/lib/python3.9/site-packages/transformers/trainer.py:1515, in Trainer.train(self, resume_from_checkpoi...

Trainer RuntimeError: The size of tensor a (462) must match the size ... | Hi, I am finetuning Whisper and run into a trainer issue and don’t know what to do: RuntimeError: The size of tensor a (462) must match the size of tensor b (448) at non-singleton dimension 1 The trace goes: --------------------------------------------------------------------------- RuntimeError Traceback (most recent call last) File :28 File ~/.local/lib/python3.9/site-packages/transformers/trainer.py:1515, in Trainer.train(self, resume_from_checkpoi...

About Creating a New Task Questions - Isaac Gym - NVIDIA ... | Hi. I’ve read the documentation and the sample code, and I’ve tried to create my new reinforcement learning tasks, but I don’t understand the principles of the whole framework yet. I’ve taken some tensors and used them to build actions and rewards, but so far I haven’t been able to run them successfully because there are too many errors about tensors and dimensions, similar to the following: RuntimeError: mat1 dim 1 must match mat2 dim 0 @torch.jit.script def quat_mul(a, b): assert a.shape...

About Creating a New Task Questions - Isaac Gym - NVIDIA ... | Hi. I’ve read the documentation and the sample code, and I’ve tried to create my new reinforcement learning tasks, but I don’t understand the principles of the whole framework yet. I’ve taken some tensors and used them to build actions and rewards, but so far I haven’t been able to run them successfully because there are too many errors about tensors and dimensions, similar to the following: RuntimeError: mat1 dim 1 must match mat2 dim 0 @torch.jit.script def quat_mul(a, b): assert a.shape...