OpenCV calibrateCamera: Acceptable Return Values

Learn how to evaluate the re-projection error from cv::calibrateCamera in OpenCV and determine if your camera calibration is successful.


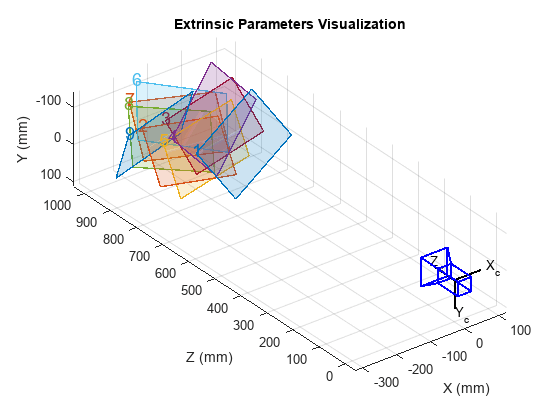

Camera calibration is a crucial step in computer vision applications, ensuring that your camera's intrinsic and extrinsic parameters are accurately determined. A key metric to assess the quality of your calibration is the Reprojection Error (RPE). This article provides a practical guide to understanding, evaluating, and improving RPE for successful camera calibration.

Understand Reprojection Error (RPE): After calibration, the 3D points of your calibration pattern are projected back onto each image using the estimated camera parameters. The RPE summarizes the distance between these projected points and the corner locations actually detected in the image. OpenCV reports it as the root-mean-square (RMS) of those distances, in pixels.
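As a rough sketch of that definition, the snippet below projects a planar grid through an ideal pinhole camera, perturbs the "detected" corners by a known offset, and computes the RMS distance. It assumes no lens distortion to stay short (cv2.calibrateCamera also models distortion), and the helper names `project_pinhole` and `rms_reprojection_error` are hypothetical, not OpenCV APIs. To our understanding, pooling all points and taking the square root of the mean squared distance mirrors how the single value returned by cv2.calibrateCamera is defined.

```python
import numpy as np


def project_pinhole(points_3d, K, R, t):
    """Project Nx3 world points to Nx2 pixels with an ideal pinhole camera (no distortion)."""
    cam = points_3d @ R.T + t          # world -> camera coordinates
    uvw = cam @ K.T                    # camera -> homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]    # perspective division


def rms_reprojection_error(detected, projected):
    """Square root of the mean squared Euclidean distance between matched 2D points."""
    sq_dist = np.sum((detected - projected) ** 2, axis=1)  # dx^2 + dy^2 per point
    return float(np.sqrt(np.mean(sq_dist)))


# Hypothetical camera: focal length 800 px, principal point (320, 240)
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)                          # camera looks straight at the pattern
t = np.array([0.0, 0.0, 10.0])         # pattern 10 units in front of the camera

# 6 x 9 grid of corners on the Z = 0 plane, one unit apart
grid = np.zeros((6 * 9, 3))
grid[:, :2] = np.mgrid[0:6, 0:9].T.reshape(-1, 2)

projected = project_pinhole(grid, K, R, t)
# Simulated detections: every corner is off by 0.3 px in x and 0.4 px in y
detected = projected + np.array([0.3, 0.4])

print(rms_reprojection_error(detected, projected))  # about 0.5 px
```

Because every point is off by the same (0.3, 0.4) px vector, each distance is 0.5 px and so is the RMS. With real data the residuals vary from point to point, and a few large ones raise the RMS more than they would raise a plain average.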

The value is the first output of cv2.calibrateCamera:

```python
ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(...)
print("Reprojection Error:", ret)
```

Acceptable RPE: There is no universal threshold. As a rule of thumb, an RPE below 1 pixel indicates a good calibration, but the acceptable value ultimately depends on your application's accuracy needs.
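One way to judge whether a given RPE is good enough is to translate it into your application's units. Under a simple pinhole approximation (an assumption: it ignores distortion and holds best near the image center), an image error of e pixels corresponds to an angular error of roughly e / f, where f is the focal length in pixels (fx or fy in `mtx`), and to a lateral offset of roughly e · Z / f at depth Z. The helper `pixel_error_to_world` below is a hypothetical back-of-the-envelope tool, not part of OpenCV.

```python
import math


def pixel_error_to_world(rpe_px, focal_px, depth):
    """Approximate lateral error (same units as depth) and angular error (degrees)
    implied by an image error of rpe_px pixels, using a pinhole model."""
    lateral = rpe_px * depth / focal_px             # similar triangles: e / f = X / Z
    angle_deg = math.degrees(math.atan2(rpe_px, focal_px))
    return lateral, angle_deg


# Example: 0.5 px RPE, focal length 1000 px, target 2000 mm away
lateral_mm, angle = pixel_error_to_world(0.5, 1000.0, 2000.0)
print(f"~{lateral_mm:.2f} mm lateral, ~{angle:.3f} degrees")  # → ~1.00 mm lateral, ~0.029 degrees
```

Treat the result as an order-of-magnitude estimate only: the RPE is a residual on the calibration images themselves, not a direct measurement of error on new data.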

Iterative Refinement: If RPE is too high:

Use flags in cv2.calibrateCamera (e.g., cv2.CALIB_FIX_PRINCIPAL_POINT) to constrain the optimization.

Visual Inspection:

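Use cv2.undistort with the obtained camera parameters to remove distortion from images, then check that lines that are straight in the scene also appear straight, particularly near the image edges.

Consider Application: Judge the result against the accuracy your task requires rather than against a fixed number.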

Remember, camera calibration is an iterative process. Experimentation and careful analysis of results are key to achieving satisfactory accuracy.
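When the overall RPE is too high, it helps to see which calibration images contribute most before refining. The sketch below assumes you already have, for each view, the detected corners and the corners reprojected with the calibrated parameters (with OpenCV these could come from cv2.projectPoints using that view's rvec and tvec, reshaped to Nx2). Synthetic residuals stand in for real data here, and the "flag views above twice the median" rule in the hypothetical `flag_outlier_views` helper is a heuristic of our own, not an OpenCV convention.

```python
import numpy as np


def per_view_rms(detected_views, projected_views):
    """RMS reprojection error for each view (each element is an Nx2 array)."""
    return np.array([
        np.sqrt(np.mean(np.sum((d - p) ** 2, axis=1)))
        for d, p in zip(detected_views, projected_views)
    ])


def overall_rms(detected_views, projected_views):
    """Pool all points from all views, then take the RMS (not the mean of per-view values)."""
    sq = np.concatenate([np.sum((d - p) ** 2, axis=1)
                         for d, p in zip(detected_views, projected_views)])
    return float(np.sqrt(np.mean(sq)))


def flag_outlier_views(errors, factor=2.0):
    """Indices of views whose RMS exceeds `factor` times the median RMS (heuristic)."""
    return np.flatnonzero(errors > factor * np.median(errors))


rng = np.random.default_rng(0)
n_points = 54
projected = [rng.uniform(0, 640, size=(n_points, 2)) for _ in range(5)]
# Four well-behaved views with 0.2 px noise per coordinate, and one view (index 3)
# with 2 px noise, standing in for e.g. a blurred image
noise = [0.2, 0.2, 0.2, 2.0, 0.2]
detected = [p + rng.normal(0, s, size=p.shape) for p, s in zip(projected, noise)]

errors = per_view_rms(detected, projected)
print("per-view RMS:", np.round(errors, 2))
print("overall RMS:", round(overall_rms(detected, projected), 2))
print("views to re-check:", flag_outlier_views(errors))
```

A single bad view can dominate the pooled RMS, so removing or retaking it and recalibrating is often more effective than adding flags. Recent OpenCV versions also provide cv2.calibrateCameraExtended, which returns per-view errors directly.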
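The visual check can also be backed by a simple number: after undistortion, the corners along one chessboard row should lie on a straight line. The hypothetical helper below (not an OpenCV function) fits a total-least-squares line to 2D points via SVD and reports the largest perpendicular deviation in pixels. With real data you might feed it undistorted corner positions, for example from cv2.undistortPoints with `P` set to the camera matrix; synthetic rows stand in here.

```python
import numpy as np


def max_line_deviation(points):
    """Largest perpendicular distance (pixels) from Nx2 points to their best-fit line."""
    centered = points - points.mean(axis=0)
    # The last right-singular vector is the direction of least variance,
    # i.e. the normal of the total-least-squares line through the centroid.
    _, _, vt = np.linalg.svd(centered)
    normal = vt[-1]
    return float(np.max(np.abs(centered @ normal)))


x = np.linspace(100.0, 540.0, 9)
straight_row = np.column_stack([x, np.full_like(x, 240.0)])
# A row that still bows by up to 3 px in the middle, as residual distortion might produce
bowed_row = np.column_stack([x, 240.0 + 3.0 * np.sin(np.linspace(0.0, np.pi, 9))])

print(round(max_line_deviation(straight_row), 3))  # → 0.0
print(round(max_line_deviation(bowed_row), 3))     # → 1.676
```

The deviation is measured from the best-fit line rather than from the chord between the end points, so a 3 px bow shows up as roughly 1.7 px.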

This Python code performs camera calibration using a chessboard pattern. It first detects and refines the chessboard corners in a set of calibration images, then calculates the camera's intrinsic and extrinsic parameters. The code then undistorts a test image using the obtained calibration parameters and displays the result. Finally, it optionally saves the calibration parameters for future use.

```python
import glob

import cv2
import numpy as np

# Chessboard size as the number of INNER corners: (corners per row, corners per column)
CHECKERBOARD = (6, 9)  # Example: 6 x 9 inner corners

# Termination criteria for the sub-pixel corner refinement (cv2.cornerSubPix)
criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)

# Prepare object points (3D points in real-world space) on the Z = 0 plane.
# Units are "one square"; multiply by your square size (e.g. in mm) for metric extrinsics.
objp = np.zeros((CHECKERBOARD[0] * CHECKERBOARD[1], 3), np.float32)
objp[:, :2] = np.mgrid[0:CHECKERBOARD[0], 0:CHECKERBOARD[1]].T.reshape(-1, 2)

# Arrays to store object points and image points from all images
objpoints = []     # 3D points in real-world space
imgpoints = []     # 2D points in the image plane
image_size = None  # (width, height) of the calibration images

# Load calibration images
images = glob.glob('calibration_images/*.jpg')  # Adjust path as needed

for fname in images:
    img = cv2.imread(fname)
    if img is None:  # skip files that could not be read
        continue
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    image_size = gray.shape[::-1]

    # Find chessboard corners
    found, corners = cv2.findChessboardCorners(gray, CHECKERBOARD, None)

    # If corners are found, refine them and store the point correspondences
    if found:
        objpoints.append(objp)
        corners2 = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
        imgpoints.append(corners2)

        # Draw and display the corners (optional, for visualization)
        cv2.drawChessboardCorners(img, CHECKERBOARD, corners2, found)
        cv2.imshow('img', img)
        cv2.waitKey(500)

cv2.destroyAllWindows()

if not objpoints:
    raise RuntimeError("No chessboard corners were found in the calibration images")

# Perform camera calibration; ret is the RMS reprojection error in pixels
ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, image_size, None, None)

# Print reprojection error
print("Reprojection Error:", ret)

# ------------------------------------------------------------------------
# Visual Inspection (Undistort an image)
# ------------------------------------------------------------------------
img = cv2.imread('test_image.jpg')  # Load an image to undistort
if img is None:
    raise FileNotFoundError("test_image.jpg could not be read")
h, w = img.shape[:2]
newcameramtx, roi = cv2.getOptimalNewCameraMatrix(mtx, dist, (w, h), 1, (w, h))

# Undistort the image
dst = cv2.undistort(img, mtx, dist, None, newcameramtx)

# Crop the image (optional, removes black borders left by undistortion)
x, y, w, h = roi
dst = dst[y:y+h, x:x+w]

cv2.imshow('Undistorted Image', dst)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Save calibration parameters (optional)
np.savez("calibration_params.npz", mtx=mtx, dist=dist, rvecs=rvecs, tvecs=tvecs)
```

Explanation:

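- Chessboard corners are detected in each calibration image with cv2.findChessboardCorners.
- The detected corners are refined to sub-pixel accuracy with cv2.cornerSubPix.
- The code calls cv2.calibrateCamera to calculate the camera matrix, the distortion coefficients, and the rotation and translation vectors of each view.
- It prints the reprojection error (ret) as a measure of calibration quality.
- A test image is undistorted using cv2.undistort and the calculated camera parameters.

Key Points: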

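Understanding RPE: The RPE is the RMS pixel distance between detected and reprojected pattern corners, so lower values mean the model fits the calibration images more closely.

Acceptable RPE: Below about 1 pixel is a common target; the real threshold is set by your application.

Iterative Refinement: When the RPE is too high, adjust the calibration flags or the set of calibration images and recalibrate.

Visual Inspection: Confirm a low RPE with undistorted images in which straight lines look straight.

Beyond the Basics: Wide-angle and fisheye lenses may be fit poorly by the default distortion model, especially near the image edges; OpenCV provides a separate cv2.fisheye module for such lenses.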

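This table summarizes key aspects of evaluating and refining camera calibration results:

| Aspect | Description |
|---|---|
| Reprojection error (RPE) | RMS distance, in pixels, between detected pattern corners and their reprojections; the first value returned by cv2.calibrateCamera |
| Acceptable RPE | No universal threshold; below about 1 pixel is a common target, but the requirement depends on the application |
| Iterative refinement | If the RPE is too high, constrain the optimization (e.g., with CALIB_FIX_PRINCIPAL_POINT) and recalibrate |
| Visual inspection | Undistort test images with cv2.undistort and check that straight lines appear straight |
| Application requirements | Judge the final result against the accuracy your task actually needs |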

By understanding the factors influencing RPE and employing a combination of quantitative analysis and visual inspection, you can achieve accurate camera calibration for your computer vision tasks. Remember that the acceptable RPE threshold is application-dependent, and that driving the RPE very low can involve trade-offs, such as capturing many more images or using a more complex distortion model that fits the calibration images closely but generalizes less well to new ones. This iterative process requires careful planning, execution, and analysis of results, ultimately leading to more reliable and precise computer vision applications.
