Kubernetes Pod Volume Node Affinity Conflict Troubleshooting

Learn how to troubleshoot and resolve the Kubernetes pod warning "1 node(s) had volume node affinity conflict" in your cluster.

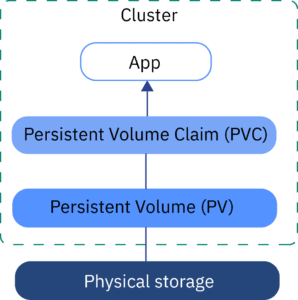

The error "volume node affinity conflict" in Kubernetes occurs when a pod cannot be scheduled because the PersistentVolume (PV) behind one of its PersistentVolumeClaims (PVCs) is tied to a zone or node that the pod cannot be scheduled onto. Kubernetes must place a pod where it can reach its persistent storage, so the scheduler rejects every node that conflicts with the volume's node affinity.

Let's break down why this happens and how to fix it:

Understanding the Problem

Common Causes

Troubleshooting and Solutions

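Check PVC and PV Locations:

Ensure the PVC and its PV are in the same zone as the nodes the pod can run on. Use `kubectl describe pvc <pvc-name>` and `kubectl describe pv <pv-name>` to verify.

Review Node Affinity Rules:

Are your pod's nodeAffinity or nodeSelector settings too strict?

```yaml
apiVersion: v1
kind: Pod
spec:
  affinity:
    nodeAffinity:
      # ... your rules here
```

Examine StorageClass Configuration:

Verify that the StorageClass supports multi-zone setups and that its parameters are correct.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
# ... your StorageClass definition
```

Consider Taints and Tolerations:

Ensure the pod tolerates the taints on the nodes that have the required storage.

Reschedule or Delete and Redeploy:

Allow Kubernetes to resolve minor conflicts by rescheduling the pod, or delete and redeploy it.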
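As an illustration of the taints-and-tolerations step, here is a minimal sketch of a pod that tolerates a taint on the nodes holding its storage. The taint `dedicated=storage:NoSchedule` is a hypothetical example, not taken from a real cluster; the pod, container, and claim names mirror the ones used in the worked example in this article. Substitute the taints that `kubectl describe node <node-name>` reports for your nodes.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  tolerations:
    - key: "dedicated"      # hypothetical taint key
      operator: "Equal"
      value: "storage"      # hypothetical taint value
      effect: "NoSchedule"
  containers:
    - name: my-container
      image: nginx:latest
      volumeMounts:
        - mountPath: /data
          name: my-volume
  volumes:
    - name: my-volume
      persistentVolumeClaim:
        claimName: my-pvc
```

A toleration only allows the pod onto the tainted nodes; it does not steer the pod there. The volume's node affinity still decides which of those nodes are eligible.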

Example: Relaxing Node Affinity

If your pod is too picky about nodes:

```yaml
# Before (too restrictive)
nodeAffinity:
  requiredDuringSchedulingIgnoredDuringExecution:
    nodeSelectorTerms:
      - matchExpressions:
          - key: kubernetes.io/hostname
            operator: In
            values: ["node1", "node2"]
```

```yaml
# After (more flexible)
nodeAffinity:
  preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 100
      preference:
        matchExpressions:
          - key: topology.kubernetes.io/zone
            operator: In
            values: ["your-zone"]
```

Important: Always back up your data and test changes in a non-production environment before applying them to live systems.

This example walks through a "volume node affinity conflict" in a Kubernetes cluster spanning two availability zones. A PersistentVolume is pinned to one zone, while the only nodes that can take the pod are in the other zone, so the scheduler reports a conflict. The fix is to make sure the pod and its storage end up in the same zone. Note that `allowedTopologies` is a StorageClass field, not a PVC field. The YAML below shows the problematic PV, PVC, and pod, followed by the solution.

Let's imagine you have a Kubernetes cluster spanning two availability zones: "us-central1-a" and "us-central1-b". You're trying to deploy a pod that needs persistent storage, but you're getting the dreaded "volume node affinity conflict" error.

1. The Problem:

Your PersistentVolume (PV) was provisioned in "us-central1-a", but your PersistentVolumeClaim (PVC) doesn't specify any zone preference. The only nodes with room for the pod are in "us-central1-b". The scheduler rejects those nodes because the volume is pinned to "us-central1-a", so the pod stays Pending with the conflict.

2. Code Examples:

a) Problematic Setup:

```yaml
# pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: topology.kubernetes.io/zone
              operator: In
              values:
                - us-central1-a
---
# pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 500Mi
  storageClassName: local-storage
---
# pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: my-container
      image: nginx:latest
      volumeMounts:
        - mountPath: /data
          name: my-volume
  volumes:
    - name: my-volume
      persistentVolumeClaim:
        claimName: my-pvc
```

b) Solution: Enforce Zone for PVC:

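```yaml
# storageclass.yaml (new; pvc.yaml stays as shown above)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner  # static local volumes; nothing is provisioned dynamically
volumeBindingMode: WaitForFirstConsumer    # delay binding until a pod using the PVC is scheduled
```

3. Explanation:

The zone for a PVC is enforced through its PV and its StorageClass rather than on the claim itself. A PersistentVolumeClaim has no allowedTopologies field; that field belongs to StorageClass, where it limits the zones in which volumes are dynamically provisioned. For the statically provisioned local PV above, the zone is already fixed by the PV's own nodeAffinity ("us-central1-a"). Setting volumeBindingMode: WaitForFirstConsumer on the StorageClass delays binding until a pod uses the claim, so the scheduler picks a node and a matching PV together. This ensures the pod and its storage reside in the same zone. The pod still needs at least one schedulable node in "us-central1-a".

Important Considerations:

* Review your pod's nodeAffinity settings to avoid overly restrictive rules.

By understanding the relationship between PVs, PVCs, zones, and node affinity, you can effectively troubleshoot and resolve "volume node affinity conflict" errors in your Kubernetes deployments.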
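For dynamically provisioned volumes, the zone restriction lives on the StorageClass, because `allowedTopologies` is a StorageClass field. The sketch below assumes a class named `zonal-storage` and uses the placeholder provisioner `example.com/example`; both are hypothetical, so replace them with your own class name and your cluster's CSI driver.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: zonal-storage               # hypothetical name
provisioner: example.com/example    # placeholder; use your cluster's CSI driver
volumeBindingMode: WaitForFirstConsumer
allowedTopologies:
  - matchLabelExpressions:
      - key: topology.kubernetes.io/zone
        values:
          - us-central1-a
```

A PVC opts in by setting `storageClassName: zonal-storage`. With `WaitForFirstConsumer`, the volume is created only after the pod has been scheduled, in a zone permitted by `allowedTopologies`.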

Visualization: Imagine Kubernetes as a matchmaking service. It tries to match pods (guests) with nodes (hotel rooms) that have the right resources. The "volume node affinity conflict" is like a guest whose luggage (the PV) has already been delivered to one hotel: the guest can only take a room there, and if none is free, the booking fails.

Impact: This error prevents pods from starting, impacting application availability. It's crucial to understand the root cause and apply the right fix.

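Debugging Tips:

* `kubectl describe pod <pod-name>`: Look for "Events" to see why scheduling failed.
* `kubectl get nodes -L topology.kubernetes.io/zone`: Check node zones (the topology.kubernetes.io/zone label).

Prevention:

* Define a clear zone strategy for workloads and their storage.
* Configure StorageClass defaults that match that strategy.
* Manage the configuration with Infrastructure-as-Code.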

Beyond Zones: While this note focuses on zones, the same principles apply to other node affinity constraints, such as specific hardware requirements or custom labels.
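To illustrate a constraint other than a zone, here is a minimal sketch of a local PV pinned to a single node through the `kubernetes.io/hostname` label. The PV name `my-node-local-pv` is hypothetical, and `node1` is assumed to be a real node name in your cluster; the remaining fields follow the PV used in the worked example.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-node-local-pv            # hypothetical name
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node1             # assumed node name
```

A pod that uses a claim bound to this PV can only run on `node1`. If that node is cordoned, tainted, or full, every other node reports the same "volume node affinity conflict".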

Community Resources: The Kubernetes community is vast! Don't hesitate to search forums, Stack Overflow, and the official documentation for more help and examples.

| Problem | Description | Causes | Solutions |
|---|---|---|---|
| "volume node affinity conflict" error | A pod can't be scheduled because its PersistentVolumeClaims (PVCs) and the underlying PersistentVolumes (PVs) are tied to different zones or nodes. | * Multi-AZ Clusters: Storage or PVCs are zone-specific. * Misconfigured Affinity: Overly restrictive nodeAffinity or nodeSelector rules. * StorageClass Issues: StorageClass doesn't support multi-zone setups or desired affinity. | 1. Check PVC and PV Locations: Ensure they are in the same zone using kubectl describe. 2. Review Node Affinity Rules: Relax overly strict rules in your pod's configuration. 3. Examine StorageClass Configuration: Verify multi-zone support and parameters. 4. Consider Taints and Tolerations: Ensure pod tolerates taints on nodes with required storage. 5. Reschedule or Delete and Redeploy: Allow Kubernetes to resolve minor conflicts. |

Key Concepts:

Example Solution: Relaxing overly restrictive nodeAffinity rules to allow scheduling in a specific zone.

Important: Always back up data and test changes in a non-production environment.

By addressing PVC and PV locations, reviewing node affinity rules, examining StorageClass configurations, considering taints and tolerations, and utilizing rescheduling or redeployment, you can overcome "volume node affinity conflict" errors. Visualizing the matchmaking process and understanding the impact of this error are crucial for effective troubleshooting. Debugging tips such as using kubectl commands and examining logs can help pinpoint the root cause. Implementing preventive measures like defining a clear zone strategy, configuring StorageClass defaults, and utilizing Infrastructure-as-Code can minimize the occurrence of such errors. Remember that community resources and online forums offer valuable support and insights for tackling Kubernetes challenges.
